Fully Autonomous Cars Are Unlikely, Says America’s Top Transportation Safety Official

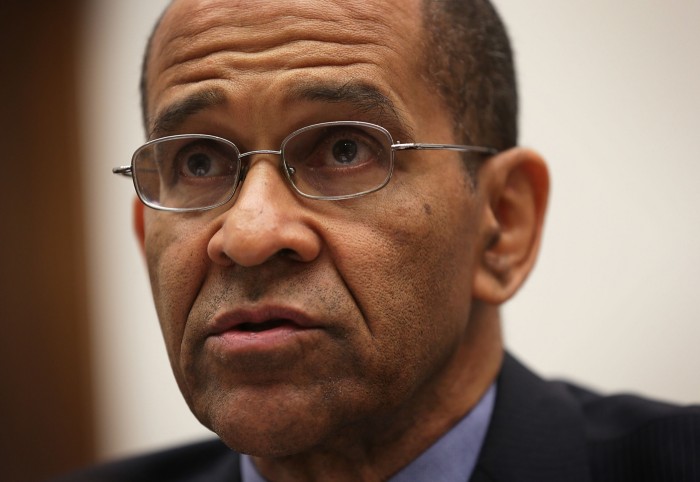

Auto accidents kill more than 33,000 Americans each year, more than homicide or prescription drug overdoses. Companies working on self-driving cars, such as Alphabet and Ford, say their technology can slash that number by removing human liabilities such as texting, drunkenness, and fatigue. But Christopher Hart, chairman of the National Transportation Safety Board, says his agency’s experience investigating accidents involving autopilot systems used in trains and planes suggests that humans can’t be fully removed from control. He told MIT Technology Review that future autos will be much safer, but that some will still need humans as copilots. What follows is a condensed transcript.

How optimistic are you that self-driving cars will cut into the auto accident death toll?

I'm very optimistic. For decades we've been looking at ways to mitigate injury when you have a crash. We’ve got seat belts, we’ve got air bags, we’ve got more robust [auto body] structures. Right now, we have the opportunity to prevent the crash altogether. And that's going to save tens of thousands of lives. That's the ideal end point: no crashes whatsoever.

Autopilot systems can also create new dangers. The NTSB has said that pilots’ overreliance on automation has caused crashes. Do you worry about this phenomenon being a problem for cars, too?

The ideal scenario that I talked about, saving the tens of thousands of lives a year, assumes complete automation with no human engagement whatsoever. I'm not confident that we will ever reach that point. I don’t see the ideal of complete automation coming anytime soon.

Some people just like to drive. Some people don't trust the automation so they're going to want to drive. [And] there’s no software designer in the world that's ever going to be smart enough to anticipate all the potential circumstances this software is going to encounter. The dog that runs out into the street, the person who runs up the street, the bicyclist, the policeman or the construction worker. Or the bridge collapses in a flood. There is no way that you're going to be able to design a system that can handle it.

The challenge is when you have not-so-complete automation, with still significant human engagement—that's when the complacency becomes an issue. That's when lack of skills becomes the issue. So our challenge is: how do we handle what is probably going to be a long-term scenario of still some human engagement in this largely automated system?

The recent fatality of a driver using Tesla’s Autopilot feature may be a failure of automation. Why is the NTSB investigating that crash?

We don't investigate most car crashes. What we bring to this table is experience [with] scenarios of introducing automation into complex, human-centric systems, which this one definitely is. We’re investigating this crash because we see that automation is coming in cars and we would like to try to help inform that process with our experience with other types of automation.

Like what?

Well, we investigated a people-mover accident in an airport. It collided with another people mover. And our investigation found that the problem was a maintenance problem. Even if you eliminate the operator, you've still got human error from the people who designed it, people who built it, people who maintain it.

Some people say that self-driving cars will have to make ethical decisions, for example deciding whom to harm when a collision is unavoidable. Is this a genuine problem?

I can give you an example I've seen mentioned in several places. My automated car is confronted by an 80,000-pound truck in my lane. Now the car has to decide whether to run into this truck and kill me, the driver, or to go up on the sidewalk and kill 15 pedestrians. That would [have to] be put into the system. Protect occupants or protect other people? That to me is going to take a federal government response to address. Those kinds of ethical choices will be inevitable.

In addition to just ethical choices—what if the system fails? Is the system going to fail in a way that minimizes [harm] to the public, other cars, bicyclists? The federal government is going to be involved.

What might that process look like?

The Federal Aviation Administration has a scheme whereby if something is less likely than one in a billion to happen you don't have to have an alternate load path [a fallback structure to bear the weight of the plane]. More likely than that and you need a fail-safe. Unless you can show that the wing spar failing—the wing coming off—is less than one in a billion, it's “likely” to happen. Then you need to have a plan B.

That same process is going to have to occur with cars. I think the government is going to have to come into play and say, “You need to show me a less than X likelihood of failure, or you need to show me a fail-safe that ensures that this failure won't kill people.” Setting the limit is something that I think is going to be in the federal government domain, not state government. I don't want 50 limits.
