How to Prevent a Plague of Dumb Chatbots

For the past few minutes I’ve been chatting with George Washington, and honestly he seems rather drunk. He also appears to have been hanging around with 20-somethings, because he keeps saying things like “cool, haha,” and “u wanna join my army or wut?”

This, of course, is not actually America’s first president. It is automated, conversational artificial intelligence, known as a chatbot, created by Drunk History, a comedy TV show, and made available through the messaging program Kik. It’s surprisingly entertaining, if not very coherent.

You can chat with all sorts of bots through a number of messaging services, including Kik, WeChat, Telegram, and, most recently, Facebook Messenger. Some are simply meant to entertain, but a growing number are designed to do something useful. You can now book a flight, peruse the latest tech headlines, and even buy a hamburger from Burger King by typing messages to a virtual helper. Startups are racing to offer tools for speeding the development, management, and “monetization” of these virtual butlers.

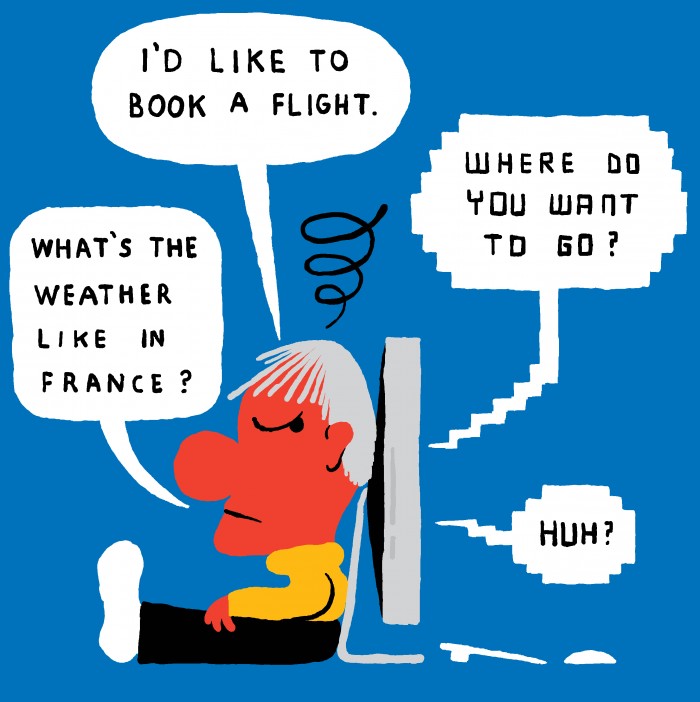

The trouble is that computers still have a hard time understanding human language in all its complexity and subtlety. Some impressive progress is being made, but chatbots are still prone to confusion and misunderstanding. And while drunken George Washington can make a virtue of that, it’s less charming when you’re trying to book a flight.

The best commercial chatbots will most likely be those that recognize their own limitations. “A pitfall is trying to do too many things at once,” says Paul Gray, director of platform services at Kik, which has offered integration for bots since 2014. “You should start off small and simple.”

30SecondstoFly, based in New York and Bangkok, is building a chatbot for travel booking that works via SMS or the messaging platform Slack. The company is using tools provided by API.ai, a California company that has built up a powerful suite of tools for handling queries written in natural language, but the chatbot still misunderstands people.

“We have colloquial language that’s very difficult; then we have specific requests that are not in our user scenarios,” says cofounder Felicia Schneiderhan. This led the company to develop its own tool for rerouting messages to human helpers.
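The two failure cases Schneiderhan describes, language the bot cannot parse confidently and requests outside its supported scenarios, suggest a simple routing rule. The sketch below is only an illustration of that idea, not 30SecondstoFly's actual tool or any API.ai interface: the `Parse` type, the intent names, and the 0.7 threshold are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Parse:
    """Hypothetical output of a language-understanding step."""
    intent: str        # e.g. "book_flight"; label names are invented here
    confidence: float  # 0.0 to 1.0, however the parser chooses to report it


# Assumed list of scenarios the bot was designed to handle.
SUPPORTED_INTENTS = {"book_flight", "change_flight", "cancel_flight"}

# Assumed cutoff; a real system would tune this against logged conversations.
CONFIDENCE_THRESHOLD = 0.7


def route(parse: Parse) -> str:
    """Return "bot" if the bot should answer, otherwise "human"."""
    # Requests outside the supported scenarios go straight to a person.
    if parse.intent not in SUPPORTED_INTENTS:
        return "human"
    # Colloquial or ambiguous phrasing tends to show up as low confidence.
    if parse.confidence < CONFIDENCE_THRESHOLD:
        return "human"
    return "bot"
```

Under these assumptions, `route(Parse("book_flight", 0.92))` stays with the bot, while a low-confidence parse or an unsupported request such as `Parse("order_pizza", 0.95)` is handed to a human helper.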

This rush toward chatbots is partly due to the popularity of several new messaging services. But efforts to harness bots have also no doubt been inspired by incredible progress in other areas of artificial intelligence in recent years, such as processing imagery and audio.

But processing language is an altogether different challenge, and one that has bedeviled AI researchers for decades. Chatbots date back to the earliest days of AI. One of the very first, called Eliza, was developed at MIT in 1964. Playing the role of a psychotherapist, Eliza used a simple trick of stringing people along: asking standard questions, and often rephrasing a person’s own statements in the form of a question. But if you stray very far from the formula, Eliza quickly loses the plot.
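The trick described above, matching a statement against a stock pattern and handing it back as a question, fits in a few lines. What follows is a minimal sketch in that spirit, not the original Eliza script: the patterns, pronoun swaps, and fallback line are invented for illustration.

```python
import re

# Illustrative pronoun swaps so "my job" comes back as "your job".
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your",
    "am": "are", "you": "I", "your": "my",
}

# (pattern, response template) pairs, tried in order; made up for this sketch.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Standard prompt used when nothing matches.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words (lowercases the fragment)."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(message: str) -> str:
    """Rephrase the user's statement as a question, or fall back."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK
```

Here `respond("I feel sad about my job.")` returns "Why do you feel sad about your job?", while a request that strays from the formula, such as `respond("Book me a flight to Bangkok")`, gets only the stock "Please go on."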

Today’s chatbots are better, but not by much, and that is hardly surprising: there have been no fundamental breakthroughs in recent years in training computers to process and respond to language (a field known as natural language processing).

Microsoft is a case in point: It has declared that the future of personal computing will be all about bots, even though its own experiments with self-learning chatbots have highlighted some of the remaining challenges (see “Microsoft Says Maverick Chatbot Foreshadows the Future of Computing” and “Why Microsoft Accidentally Unleashed a Nazi Sexbot”).

That said, the techniques that have led to advances in other areas, primarily deep learning, are showing promise for parsing language, says Chris Dyer, an assistant professor at Carnegie Mellon University. “Neural networks have a lot of promise here,” says Dyer. “There is a lot of exciting work coming out every few months on question-answering.”

Some of that work is now feeding into the chatbots that are popping up. Derrick Connell, a corporate vice president at Microsoft who oversees engineering for the company’s search engine, Bing, and its personal assistant, Cortana, says queries entered into Bing are used to train the chatbot algorithms Microsoft is making available to developers. He also says most chatbots will need periodic retraining in order to understand new phrases and concepts, but that they should improve steadily with that. “There will be edge cases, but over time the system will learn and continue to get better,” Connell says.

Plenty of work still needs to be done. Indeed, Facebook’s own virtual helper, called M, is still staffed by humans. The answers these people provide are being used to train deep-learning algorithms that may eventually answer questions themselves.

Retrieving and presenting information from a database in a way that feels natural to a person is particularly difficult to achieve, Dyer says; and understanding a person’s intention is still an unsolved challenge.

Given the likelihood of confusion and misunderstanding, it hardly makes sense to design chatbots to be too general, or to have them do anything particularly important. “Legal advice, medical advice, and psychiatric counseling would probably all be very risky,” says Dyer.
