Machine Vision Algorithm Chooses the Most Creative Paintings in History

Creativity is one of humanity’s uniquely defining qualities. Numerous thinkers have explored the qualities that creativity must have, and most pick out two important factors: whatever the process of creativity produces, it must be novel and it must be influential.

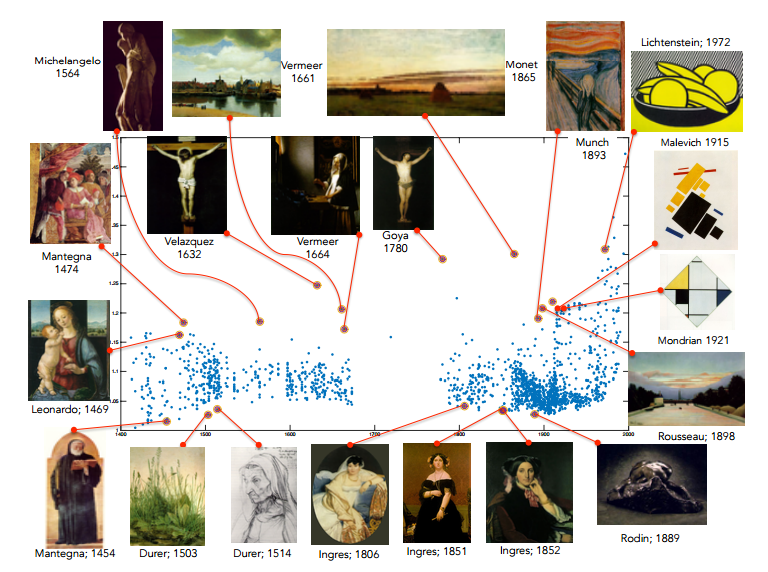

The history of art is filled with good examples in the form of paintings that are unlike any that have appeared before and that have hugely influenced those that follow: Leonardo’s 1469 Madonna and child with a pomegranate, Goya’s 1780 Christ crucified, Monet’s 1865 Haystacks at Chailly at sunrise and so on. Other paintings are more derivative, showing many similarities with those that have gone before, and so are thought of as less creative.

The job of distinguishing the most creative from the others falls to art historians. And it is no easy task. It requires, at the very least, an encyclopedic knowledge of the history of art. The historian must then spot novel features and be able to recognize similar features in future paintings to determine their influence.

Those are tricky tasks for a human and until recently, it would have been unimaginable that a computer could take them on. But today that changes thanks to the work of Ahmed Elgammal and Babak Saleh at Rutgers University in New Jersey, who say they have a machine that can do just this.

They’ve put it to work on a database of some 62,000 pictures of fine art paintings to determine those that are the most creative in history. The results provide a new way to explore the history of art and the role that creativity has played in it.

Several developments have come together to make this advance possible. The first is the rapid progress made in recent years in machine vision, based on a way to classify images by the visual concepts they contain.

These visual concepts are called classemes. They can be low-level features such as color and texture; simple objects such as a house, a church or a haystack; or much higher-level features such as walking or a dead body.

This approach allows a machine vision algorithm to analyze a picture and produce a list of classemes that describe it (up to 2,559 different classemes, in this case). This list is like a vector that defines the picture and can be used to compare it against others analyzed in the same way.
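To make the idea of comparing pictures by their classeme lists concrete, here is a minimal sketch in Python. It assumes each picture has already been reduced to a list of numeric classeme scores and uses cosine similarity as the comparison; the article does not say which measure the researchers use, and the four-element vectors and picture names below are invented for illustration (the real vectors would have up to 2,559 entries).

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # An all-zero vector has no direction, so treat it as dissimilar.
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical classeme scores (not real data): one number per visual concept.
picture_a = [0.9, 0.1, 0.0, 0.8]
picture_b = [0.8, 0.2, 0.1, 0.7]
picture_c = [0.0, 0.9, 0.8, 0.1]

print(cosine_similarity(picture_a, picture_b))  # close to 1: similar concepts
print(cosine_similarity(picture_a, picture_c))  # much lower: different concepts
```

A score near 1 means two pictures contain much the same mix of visual concepts; a score near 0 means they share almost none.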

The second advance that makes this work possible is the advent of huge online databases of art. This is important because machine vision algorithms need big databases to learn their trade. Elgammal and Saleh work with two large databases, one of which, from the Wikiart website, contains images and annotations on some 62,000 works of art from throughout history.

The final component of their work is theoretical. The problem is to work out which paintings are the most novel compared to others that have gone before and then determine how many later paintings use similar features, to work out their influence.

Elgammal and Saleh approach this as a problem of network science. Their idea is to treat the history of art as a network in which each painting links to similar paintings in the future and is linked to by similar paintings from the past.
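A rough sketch of such a network, in Python, might look like the following. It is an illustration under stated assumptions, not the researchers' construction: the paintings, dates, feature vectors, the cosine similarity measure and the `threshold` cut-off are all hypothetical. Each painting is linked to every later painting whose feature vector is similar enough.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_art_network(paintings, threshold=0.8):
    """Link each painting to the later paintings that resemble it.

    paintings: list of (title, year, feature_vector) tuples.
    threshold: assumed similarity cut-off for drawing a link.
    Returns a dict mapping each title to a list of later, similar titles.
    """
    edges = {title: [] for title, _, _ in paintings}
    for title_a, year_a, vec_a in paintings:
        for title_b, year_b, vec_b in paintings:
            # Links only run forward in time, from earlier to later works.
            if year_a < year_b and cosine_similarity(vec_a, vec_b) >= threshold:
                edges[title_a].append(title_b)
    return edges


# Invented toy data: four works with three-element feature vectors.
paintings = [
    ("A", 1500, [1.0, 0.0, 0.0]),
    ("B", 1600, [0.9, 0.1, 0.0]),
    ("C", 1700, [0.0, 1.0, 0.0]),
    ("D", 1800, [0.1, 0.9, 0.1]),
]

network = build_art_network(paintings)
print(network)
```

In this toy data, "C" resembles nothing before it but is echoed by "D", which is the pattern of novelty followed by adoption that the approach looks for.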

The problem of determining the most creative is then one of working out when certain patterns of classemes first appear and how these patterns are adopted in the future. “We show that the problem can reduce to a variant of network centrality problems, which can be solved efficiently,” they say.

In other words, the problem of finding the most creative paintings is similar to the problem of finding the most influential person on a social network, the most important station in a city’s metro system or the super spreaders of a disease. These have become standard problems in network theory in recent years, and now Elgammal and Saleh apply the same techniques to creativity networks for the first time.
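For readers unfamiliar with network centrality, the sketch below shows one standard measure, a PageRank-style power iteration, in plain Python. It is a generic textbook version and not the variant Elgammal and Saleh derive; the toy graph, the node names and the convention that a later work points to the earlier works it resembles (much as a paper cites its sources) are assumptions made for illustration.

```python
def pagerank(graph, damping=0.85, iterations=100):
    """Compute PageRank-style scores by power iteration.

    graph: dict mapping each node to the list of nodes it points to.
           Every target must also appear as a key.
    Returns a dict mapping each node to its score; scores sum to 1.
    """
    nodes = list(graph)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every node receives a small baseline share of the total score.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node, targets in graph.items():
            if targets:
                # Pass this node's score on to the nodes it points to.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A node with no outgoing links spreads its score evenly.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical graph: each later work points to earlier works it resembles.
graph = {
    "early": [],
    "mid1": ["early"],
    "mid2": ["early"],
    "late": ["mid1"],
}

scores = pagerank(graph)
most_central = max(scores, key=scores.get)
print(most_central, scores)
```

Here the "early" work scores highest because two later works point to it, one of which is itself pointed to. That is the sense in which a centrality score can stand in for influence.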

The results of the machine vision algorithm’s analysis are interesting. The figure above shows artworks plotted by date along the bottom axis and by the algorithm’s creativity score on the vertical axis.

Several famous pictures stand out as being particularly novel and influential, such as Goya’s Christ crucified, Monet’s Haystacks at Chailly at sunrise and Munch’s The Scream. Other works of art stand out because they are not deemed creative, such as Rodin’s 1889 sculpture Danaid and Durer’s charcoal drawing of Barbara Durer dating from 1514.

Many art historians would agree. “In most cases the results of the algorithm are pieces of art that art historians indeed highlight as innovative and influential,” say Elgammal and Saleh.

An important point here is that these results are entirely automated. They come about because of the network of links between paintings that the algorithm uncovers. There is no initial seeding that biases the search one way or another.

Of course, art historians will always argue about exactly how to define creativity and how this changes their view of what makes it onto the list of most creative. The beauty of Elgammal and Saleh’s techniques is that small changes to their algorithm allow different definitions of creativity to be explored automatically.

This kind of data mining could have important impacts on the way art historians evaluate paintings. The ability to represent the entire history of art in this way changes the way it is possible to think about art and to discuss it. In a way, this kind of data mining, and the figures that represent it, are new instruments of reason for art historians.

And this approach is not just limited to art. Elgammal and Saleh point out that it can also be used to explore creativity in literature, sculpture, and even in science.

We’ll look forward to seeing how these guys apply it elsewhere.

Ref: arxiv.org/abs/1506.00711 : Quantifying Creativity in Art Networks
