Virtual Eyes Train Deep Learning Algorithm to Recognize Gaze Direction

Eye contact is one of the most powerful forms of nonverbal communication. If avatars and robots are ever to exploit it, computer scientists will need to better monitor, understand, and reproduce this behavior.

But eye tracking is easier said than done. Perhaps the most promising approach is to train a machine-learning algorithm to recognize gaze direction by studying a large database of images of eyes in which the gaze direction is already known.

The problem here is that large databases of this kind do not exist. And they are hard to create: imagine photographing a person looking in a wide range of directions, using all kinds of different camera angles under many different lighting conditions. And then doing it again for another person with a different eye shape and face and so on. Such a project would be vastly time-consuming and expensive.

Today, Erroll Wood at the University of Cambridge in the U.K. and a few pals say they have solved this problem by creating a huge database of just the kind of images of eyes that a machine-learning algorithm requires. That has allowed them to train a machine to recognize gaze direction more accurately than previous methods.

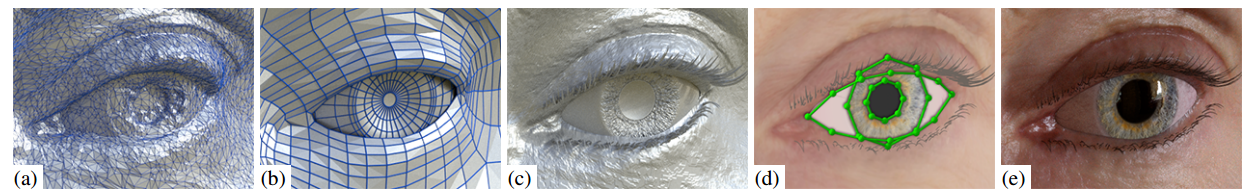

So how have they done this? Their trick is to create the database entirely artificially. They begin by building a highly detailed virtual model of an eye, an eyelid, and the region around it. They then build this model into a variety of faces representing people of different ages, skin colors, and eye types, and photograph them virtually.

Each photograph is defined by four variables: camera position, gaze direction, lighting environment, and eye model. To create the database, Wood and co begin with a particular eye model and lighting environment, with the eyes pointing in a specific direction. They then vary the camera position, taking photographs from a wide range of angles around the head.

Next, they move the eyes to another position and repeat the variations in camera position. And so on.
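The sweep described above amounts to nested loops over the four variables. The sketch below is a hypothetical illustration, not the authors' code. The parameter values are invented, and `render_eye` is a hypothetical stand-in that only records the settings, where a real pipeline would call a 3D renderer.

```python
from itertools import product

# Hypothetical parameter grids; the values are illustrative, not the paper's.
EYE_MODELS = ["model_a", "model_b"]
LIGHTING = ["indoor", "outdoor"]
GAZE_DIRECTIONS = [(-10, 0), (0, 0), (10, 0)]   # (pitch, yaw) in degrees
CAMERA_POSITIONS = [(-20, 0), (0, 0), (20, 0)]  # (pitch, yaw) in degrees


def render_eye(eye_model, lighting, gaze, camera):
    """Hypothetical stand-in for a renderer: returns the label record
    that would accompany the rendered image."""
    return {"eye_model": eye_model, "lighting": lighting,
            "gaze": gaze, "camera": camera}


def build_dataset():
    samples = []
    # Outer loops fix the eye model and lighting. The eyes are pointed in one
    # direction while the camera sweeps around the head, then the gaze moves on.
    for eye_model, lighting in product(EYE_MODELS, LIGHTING):
        for gaze in GAZE_DIRECTIONS:
            for camera in CAMERA_POSITIONS:
                samples.append(render_eye(eye_model, lighting, gaze, camera))
    return samples


if __name__ == "__main__":
    data = build_dataset()
    print(len(data))  # 2 * 2 * 3 * 3 = 36
```

Because every image is generated from known settings, its gaze label comes for free. That is exactly the information that makes a hand-built photo database so expensive to collect.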

The result is a database of more than 11,000 images covering a 40-degree range of camera angles and a 90-degree range of gaze directions. Eye color and environmental lighting were chosen at random for each image.
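The article gives only the total spans (40 degrees of camera angle, 90 degrees of gaze) and says that eye color and lighting were picked at random per image. The sketch below covers those spans with a grid and draws the other two settings at random. It makes several assumptions. The spans are centered on straight ahead (±20° and ±45°), only the horizontal axis is swept, and the step sizes are arbitrary. The eye-color and lighting lists are hypothetical placeholders.

```python
import random

CAMERA_SPAN_DEG = 40.0  # total camera-angle range quoted in the article
GAZE_SPAN_DEG = 90.0    # total gaze range quoted in the article
# Hypothetical placeholders; the paper's actual assets differ.
EYE_COLORS = ["brown", "blue", "green", "grey"]
LIGHTING_ENVS = ["office", "street", "forest", "studio"]


def angle_grid(span_deg, step_deg):
    """Evenly spaced angles covering [-span/2, +span/2] (assumes a centred span)."""
    half = span_deg / 2.0
    n = int(span_deg // step_deg)
    return [-half + i * step_deg for i in range(n + 1)]


def make_records(rng, camera_step=10.0, gaze_step=15.0):
    records = []
    for camera in angle_grid(CAMERA_SPAN_DEG, camera_step):
        for gaze in angle_grid(GAZE_SPAN_DEG, gaze_step):
            records.append({
                "camera_yaw": camera,
                "gaze_yaw": gaze,
                # Per-image random draws, as the article describes.
                "eye_color": rng.choice(EYE_COLORS),
                "lighting": rng.choice(LIGHTING_ENVS),
            })
    return records


if __name__ == "__main__":
    records = make_records(random.Random(0))  # fixed seed for reproducibility
    print(len(records))  # 5 camera angles * 7 gaze angles = 35
```

The fixed seed makes each run reproducible, which is convenient when the same synthetic set must be regenerated later.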

Finally, Wood and co used the data set to train a deep convolutional neural network to recognize gaze direction. They then tested the resulting algorithm on natural images captured in the wild, outside controlled laboratory conditions. “We demonstrated that our method outperforms state-of-the-art methods for cross-data set appearance-based gaze estimation in the wild,” they say.
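The article does not say how accuracy was scored. Appearance-based gaze estimators are commonly evaluated by the mean angle between predicted and true gaze directions. The sketch below computes that metric. It assumes gaze is given as (pitch, yaw) in degrees and uses a coordinate convention chosen for illustration. It says nothing about the network itself.

```python
import math


def gaze_vector(pitch_deg, yaw_deg):
    """Unit 3D gaze vector from pitch/yaw in degrees.
    Assumed convention: z points straight ahead, y up, x sideways."""
    pitch, yaw = math.radians(pitch_deg), math.radians(yaw_deg)
    return (math.cos(pitch) * math.sin(yaw),
            math.sin(pitch),
            math.cos(pitch) * math.cos(yaw))


def angular_error_deg(pred, true):
    """Angle in degrees between a predicted and a true (pitch, yaw) gaze."""
    a, b = gaze_vector(*pred), gaze_vector(*true)
    dot = sum(u * v for u, v in zip(a, b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def mean_angular_error(preds, truths):
    """Average angular error over paired predictions and ground truths."""
    errors = [angular_error_deg(p, t) for p, t in zip(preds, truths)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    print(angular_error_deg((0, 0), (0, 10)))  # approximately 10.0
```

A lower mean angular error on a data set the network never saw during training is what "outperforms" would mean in a cross-data set comparison like the one quoted above.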

That’s interesting work. Deep learning techniques are currently taking the world of computer science by storm, thanks to two advances. The first is a better understanding of neural networks themselves, which has allowed computer scientists to significantly improve them.

The second is the creation of huge annotated data sets that can be used to train these networks. Many of these new data sets have been created using crowdsourcing methods such as Amazon’s Mechanical Turk.

But Wood and co have taken a different approach. Their data set is entirely synthetic, created inside a computer. So it will be interesting to see where else they can apply this synthetic method to create data sets for other types of deep learning.

Ref: arxiv.org/abs/1505.05916 : Rendering of Eyes for Eye-Shape Registration and Gaze Estimation
