Human Face Recognition Found In Neural Network Based On Monkey Brains

When neuroscientists use functional magnetic resonance imaging (fMRI) to see how a monkey’s brain responds to familiar faces, something odd happens. Rather than lighting up in one specific area, the brain lights up in nine different ones.

Neuroscientists call these areas “face patches” and think they are neural networks with the specialised functions associated with face recognition. In recent years, researchers have begun to tease apart what each of these patches does. However, how they all function together is poorly understood.

Today, we get some insight into this problem thanks to the work of Amirhossein Farzmahdi at the Institute for Research on Fundamental Sciences in Tehran, Iran, and a few pals from around the world. These guys have built a number of neural networks, each with the same functions as those found in monkey brains. They’ve then joined them together to see how they work as a whole.

The result is a neural network that can recognise faces accurately. But that’s not all. The network also displays many of the idiosyncratic properties of face recognition in humans and monkeys, for example, the inability to recognise faces easily when they are upside down.

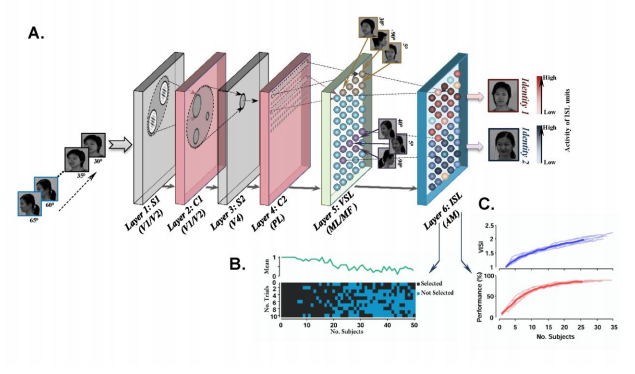

The new neural network consists of six layers, with the first four trained to extract primary features. The first two detect edges, rather like two areas of the visual cortex known as V1 and V2. The next two layers recognise face parts, such as the pattern of eyes, nose and mouth. These layers simulate the behaviour of the brain area known as V4 and of neurons in the anterior inferior temporal (IT) cortex.
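The article doesn't say how the edge-detecting layers are built. A common textbook model of V1 simple cells is a bank of oriented Gabor filters, so the sketch below assumes that. The helper names and parameter values (`gabor_kernel`, `edge_maps`, an 11-by-11 kernel, a 4-pixel wavelength) are illustrative choices, not taken from the paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_kernel(size=11, wavelength=4.0, theta=0.0, sigma=2.5, gamma=0.5):
    """Real-valued Gabor kernel; theta=0 gives vertical stripes (vertical-edge tuning)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinate frame so the carrier wave runs along direction theta
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    # Gaussian envelope, elongated along the stripes when gamma < 1
    envelope = np.exp(-(x_r**2 + (gamma * y_r) ** 2) / (2 * sigma**2))
    carrier = np.cos(2 * np.pi * x_r / wavelength)
    kernel = envelope * carrier
    # Remove the DC component so uniform regions produce zero response
    return kernel - kernel.mean()


def edge_maps(image, n_orientations=4, size=11):
    """Rectified responses of a grayscale image to a bank of oriented Gabor filters.

    Returns an array of shape (n_orientations, H - size + 1, W - size + 1).
    """
    windows = sliding_window_view(image, (size, size))
    maps = []
    for k in range(n_orientations):
        kernel = gabor_kernel(size=size, theta=k * np.pi / n_orientations)
        # Valid-mode cross-correlation: dot each image window with the kernel
        response = np.einsum("ijkl,kl->ij", windows, kernel)
        maps.append(np.abs(response))  # rectify the response
    return np.stack(maps)
```

Feeding in an image containing a single vertical edge, the vertically tuned map (orientation 0) responds far more strongly than the horizontally tuned one, which is the kind of orientation selectivity V1 neurons show.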

The fifth layer is trained to recognise the same face from different angles. Known as the view selective layer, it is inspired by parts of the monkey brain called the middle face patches.

The final layer matches the face to an identity. This is called the identity selective layer and simulates a part of the simian brain known as the anterior face patch.
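The article only says the final layer “matches the face to an identity”. One simple way to picture that readout, assumed here purely for illustration, is nearest-neighbour matching: compare the feature vector produced by the earlier layers with stored vectors for known people and pick the most similar. `identify` is a hypothetical helper; the paper’s identity selective layer may well be a trained classifier instead.

```python
import numpy as np


def identify(query, gallery, labels):
    """Return (label, similarity) of the gallery vector closest to `query`.

    query:   feature vector of shape (d,) for the face to recognise
    gallery: array of shape (n, d), one stored feature vector per known face
    labels:  sequence of n identity names aligned with the gallery rows
    """
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sims = g @ q  # cosine similarity to every stored face
    best = int(np.argmax(sims))
    return labels[best], float(sims[best])
```

Cosine similarity is used so that overall feature magnitude (for example, image contrast) matters less than the pattern of activity across features.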

Farzmahdi and co train the layers in the system using different image databases. For example, one of the datasets contains 740 face images: 37 different views of each of 20 people. Another dataset contains images of 90 people taken from 37 different viewing angles. They also have a number of datasets for evaluating specific properties of the neural net.
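The 740 figure is simply 20 people times 37 views. The sketch below enumerates that grid; the assumption that the 37 views are yaw angles from -90 to +90 degrees in 5-degree steps is ours (it happens to yield exactly 37), since the article gives only the count. `build_index` is a hypothetical helper.

```python
from itertools import product

N_PEOPLE = 20
# Assumption: the 37 views are yaw angles from -90 (one profile) through 0
# (frontal) to +90 (other profile) in 5-degree steps; only the count is given.
VIEW_ANGLES = list(range(-90, 91, 5))


def build_index(n_people, angles):
    """Enumerate one (person_id, angle) entry per image in a people-by-views grid."""
    return list(product(range(n_people), angles))


index = build_index(N_PEOPLE, VIEW_ANGLES)
print(len(VIEW_ANGLES), len(index))  # 37 740
```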

Having trained the neural network, Farzmahdi and co put it through its paces. In particular, they test whether the network demonstrates known human behaviours when recognising faces.

For example, various behavioural studies have shown that humans recognise faces most easily when they are seen from a three-quarter view, that is, halfway between full frontal and profile.

Curiously, Farzmahdi and co say their network behaves in the same way—the optimal viewing angle is the same three-quarter view that humans prefer.

Another curious feature of human face recognition is that it is much harder to recognise faces when they are upside down. And Farzmahdi and co’s neural network shows exactly the same property.
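Testing for the inversion effect means showing the same faces upright and upside down and comparing recognition accuracy. A minimal sketch of that measurement, assuming “upside down” means a 180-degree picture-plane rotation and that the trained network is wrapped in some `classify` function (a hypothetical stand-in, not the authors’ code):

```python
import numpy as np


def invert(image):
    """Picture-plane inversion: rotate the image 180 degrees (flips both axes)."""
    return np.rot90(image, 2)


def inversion_effect(classify, images, labels):
    """Accuracy on upright faces minus accuracy on the same faces upside down.

    `classify` is any callable mapping an image to a predicted identity label.
    A positive value means upside-down faces are harder, as in humans.
    """
    upright = np.mean([classify(img) == lab for img, lab in zip(images, labels)])
    inverted = np.mean([classify(invert(img)) == lab for img, lab in zip(images, labels)])
    return float(upright - inverted)
```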

What’s more, it also demonstrates the “composite face effect”. This occurs when identical top halves of a face are aligned with different bottom halves, in which case humans perceive them as being different people. Neuroscientists say this suggests that face recognition works at the level of whole faces rather than their parts.

Farzmahdi and co say their new neural network behaves in exactly the same way. It considers composite faces as new identities, suggesting that the network must be recognising faces as a whole, just like humans.
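Composite stimuli are straightforward to build from two face images of the same size. The sketch below assumes grayscale NumPy arrays and splits at the vertical midpoint; `make_composite` is a hypothetical helper. The optional sideways shift produces the misaligned control that composite-face experiments commonly use (misalignment weakens the effect in humans), but the article does not say whether the authors used it.

```python
import numpy as np


def make_composite(top_source, bottom_source, shift=0):
    """Join the top half of one face image to the bottom half of another.

    shift=0 gives an aligned composite; shift>0 slides the bottom half right by
    `shift` pixels, filling the gap with zeros, to make a misaligned control.
    """
    if top_source.shape != bottom_source.shape:
        raise ValueError("source images must have the same shape")
    if shift < 0:
        raise ValueError("shift must be non-negative")
    h = top_source.shape[0] // 2
    composite = np.zeros_like(top_source)
    composite[:h] = top_source[:h]
    bottom = bottom_source[h:]
    if shift > 0:
        composite[h:, shift:] = bottom[:, :-shift]
    else:
        composite[h:] = bottom
    return composite
```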

Finally, Farzmahdi and co say that when their neural network is trained using faces of a specific race, it finds it much harder to identify faces of a different race. Once again, that is a phenomenon well known in humans. “People are better at identifying faces of their own race than other races, an effect known as other race effect,” they say.
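One way to quantify this kind of effect is to compute recognition accuracy separately for each group of test faces and compare the group the network was trained on with the rest. The sketch below does that bookkeeping; the helper names and group labels are placeholders, and it is not necessarily how the paper measured the effect.

```python
from collections import defaultdict


def accuracy_by_group(predictions, labels, groups):
    """Recognition accuracy computed separately for each group of test faces."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, true, group in zip(predictions, labels, groups):
        total[group] += 1
        correct[group] += int(pred == true)
    return {g: correct[g] / total[g] for g in total}


def own_group_advantage(acc, trained_group):
    """Accuracy on the training group minus mean accuracy on all other groups."""
    others = [v for g, v in acc.items() if g != trained_group]
    return acc[trained_group] - sum(others) / len(others)
```

A positive advantage corresponds to the pattern the article describes: better recognition of faces from the group the network was trained on.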

That’s interesting work because no other face recognition system appears to have reproduced these biological characteristics. If so, Farzmahdi and co have found a way to reproduce these human and monkey behaviours in an artificial system for the first time. “Our proposed model…explains neural response characteristics of monkey face patches; as well several behavioral phenomena observed in humans,” they say.

The process behind this work is almost as fascinating as the result. These guys have taken certain structures found in monkey brains, built a synthetic system based on those structures and then found that the artificial behaviour matches the biological behaviour.

If that works for vision, then might it also work for hearing, touch, balance, movement and so on? And beyond that there is the potential for capturing the essence of being human, which must somehow be encoded in structures within the brain.

Other suggestions in the comments section please.

Clearly, the fields of synthetic neuroscience and artificial intelligence are changing. And quickly.

Ref: arxiv.org/abs/1502.01241 : A Specialized Face-Processing Network Consistent With The Representational Geometry Of Monkey Face Patches
