Weathering the Storm

For four days in the summer of 2011, the National Weather Service predicted that a huge hurricane called Irene was headed for New York City. Some media reports discussed the possibility of an unprecedented storm surge that could cause massive flooding in Manhattan and major power outages. Mayor Michael Bloomberg called for the first-ever evacuation of parts of New York City. He ordered 370,000 New Yorkers to leave their homes and launched a massive and costly relocation program for patients and residents in hospitals and nursing homes.

Meanwhile, upstate in Yorktown, researchers at IBM were getting results from an experimental forecasting system called Deep Thunder, and it was telling them something very different. Yes, the storm was headed right for New York, but Deep Thunder forecast a much weaker tropical storm that was likely to have little impact on the city apart from subjecting it to heavy rains.

Deep Thunder was right. The inaccurate official forecast for Irene caused a public backlash. Managers at nursing homes and hospitals criticized officials for putting their sick, frail patients at risk by forcing them to move.

A year later, when the much more destructive Hurricane Sandy approached the city, officials were more tentative. They ordered 375,000 people to evacuate, but they told nursing homes and hospitals to discharge the patients they could and to keep the rest in place rather than evacuate them. Despite the order, thousands of people in the evacuation zone refused to leave.

When Sandy did hit, its unprecedented storm surge (a massive rush of water nearly two and a half meters above ground level in parts of Manhattan) forced emergency evacuations at 35 facilities. In the scramble to get patients and nursing home residents to safety, many were shuttled to shelters without their medications or critical medical information, the New York Times reported. According to the report, some family members were still searching for their relatives more than a week after the storm; among those still missing was a 93-year-old woman who was blind and had dementia. Across the city, 43 people died.

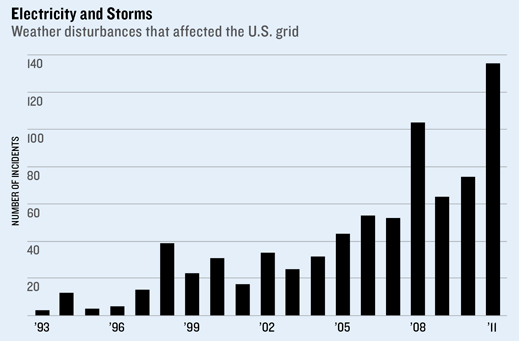

For cities, storm forecasts have high stakes. They will never be perfect, but IBM and the National Weather Service are developing much more advanced computer models that will help cities make better decisions. The need for these systems is increasing. The annual number of weather-related power outages in the U.S. is at least double what it was a couple of decades ago, and some scientists say climate change could make storms worse in the future.

To improve accuracy, IBM is using finer-grained data and more complex physical models, enabled by powerful computers. It’s also extending its models to predict three days ahead, giving city planners more time to begin making decisions about evacuation.

To gauge not just a storm’s force but its potential impact and the best way to prepare for it, IBM and other groups are also starting to couple weather forecasts with more detailed information about geography, infrastructure, and the resources that cities and utilities have for responding to disasters. IBM can use a model of a utility’s power grid, along with historical data about how previous storms have affected the grid, to recommend preparing for specific events such as downed power lines in one area or blown transformers somewhere else, says Lloyd Treinish, who leads the Deep Thunder project.

Finally, IBM creates a third computer model that suggests how utilities can optimize their resources given the predicted impact of the storm. How many workers do they need to have on call? What equipment is needed, and where? Treinish says that the models can take into account the cost of different options and assign different priorities, for example directing workers to restore power to hospitals first while initially ignoring the hard-to-reach single home without power at the end of a cul-de-sac.
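IBM has not published how its resource model works, so the following is only a hypothetical sketch of the kind of trade-off Treinish describes. It swaps in a simple greedy heuristic rather than a true optimizer: each outage gets an assumed priority and an assumed repair cost in crew-hours, and jobs are taken in priority order (cheaper first on ties) until the available crew time runs out. The names `Outage` and `dispatch` and all of the numbers are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Outage:
    name: str
    priority: int       # assumed scale: higher means more urgent (e.g. a hospital)
    crew_hours: float   # assumed repair effort, in crew-hours


def dispatch(outages, crew_hours_available):
    """Hypothetical greedy dispatcher, not IBM's model.

    Takes jobs in descending priority; among equal priorities, the cheaper
    job goes first so more sites get restored. A job is skipped if it no
    longer fits in the remaining crew time. Returns the chosen job names
    and the unused crew-hours.
    """
    plan = []
    remaining = crew_hours_available
    for outage in sorted(outages, key=lambda o: (-o.priority, o.crew_hours)):
        if outage.crew_hours <= remaining:
            plan.append(outage.name)
            remaining -= outage.crew_hours
    return plan, remaining


if __name__ == "__main__":
    outages = [
        Outage("cul-de-sac home", priority=1, crew_hours=5),
        Outage("hospital", priority=10, crew_hours=6),
        Outage("substation feeder", priority=8, crew_hours=4),
    ]
    print(dispatch(outages, crew_hours_available=12))
```

With 12 crew-hours, the hospital and the feeder are restored first and the lone home waits, mirroring the example in the text. A production system would more likely solve this as a formal optimization problem that also weighs equipment and travel costs.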

Deep Thunder is still largely an R&D project. But the company has clients in the U.S., Europe, and Asia, including both governments and utilities, which are already making use of its models. (Aside from a Detroit utility, IBM won’t disclose its clients.)

There is still one big question IBM can’t answer: its models don’t yet predict storm surge, a mounting swell of water created by hurricane winds. Historically, that’s what’s caused most hurricane deaths and certainly much of the damage, says James McConnell, New York City’s assistant commissioner for strategic data in the Office of Emergency Management. But storm surge is more difficult to predict than rain and wind speed.

On this, the National Weather Service is further along. Predicting storm surge first depends on an accurate forecast of a hurricane's strength, direction, and speed, and sometimes of other storms in the area. It then requires a detailed model of the sea floor and of the city's natural and man-made geography.

Jamie Rhome, who leads storm-surge-related efforts at the National Weather Service’s Hurricane Center, makes monthly trips to New Orleans to keep track of the rebuilding still going on in that city after Katrina, the 2005 storm that killed 1,200 people and caused $108 billion in damage. A new construction project can make a big difference in where surge waters end up.

Rhome says some of the most important work being done at the National Hurricane Center focuses on ways to communicate the information in its models. What was provided to city planners for Irene and Sandy was “like a foreign language” to nonscientists, he says. The models' output was all numbers and text. What’s more, it didn’t account for a very important variable: the tides. “We were struggling to convey how far inland the water would go,” he says. Now the Hurricane Center is testing detailed, easy-to-read maps that show exactly where water could go in a worst-case scenario. The hope is that the maps will help people understand the reason for an evacuation in vivid, local terms.
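Why the tides matter can be shown with simple arithmetic. The water level during a storm is roughly the surge plus the normal astronomical tide, and the depth of flooding at a spot is that level minus the ground elevation there. The sketch below is a hypothetical "bathtub" illustration, not the Hurricane Center's model. It assumes all heights are in meters relative to one shared reference level, and the place names and numbers are invented. Real surge models simulate how water actually moves over the sea floor and terrain, which this ignores.

```python
def storm_tide(surge_m, astronomical_tide_m):
    """Water level from storm surge plus the normal tide (same reference level assumed)."""
    return surge_m + astronomical_tide_m


def flood_depths(ground_elevations_m, surge_m, tide_m):
    """Hypothetical bathtub estimate: water depth above ground at each place, 0.0 if dry."""
    level = storm_tide(surge_m, tide_m)
    return {place: max(0.0, level - elev) for place, elev in ground_elevations_m.items()}


if __name__ == "__main__":
    # Invented elevations, in meters above the shared reference level.
    ground = {"waterfront": 1.0, "two blocks inland": 2.5, "hilltop": 6.0}
    print("high tide:", flood_depths(ground, surge_m=2.0, tide_m=1.0))
    print("low tide: ", flood_depths(ground, surge_m=2.0, tide_m=-0.5))
```

The same 2-meter surge floods two blocks inland if it arrives at high tide but leaves that block dry at low tide, which is exactly the kind of difference that numbers-only output struggled to convey.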

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.