Solving the Tongue-Twisting Problems of Speech Animation

Motion capture is a widely used technique to record the movement of the human body. Indeed, the technique has become ubiquitous in areas such as sports science, where it is used to analyze movement and gait, as well as in movie animation and gaming, where it is used to control computer-generated avatars.

As a result, an entire industry exists for analyzing movement in this way, along with cheap, off-the-shelf equipment and software for capturing and handling the data. “There is a huge community of producers and consumers of motion capture, countless databases and stock animation resources, and a rich ecosystem of proprietary and open-source software tools to manipulate them,” say Ingmar Steiner at Saarland University in Germany and a few pals.

One of the features of the motion capture world is that a de facto standard, known as BioVision Hierarchy or BVH, has emerged for encoding body motion data. “BVH has survived the company that created it and is now widely supported, presumably because it is simple and clearly defined, straightforward to implement, and human-readable,” say Steiner and co.
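To show why BVH is considered simple and human-readable, here is a minimal Python sketch. A BVH file has two parts. The HIERARCHY section describes a skeleton of joints, and each joint has an OFFSET from its parent and a list of CHANNELS. The MOTION section gives one line of channel values per frame. The joint names `Head` and `TongueTip` and all the numbers below are made up for illustration. The parser handles only the basic layout shown here, not every BVH variant found in the wild.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# A tiny, made-up BVH file: a Head root with one TongueTip child joint.
SAMPLE = """\
HIERARCHY
ROOT Head
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT TongueTip
  {
    OFFSET 0.0 -2.0 5.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 0.0 1.0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.005
0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 15 0
"""


@dataclass
class Joint:
    name: str
    offset: tuple = (0.0, 0.0, 0.0)
    channels: list[str] = field(default_factory=list)
    children: list[Joint] = field(default_factory=list)


def parse_bvh(text: str):
    """Return (root joint, frame time, list of per-frame channel values)."""
    tokens = text.split()
    pos = 0

    def next_token() -> str:
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        return tok

    def expect(value: str) -> None:
        tok = next_token()
        if tok != value:
            raise ValueError(f"expected {value!r}, got {tok!r}")

    def parse_joint(name: str) -> Joint:
        joint = Joint(name)
        expect("{")
        while True:
            tok = next_token()
            if tok == "OFFSET":
                joint.offset = tuple(float(next_token()) for _ in range(3))
            elif tok == "CHANNELS":
                count = int(next_token())
                joint.channels = [next_token() for _ in range(count)]
            elif tok == "JOINT":
                joint.children.append(parse_joint(next_token()))
            elif tok == "End":
                # "End Site" marks a leaf; its offset is skipped here.
                expect("Site")
                expect("{")
                expect("OFFSET")
                for _ in range(3):
                    next_token()
                expect("}")
            elif tok == "}":
                return joint
            else:
                raise ValueError(f"unexpected token {tok!r}")

    expect("HIERARCHY")
    expect("ROOT")
    root = parse_joint(next_token())
    expect("MOTION")
    expect("Frames:")
    n_frames = int(next_token())
    expect("Frame")
    expect("Time:")
    frame_time = float(next_token())

    # Every frame line holds one value per channel, in hierarchy order.
    n_channels = count_channels(root)
    values = [float(t) for t in tokens[pos:]]
    if len(values) != n_frames * n_channels:
        raise ValueError("motion data does not match the hierarchy")
    frames = [values[i * n_channels:(i + 1) * n_channels] for i in range(n_frames)]
    return root, frame_time, frames


def count_channels(joint: Joint) -> int:
    return len(joint.channels) + sum(count_channels(c) for c in joint.children)


def joint_names(joint: Joint) -> list[str]:
    return [joint.name] + [n for c in joint.children for n in joint_names(c)]
```

Calling `parse_bvh(SAMPLE)` yields a two-joint skeleton, a frame time of 0.005 s and two frames of nine values each. The whole format fits in a few dozen lines of parsing code, and that is a large part of its appeal.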

But while BVH is used to encode data from almost every conceivable form of motion capture, there is one exception—tongue articulation during speech. If you’ve thought that speech animation in gaming characters looks poor, that’s the reason.

This state of affairs has not come about for lack of data, or of the techniques for producing it. On the contrary, researchers have been using real-time MRI and electromagnetic imaging techniques to record tongue articulation for some time now. Indeed, these techniques produce recordings at significantly higher resolution than is normally used in films and gaming.

The problem is that these techniques record the data in a format entirely different from the one conventionally used for motion capture, and nobody has got round to converting it into a more common format such as BVH. That makes speech analysis technically challenging and the data almost impossible to include easily in other media.

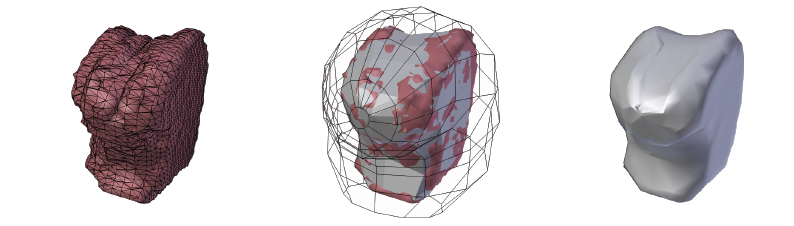

All that changes today, thanks to the work of Steiner and co. These guys have worked out how to convert high-resolution tongue data into BVH format, combining data from several sources at the same time.
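What might such a conversion look like? The sketch below is a hypothetical mapping, not the one Steiner and co use. The article does not describe their method. The sketch assumes the coil trajectories have already been expressed relative to the head. It writes a BVH file with a fixed `Head` root and one child joint per coil, and each coil joint is driven by position channels. Coil names such as `TongueTip` are invented for illustration. Not every BVH importer honors position channels on non-root joints, so a real pipeline might instead drive a rigged tongue skeleton with rotations.

```python
def write_bvh(coil_names, frames, frame_time):
    """Write head-relative coil trajectories as a BVH string (hypothetical layout).

    frames: one entry per time step; each entry is a list of (x, y, z)
    positions, one per coil, in the same order as coil_names.
    """
    lines = [
        "HIERARCHY",
        "ROOT Head",
        "{",
        "  OFFSET 0.0 0.0 0.0",
        "  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation",
    ]
    # One child joint per coil, positioned entirely by its channels.
    for name in coil_names:
        lines += [
            f"  JOINT {name}",
            "  {",
            "    OFFSET 0.0 0.0 0.0",
            "    CHANNELS 3 Xposition Yposition Zposition",
            "    End Site",
            "    {",
            "      OFFSET 0.0 0.0 0.0",
            "    }",
            "  }",
        ]
    lines += ["}", "MOTION", f"Frames: {len(frames)}", f"Frame Time: {frame_time:.6f}"]

    for frame in frames:
        if len(frame) != len(coil_names):
            raise ValueError("each frame needs one position per coil")
        # The head root stays fixed because the data are assumed head-corrected.
        values = [0.0] * 6
        for x, y, z in frame:
            values += [x, y, z]
        lines.append(" ".join(f"{v:.4f}" for v in values))
    return "\n".join(lines) + "\n"
```

The output is plain text that any BVH-aware tool can open. It also shows the kind of small footprint the article refers to: a frame is just one line of numbers.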

They have demonstrated the new approach on a standard database of existing tongue articulation recordings. These include real-time MRI, dental scans and electromagnetic articulography. Articulography captures movement by attaching small conducting coils to different parts of the tongue, and to the skull for reference. It then measures how the coils interact with an electromagnetic field as they move.
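The reference coils on the skull matter because the speaker's head moves during a recording, and tongue movement is usually wanted relative to the head. One standard way to handle this assumes three non-collinear reference coils. In every frame, the sketch below builds a head-fixed coordinate frame from those coils and expresses each tongue coil in that frame. The article does not say how Steiner and co handle this step, so treat this as an illustration of the general idea rather than their method. The function names are hypothetical.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(dot(a, a))
    if n == 0.0:
        raise ValueError("reference coils are coincident or collinear")
    return (a[0] / n, a[1] / n, a[2] / n)


def head_frame(ref_a, ref_b, ref_c):
    """Orthonormal head frame: origin at ref_a, x toward ref_b, z normal to the coil plane."""
    x = normalize(sub(ref_b, ref_a))
    z = normalize(cross(x, sub(ref_c, ref_a)))
    y = cross(z, x)  # already unit length, since z and x are orthonormal
    return ref_a, (x, y, z)


def to_head_coords(point, ref_a, ref_b, ref_c):
    """Express one tongue-coil position in the head-fixed frame of a single time step."""
    origin, axes = head_frame(ref_a, ref_b, ref_c)
    d = sub(point, origin)
    return tuple(dot(d, axis) for axis in axes)
```

The frame is rebuilt from the reference coils at every time step. A rigid movement of the whole head therefore leaves the tongue coordinates unchanged, and that is the property the reference coils are there to provide.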

The result is animation generated from high-resolution data that more or less any motion capture software can handle. Crucially, its footprint is small enough for use in real-time visualization and control.

Steiner and co are quick to point out the limitations of their approach. “This technique is by no means intended to provide an accurate model of tongue shapes or movements, as previous work using biomechanical models does,” they say. “Rather, the advantage here is the lightweight implementation … where realistic animation is more important than matching the true shape of the tongue.”

That will be useful for the film and gaming industries, where good speech animation is conspicuous by its absence. Look out for better speech animation in virtual characters in the not-too-distant future.

Ref: arxiv.org/abs/1310.8585: Speech Animation Using Electromagnetic Articulography As Motion Capture Data
