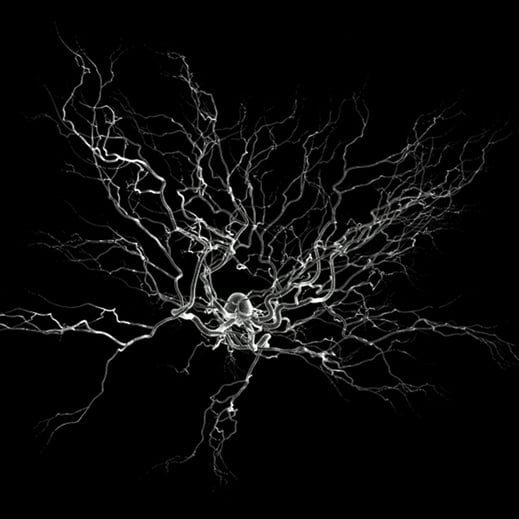

Over the past few decades, neuroscientists have made great progress in mapping the brain by deciphering the functions of individual neurons that perform very specific tasks, such as recognizing the location or color of an object.

But many neurons, especially in brain regions that perform sophisticated functions such as thinking and planning, don’t fit into this pattern. Instead of responding exclusively to one stimulus or task, these neurons react in different ways to a wide variety of things.

MIT neuroscientist Earl Miller first noticed these unusual activity patterns about 20 years ago, while recording the electrical activity of neurons in animals trained to perform complex tasks. During one task, such neurons might distinguish between colors, but under different conditions, they might issue a motor command.

At the time, Miller and colleagues proposed that this type of neuronal flexibility is key to cognitive flexibility, which gives the brain its ability to learn so many new things on the fly. At first, that theory encountered resistance “because it runs against the traditional idea that you can figure out the clockwork of the brain by figuring out the one thing each neuron does,” Miller says.

In a recent paper published in Nature, Miller and colleagues at Columbia University described a computer model they developed to determine more precisely what role these flexible neurons play in cognition. They found that the cells are critical to the human brain’s ability to learn a large number of complex tasks.

Columbia professor Stefano Fusi created the model using experimental data gathered by Miller and his former grad student Melissa Warden, PhD ’06. The data came from electrical recordings of brain cells in monkeys trained to look at a sequence of two pictures and remember both the pictures and the order in which they appeared.

The computer model revealed that flexible neurons are critical to performing this kind of complex task, and that they also greatly expand the capacity to learn many different things. In the model, neural networks without these flexible neurons could learn about 100 tasks before running out of capacity. That capacity expanded to tens of millions of tasks as flexible neurons were added. Once flexible neurons made up about 30 percent of the total, the network’s capacity became “virtually unlimited,” just like that of a human brain, Miller says.
