Why IBM Made a Liquid Transistor

Researchers at IBM last week unveiled an experimental new way to store information or control the switching of an electronic circuit.

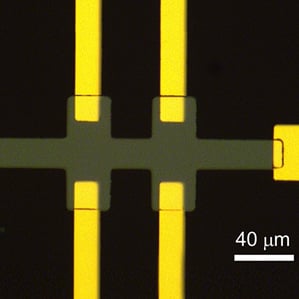

The researchers showed that applying a voltage across electrolyte-filled nanochannels pushes a layer of ions (charged atoms) against an oxide material. The process is reversible and switches the material between a conducting and a nonconducting state, so the device can act as a switch or store a bit, the basic “1” or “0” of digital information.

Although it’s at a very early stage, the method could someday allow for very energy-efficient computing, says Stuart Parkin, the IBM Research Fellow behind the work at the company’s Almaden Research Lab in San Jose, California. “Unlike today’s transistors, the devices can be switched ‘on’ and ‘off’ permanently without the need for any power to maintain these states,” he says. “This could be used to create highly energy-efficient memory and logic devices of the future.”

Even a small prototype circuit based on the idea is two to four years off, Parkin says. But ultimately, “we want to build devices, architecturally, which are quite different from silicon-based devices. Here, memory and logic are fully integrated,” he says.

Such a device could be reconfigurable, with individual elements strung together to create wires and circuits that could be reprogrammed. That’s in contrast to traditional chips, which are connected by copper wires that can’t be changed.

One problem is that, because the switching relies on a chemical reaction, the early demonstration is slow: its switching times are perhaps one or two orders of magnitude slower than those of existing technologies. However, shrinking the devices and packing them closely together will increase the effective speed, Parkin says. “Having a lot of these devices operating in parallel and using very little energy can create powerful computing devices,” he says.

Computing devices that are slow and based on chemistry have a long way to go to compete, says Douglas Natelson, a physicist at Rice University who investigates nanoscale phenomena and technologies. “This is a really nice piece of science but you have to wait and see how much impact it’s going to have on computing,” he says. “Silicon has a lot of legs left, I think.”

Parkin has previously been behind other experimental advances, including a technology known as racetrack memory, in which information is represented by magnetic stripes on nanoscale wires deposited on silicon (see “IBM Makes Revolutionary Racetrack Memory Using Existing Tools”). And he’s worked on technologies that leverage not only the charge of electrons, but their up-or-down “spin” (see “A New Spin on Silicon Chips”).

But the idea of using fluids to drive computing processes is in some ways even more radical, and presents obvious challenges. Eventual technologies built on such science, Parkin says, would leverage engineering advances made in the field of nanofluidics, the use and manipulation of fluid at tiny scales inside etched channels on silicon or glass wafers (see “Nanofluidics Get More Complex”).
