The Statistical Puzzle Over How Much Biomedical Research is Wrong

The claim that most biomedical research is wrong is being challenged by a new result suggesting that the real number is just 14 per cent

One of the most controversial ideas in modern science is that most biomedical research is wrong. The argument was first put forward by John Ioannidis at the University of Ioannina in Greece in 2005.

He argues that most biomedical studies have a low chance of success even before they begin. That’s because of the way they are conceived and designed, with factors such as small sample sizes, various biases and so on, all playing a part. So researchers end up testing far more false hypotheses than true ones, he says.

The standard statistical measure of hypothesis testing is called the p-value. It gives the probability of getting the observed measurement even if the hypothesis under investigation is false.

By convention, researchers agree that a result is significant if the p-value is less than 0.05. This implies that, all other things being equal, false hypotheses will appear significant 5 per cent of the time.

At the same time, true hypotheses ought to appear significant at a much higher rate. Many studies are designed so that this rate is about 0.8 or 80 per cent.

But here’s the important bit: Ioannidis argues that if researchers test many more false hypotheses than true ones, it’s inevitable that most significant results will be wrong. That’s because 5 per cent of a very large number is always going to be bigger than 80 per cent of a very small one.

Needless to say, many researchers are hugely upset by this argument, even though it seems entirely plausible.

Today, they may have some ammunition to fight back thanks to the work of Leah Jager at the United States Naval Academy in Annapolis and Jeffrey Leek at Johns Hopkins Bloomberg School of Public Health in Baltimore.

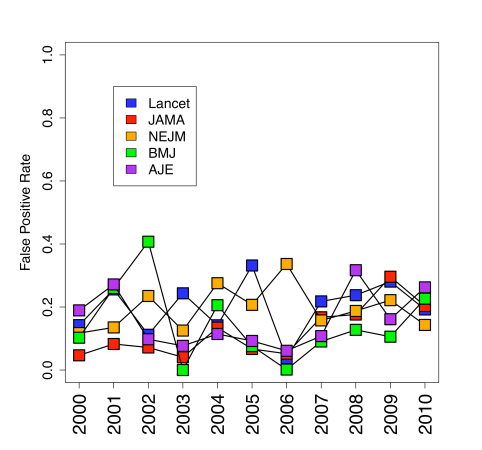

These guys have collected the p-values reported in the abstracts of 77,430 papers published between 2000 and 2010 in the world’s leading medical journals, such as The Lancet and The New England Journal of Medicine. These p-values are all less than 0.05, as required by convention for significant results.

Jager and Leek then create a mathematical model that estimates the number of false positives that are likely to be included in these results. This model cannot be solved directly to give the actual number of false positives. However, statisticians have developed an approach called the expectation-maximum algorithm that can find the most likely value for statistical properties like this.

This algorithm works by assuming a random number for the unknown parameters, calculating an answer and then using this to better estimate the parameters in question. It then repeats this process iteratively until it gets the most likely value for the parameters.

Jager and Leek say that using this method, they reckon the best estimate for the number of false positives is 14 per cent. That’s more than double the expected rate given the nature of the 0.05 p-value limit but it is significantly better than the Ioannidis claim.

Rather than most biomedical research being wrong, the new result implies that most of these results are actually correct.

That will come as a relief for many researchers but it is unlikely to settle the debate. Not by any means. The first question is whether the statistical model that Jager and Leek use and the algorithm behind it, are valid.

The second question arises because the new result flies in the face of other analyses. Last year, Amgen reported in Nature that its oncology and haematology researchers had failed to replicate 47 out of 53 highly promising results. And in 2011, the German drugs giant Bayer reported that it could not replicate about two thirds of published studies identifying drug targets.

That most biomedical research cannot be reproduced is increasingly seen by many pharmaceutical companies as a costly commercial reality of science.

That’s unlikely to change on the basis of Jager and Leek’s work. The question remains of why so much research cannot be reproduced later. It is one that clearly needs to be better understood.

Ref: arxiv.org/abs/1301.3718: Empirical Estimates Suggest Most Published Medical Research is True

Keep Reading

Most Popular

Large language models can do jaw-dropping things. But nobody knows exactly why.

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

The problem with plug-in hybrids? Their drivers.

Plug-in hybrids are often sold as a transition to EVs, but new data from Europe shows we’re still underestimating the emissions they produce.

Google DeepMind’s new generative model makes Super Mario–like games from scratch

Genie learns how to control games by watching hours and hours of video. It could help train next-gen robots too.

How scientists traced a mysterious covid case back to six toilets

When wastewater surveillance turns into a hunt for a single infected individual, the ethics get tricky.

Stay connected

Get the latest updates from

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.