How Your Retina Screen is Helping Make Supercomputers More Powerful than Ever

Earlier this week, the “Titan” supercomputer at Oak Ridge National Laboratory in Tennessee was named the fastest supercomputer on earth. It was able to perform nearly 18 quadrillion floating-point calculations per second in the LINPACK benchmark (the high-performance computing industry’s standard “speedometer”) by accelerating its CPUs with graphics processing units (GPUs) from NVIDIA, for a total of 560,640 processor cores. Intriguingly, this same “Kepler” GPU architecture also provides the graphics horsepower for the Retina screen on Apple’s new MacBook Pro. This isn’t a coincidence. Without ordinary consumers’ relentless desire for next-generation user experiences (sharper screens for their laptops, better graphics for their games, longer-lasting batteries for their mobile devices), scientific supercomputing would be kind of screwed.

“It takes about a billion dollars to develop a high-performance processor,” says Steve Scott, Tesla CTO at NVIDIA. “The supercomputing market isn’t big enough to support the development of this hardware. Fortunately, NVIDIA is supported by millions and millions of gamers that want ever-faster processing power for their gaming. We can take the same processors that are designed to run graphics for video games and use them to perform the calculations necessary to simulate the climate or design more fuel-efficient engines.”

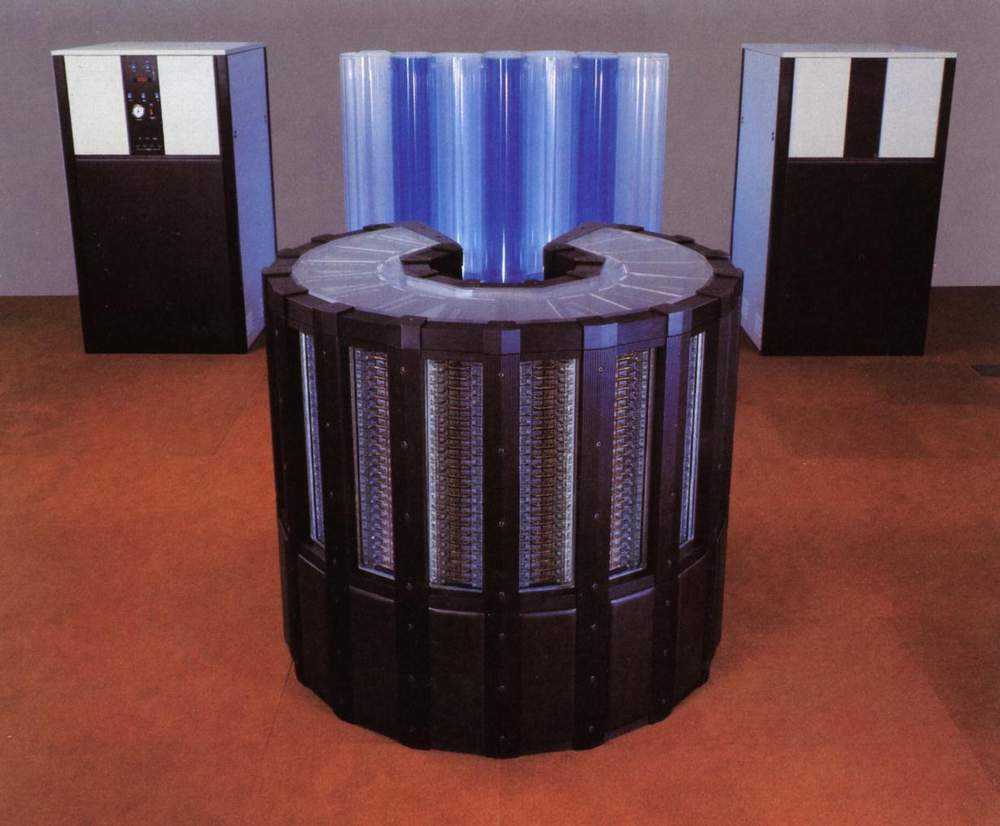

GPUs have been used to accelerate supercomputers for years. Before that, commercial off-the-shelf (COTS) chips revolutionized supercomputing by replacing the expensive custom CPUs that had made firms like Cray famous. In most areas of science, innovation trickles down from governments and academia to industry and consumer applications. But as high-performance computation rises to join theory and experimentation as a “third pillar” of scientific discovery, the reverse is true. Legions of Skyrim-obsessed gamers and iPad-toting moms are the engine that drives technical innovation upward to scientists and researchers.

And what motivates all those gamers and moms to keep obsessing and desiring and upgrading and buying, year after year? User experience. Apple doesn’t develop Retina screens (and NVIDIA doesn’t create the GPUs to power them) “just because.” When Apple billed its Power Mac G4 as the first “desktop supercomputer” (because it could perform more than a billion floating-point calculations per second) more than a decade ago, it wasn’t because the company wanted computational scientists to high-five it. It was because a gigaflop was enough computing power to ensure (at the time) a seamless, on-demand user experience for ordinary consumers.

The next big milestone for supercomputing is the so-called exascale, where computers can execute 10¹⁸ calculations per second. That is a thousand times more powerful than today’s petascale machines like Titan, and enough (according to some researchers) to make magical feats of simulation possible, such as screening potential drug designs against every known class of protein so that any possible side effect can be predicted in advance.
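For a sense of scale, here is a quick back-of-the-envelope sketch in Python. It assumes Titan’s LINPACK result was roughly 17.59 petaflops (the “nearly 18 quadrillion” above); the helper `speedup_needed` is a hypothetical name used only for illustration.

```python
# Scale arithmetic for the exascale milestone (illustrative only).
PETA = 10**15  # petascale: 10^15 floating-point operations per second
EXA = 10**18   # exascale: 10^18 floating-point operations per second

# Assumed, approximate input: Titan's LINPACK result of roughly 17.59 petaflops.
titan_flops = 17.59e15

def speedup_needed(current_flops: float, target_flops: float = EXA) -> float:
    """Factor by which a machine must speed up to reach target_flops."""
    return target_flops / current_flops

print(EXA // PETA)                            # 1000: exa is a thousand times peta
print(f"{speedup_needed(titan_flops):.0f}x")  # Titan's gap to exascale, roughly 57x
```

So “a thousand times” describes the jump from the petascale class as a whole; Titan itself, already well past one petaflop, would need to get roughly 57 times faster.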

But the barrier to reaching the exascale isn’t processing power; it’s energy usage. And so once again, ordinary user experience may be what takes us to the next generation of supercomputing, as scientists and engineers experiment with ultra-low-power mobile chip architectures such as ARM to wring ever more FLOPS per watt out of their machines. After all, the main reason the chip in your phone is so much more energy-efficient than the one in your desktop is to ensure that your battery lasts more than 15 minutes. (And to make sure the phone doesn’t burn through your pants. That would be a pretty terrible user experience.)
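To make the energy argument concrete, here is a rough Python sketch of the FLOPS-per-watt arithmetic. It assumes Titan drew roughly 8.2 MW while delivering about 17.59 petaflops, and it uses a hypothetical 20 MW power budget for an exascale machine; these are approximate, illustrative inputs, and the function names are made up for this example.

```python
# Energy arithmetic behind the exascale "power wall".
# All machine figures below are approximate, assumed inputs for illustration.

EXA = 1e18  # 10^18 floating-point operations per second

def flops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency: sustained FLOPS delivered per watt of power drawn."""
    return flops / watts

def power_needed(target_flops: float, efficiency: float) -> float:
    """Watts required to sustain target_flops at a given FLOPS-per-watt efficiency."""
    return target_flops / efficiency

# Assumed: Titan's ~17.59 PFLOPS LINPACK result at roughly 8.2 MW.
titan_eff = flops_per_watt(17.59e15, 8.2e6)         # about 2.1 GFLOPS per watt

# Scaling Titan's efficiency straight up to an exaflop.
exa_power_mw = power_needed(EXA, titan_eff) / 1e6   # roughly 466 MW

# Hypothetical power budget for an exascale machine.
budget_watts = 20e6
required_eff = EXA / budget_watts                   # 50 GFLOPS per watt
improvement = required_eff / titan_eff              # roughly 23x Titan's efficiency

print(f"Titan: {titan_eff / 1e9:.2f} GFLOPS/W")
print(f"Exaflop at Titan efficiency: {exa_power_mw:.0f} MW")
print(f"Needed at 20 MW: {required_eff / 1e9:.0f} GFLOPS/W ({improvement:.0f}x Titan)")
```

Under these assumptions, simply building a bigger Titan would demand hundreds of megawatts, which is why efficiency per watt, not raw speed, becomes the design target.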

Supercomputers themselves offer a ruthlessly primitive user experience: researchers often code their applications themselves in Fortran and interact with them through the command line. As they should: none of those petaflops should be “wasted” on user-interface frivolities that the rest of us take for granted. But it’s those very frivolities, and our voracious desire for faster, stronger, better, prettier ones every year, that make supercomputing possible.

