Shoveling Water

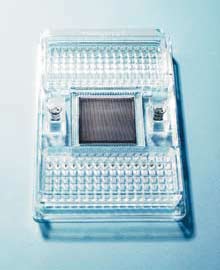

The new microfluidic chip fabricated by Fluidigm, a startup based in South San Francisco, represents a decade of successive inventions. This small square of spongy polymer, the same type used in contact lenses and window caulking, holds a complex network of microscopic channels, pumps, and valves. Minute volumes of liquid from, say, a blood sample can flow through the maze of channels, where the valves and pumps segregate them into nearly 10,000 tiny chambers. In each chamber, nanoliters (billionths of a liter) of the liquid can be analyzed.

The ability to move fluids around a chip on a microscopic scale is one of the most impressive achievements of biochemistry over the last 10 years. Microfluidic chips, which are now produced by a handful of startup companies and a similar number of university-based foundries, allow biologists and chemists to manipulate tiny amounts of fluid in a precise and highly automated way. The potential applications are numerous, including handheld devices to detect various diseases and machines that can rapidly analyze the contents of large numbers of individual cells (each holding about one picoliter of liquid) to identify, for example, rare and deadly cancerous mutations. But microfluidics also represents a fundamental breakthrough in how researchers can interact with the biological world. “Life is water flowing through pipes,” says George Whitesides, a chemist at Harvard University who has invented much of the technology used in microfluidics. “If we’re interested in life, we must be interested in fluids on small scales.”

By way of explaining the importance of the technology and the complexity of its microscopic apparatus, those involved in microfluidics often make comparisons to microprocessors and integrated circuits. Indeed, a microfluidic chip and an electronic microprocessor have similar architectures, with valves replacing transistors and channels replacing wires. But manipulating liquids through channels is far more difficult than routing electrons around an integrated circuit. Fluids are, well, messy. They can be hard to move around, they often consist of a complex stew of ingredients, and they can stick and leak.

Over the last decade, researchers have overcome many such challenges. But if microfluidics is ever to become truly comparable to microelectronics, it will need to overcome a far more daunting challenge: the transition from promising laboratory tool to widely used commercial technology. Can it be turned into products that scientists, medical technicians, and physicians will want to use? Biologists are increasingly interested in using microfluidic systems, Whitesides says. But, he adds, “do you go into the lab and find these devices everywhere? The answer is no. What’s interesting is that it hasn’t really taken off. The question is, why not?”

Things Reviewed

Biomark 96.96 Dynamic Array

Fluidigm

The Nature of Technology: What It Is and How It Evolves

By W. Brian Arthur

Free Press, 2009

A similar question could just as well be asked about at least two other important technologies that have emerged over the last decade: genomic-based medicine and nanotechnology. Each began this century with significant breakthroughs and much fanfare. The sequencing of the human genome was first announced in early 2001; the National Nanotechnology Initiative, which helped launch much of today’s nanotech research, got its first federal funding in 2000. While all three technologies have produced a smattering of new products, none has had the transformative effects many experts expected. Why does it take so long for technologies as obviously important and valuable as these to make an impact? How do you create popular products out of radically new technologies? And how do you attract potential users?

Patience, Patience

Despite the economic, social, and scientific importance of technology, the process of creating it is poorly understood. In particular, researchers have largely overlooked the question of how technologies develop over time. That’s the starting point of W. Brian Arthur’s The Nature of Technology, an attempt to develop a comprehensive theory of “what technology is and how it evolves.” Arthur set to work in the library stacks at Stanford University. “As I began to read, I was astonished that some of the key questions had not been very deeply thought about,” he recalled in a recent interview. While much has been written on the sociology of technology and engineering, and there’s plenty on the histories of various technologies, he said, “there were big gaps in the literature. How does technology actually evolve? How do you define technology?”

A patent map created by IPVision, based in Cambridge, MA, shows many of the key inventions by Stephen Quake and Fluidigm over the last decade that make the company’s microfluidic chips possible. The timeline shows several key early advances and how today’s microfluidic chips draw on progress in both microfabrication and biochemistry. Such a complex network of inventions is not uncommon in the development of new bodies of technology.

Credit: IPVision

Arthur hopes to do for technology what Thomas Kuhn famously did for science in his 1962 book The Structure of Scientific Revolutions, which described how scientific breakthroughs come about and how they are adopted. A key part of Arthur’s argument is that technology has its own characteristics and “nature,” and that it has too long been treated as subservient to science or simply as “applied science.” Science and technology are “completely interwoven” but different, he says: “Science is about understanding phenomena, whereas technology is really about harnessing and using phenomena. They build out of each other.”

Arthur, a former professor of economics and population studies at Stanford who is now an external professor at the Santa Fe Institute and a visiting researcher at the Palo Alto Research Center, is perhaps best known for his work on complexity theory and for his analysis of increasing returns, which helped explain how one company comes to dominate the market for a new technology. Whether he fulfills his goal of formulating a rigorous theory of technology is debatable. The book does, however, offer a detailed description of the characteristics of technologies, peppered with interesting historical tidbits. And it provides a context in which to begin understanding the often laborious and lengthy processes by which technologies are commercially exploited.

Particularly valuable are Arthur’s insights into how different “domains” of technology evolve differently from individual technologies. Domains, as Arthur defines them, are groups of technologies that fit together because they harness a common phenomenon. Electronics is a domain; its devices (capacitors, inductors, transistors) all work with electrons and thus naturally fit together. Likewise, in photonics, lasers, fiber-optic cables, and optical switches all manipulate light. Whereas an individual technology, say the jet engine, is designed for a particular purpose, a domain is “a toolbox of useful components,” or “a constellation of technologies,” that can be applied across many industries. A technology is invented, Arthur writes. A domain “emerges piece by piece from its individual parts.”

The distinction is critical, he argues, because users may quickly adopt an individual technology to replace existing devices, whereas new domains are “encountered” by potential users who must try to understand them, figure out how to use them, determine whether they are worthwhile, and create applications for them. Meanwhile, those developing the new domains must improve the tools in the toolbox and invent the “missing pieces” necessary for new applications. All this “normally takes decades,” Arthur says. “It is a very, very slow process.”

What Arthur touches on just briefly is that this evolution of a new body of technology is often matched by an even more familiar progression: enthusiasm about a new technology, investor and user disillusionment as the technology fails to live up to the hyperbole, and a slow reëmergence as the technology matures and begins to meet the market’s needs.

A Solution Looking for Problems

In the late 1990s, microfluidics (or, as it is sometimes called, “lab on a chip” technology) became another overhyped advance in an era notorious for them. Advocates talked up the potential of the chips. But the devices couldn’t perform the complex fluid manipulations required for many applications. “They were touted as a replacement for everything. That clearly didn’t pan out too well,” says Michael Hunkapiller, a venture capitalist at Alloy Ventures in Palo Alto, CA, who is now investing in several microfluidics startups, including Fluidigm. The technology’s capabilities in the 1990s, he says, “were far less universal than the hype.”

The problem, as Arthur might put it, was that the toolbox was missing key pieces. Prominent among the needed components were valves, which would allow the flow of liquids to be turned on and off at specific spots on the chip. Without valves, you merely have a hose; with valves you can build pumps and begin to think of ways to construct plumbing. The problem was solved in the lab of Stephen Quake, then a professor of applied physics at Caltech and now in the bioengineering department at Stanford. Quake and his Caltech coworkers found a simple way to make valves in microfluidic channels on a polymer slab. Within two years of publishing a paper on the valves, the group had learned how to create a microfluidic chip with thousands of valves and hundreds of reaction chambers. It was the first such chip worthy of being compared to an integrated circuit. The technology was licensed to Fluidigm, which Quake cofounded in 1999.

Meanwhile, other academic labs invented other increasingly complex ways to manipulate liquids in microfluidic devices. The result is a new generation of companies equipped with far more capable technologies. Still, many potential users remain skeptical. Once again, microfluidics finds itself in a familiar phase of technology development. As David Weitz, a physics professor at Harvard and cofounder of several microfluidics companies, explains: “It is a wonderful solution still looking for the best problems.”

There are plenty of possibilities. Biomedical researchers have begun to use microfluidics to look at how individual cells express genes. In one experiment, cancer researchers are using one of Fluidigm’s chips to analyze prostate tumor cells, seeking patterns that would help them select the drugs that will most effectively combat the tumor. Also, Fluidigm has recently introduced a chip designed to grow stem cells in a precisely controlled microenvironment. Currently, when stem cells are grown in the lab, it can be difficult to mimic the chemical conditions in a living animal. But tiny groups of stem cells could be partitioned in sections of a microfluidic chip and bathed in combinations of biochemicals, allowing scientists to optimize their growing conditions.

And microfluidics could make possible cheap and portable diagnostic devices for use in doctors’ offices or even remote clinics. In theory, a sample of, say, blood could be dropped on a microfluidic chip, which would perform the necessary bioassay: identifying a virus, detecting telltale cancer proteins, or finding biochemical signs of a heart attack. But in medical diagnostics as in biomedical research, microfluidics has yet to be widely adopted.

Again, Arthur’s analysis offers an explanation. Users who encounter the new tools must determine whether they are worthwhile. In the case of many diagnostic applications, biologists must better understand which biochemicals to detect in order to develop tests. Meanwhile, those developing microfluidic devices must make the devices easier to use. As Arthur reminds us, the science and technology must build on each other, and technologists must invent the missing pieces that users want; it is a slow, painstaking evolution.

It’s often hard to predict what those missing pieces will be. Hunkapiller recalls the commercialization history of the automated DNA sequencer, a machine that he and his colleagues invented at Caltech and that was commercialized in 1986 at Applied Biosystems. (The machine helped make possible the Human Genome Project.) “Sometimes, it is a strange thing that makes a technology take off,” he says. Automated sequencing didn’t become popular until around 1991 or 1992, he says, when the company introduced a sample preparation kit. Though it wasn’t a particularly impressive technical advance, and certainly not on the level of the automated sequencer itself, the kit had an enormous impact because it made the machines easier to use and led to more reliable results. Suddenly, he recalls, sales boomed: “It wasn’t a big deal to pay $100,000 for a machine anymore.”

In a recent interview, Whitesides demonstrated a microfluidic chip made out of paper in which liquids are wicked through channels to tiny chambers where test reactions are carried out. Then he pulled a new smart phone, still in its plastic wrapping, out of its box. What if, he mused, you could somehow use the phone’s camera to capture the microchip’s data and use its computational power to process the results, instead of relying on bulky dedicated readers? A simple readout on the phone could give the user the information he or she needs. But before that happens, he acknowledged, various other advances will be needed. Indeed, as if reminded of the difficult job ahead, Whitesides quickly slipped the smart phone back into the box.

David Rotman is Editor of Technology Review.
