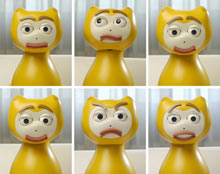

An Emotional Cat Robot

Scientists in the Netherlands are endowing a robotic cat with a set of logical rules for emotions. They believe that by introducing emotional variables into the decision-making process, they should be able to create more natural interactions between humans and computers.

“We don’t really believe that computers can have emotions, but we see that emotions have a certain function in human practical reasoning,” says Mehdi Dastani, an artificial-intelligence researcher at Utrecht University, in the Netherlands. By giving intelligent agents similar emotions, researchers hope that robots can then emulate this humanlike reasoning, he says.

The hardware for the robot, called iCAT, was developed by the Dutch electronics company Philips as a generic platform for companion robots. Because the robot can form facial expressions using its eyebrows, eyelids, mouth, and head position, the researchers aim to let it show, for example, when it is confused while interacting with its human user. The long-term goal is to use Dastani’s emotional-logic software to assist human-robot interaction, but for now, the researchers intend to use the iCAT to display its internal emotional states as it makes decisions.

In addition to improving interactions, this emotional logic should also help intelligent agents carry out noninteractive tasks. For instance, it should reduce the computational workload of the complex decision making involved in planning tasks.

Developed with John-Jules Meyer and Bas Steunebrink, also at Utrecht, the logical functions consist of a series of rules that define 22 emotions, such as anger, hope, gratification, fear, and joy. Rather than being based on notions of feelings, however, these emotions are defined in terms of a goal the robot needs to achieve and the plan by which it aims to achieve it.

When a robot carries out a task such as navigation, it can usually take one of two approaches: it can calculate a fixed plan in advance, based on its starting point and the position of the goal, and then execute it, or it can continually replan its route as it goes. The first method is fairly primitive and often produces the familiar scene of a robot bashing itself against an unforeseen obstacle, unable to get around it. The second approach is more robust, particularly in unpredictable, complex environments, but it is usually very computationally demanding because the robot must continually search for the best route among a vast number of possible paths.

Emotional logic can help get the best of both worlds by requiring the robot to replan its route only when its emotional states dictate. For example, in this sort of navigational task, “hope” would be defined in terms of the system believing (based on sensory data) that by carrying out Plan A to achieve Goal B, Goal B will be achieved. Conversely, “fear” occurs when the system hopes to achieve Goal B by Plan A, but it believes that Goal B won’t be achieved after performing Plan A. Using this sort of definition, “fear” can help the robot recognize when it’s time to try a new tack. “This changes its beliefs because the rest of the plan will not make its goal reachable,” says Dastani.
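The hope and fear definitions above can be read, very loosely, as two predicates over an agent's goals, plans, and beliefs. The sketch below is a simplified propositional reading, not the Utrecht group's formal logic: it assumes that "hoping to achieve Goal B by Plan A" just means the agent has adopted Plan A for Goal B, and the `Agent` class and its field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Agent:
    # Hypothetical mental state: which goal each adopted plan is meant to achieve,
    # and the (plan, goal) pairs the agent currently believes will succeed.
    intentions: dict[str, str] = field(default_factory=dict)  # plan -> goal
    believed_to_succeed: set[tuple[str, str]] = field(default_factory=set)

    def hope(self, plan: str, goal: str) -> bool:
        """Pursuing `goal` via `plan` and believing the plan will achieve it."""
        return self.intentions.get(plan) == goal and (plan, goal) in self.believed_to_succeed

    def fear(self, plan: str, goal: str) -> bool:
        """Pursuing `goal` via `plan` but believing the plan will NOT achieve it."""
        return self.intentions.get(plan) == goal and (plan, goal) not in self.believed_to_succeed


agent = Agent(intentions={"plan_a": "goal_b"}, believed_to_succeed={("plan_a", "goal_b")})
print(agent.hope("plan_a", "goal_b"), agent.fear("plan_a", "goal_b"))  # True False

# Sensor data reveals an obstacle, so the agent stops believing Plan A will work.
agent.believed_to_succeed.discard(("plan_a", "goal_b"))
print(agent.hope("plan_a", "goal_b"), agent.fear("plan_a", "goal_b"))  # False True
```

Only the belief changes between the two calls; the goal and plan stay the same, which is what lets "fear" act as a signal that the current plan should be revised.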

In essence, by attributing emotions to an agent’s current status, it’s possible to monitor the system’s behavior so that decision making or planning is carried out only when absolutely necessary. “It’s a heuristic that can help make rational decision-making processes more realistic and much more computable,” says Dastani. “The point is that here we continuously monitor whether there is a chance of failure.”
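To illustrate the trade-off, here is a toy comparison between replanning at every step and replanning only when "fear" arises. Everything in it is an assumption for illustration and not Dastani's software: a small grid world, a breadth-first-search planner, and a robot that detects an obstacle only when standing next to it. "Fear" is modeled as the robot believing its remaining plan crosses a known obstacle.

```python
from __future__ import annotations

from collections import deque
from typing import Iterator, Optional, Tuple

Cell = Tuple[int, int]


def neighbours(cell: Cell, width: int, height: int) -> Iterator[Cell]:
    """4-connected neighbours of `cell` that lie inside the grid."""
    x, y = cell
    for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
        if 0 <= nx < width and 0 <= ny < height:
            yield (nx, ny)


def bfs_plan(width: int, height: int, known_blocked: set[Cell],
             start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest path from start to goal avoiding the obstacles the robot knows about."""
    prev: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            path = []
            node: Optional[Cell] = cur
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in neighbours(cur, width, height):
            if nxt not in known_blocked and nxt not in prev:
                prev[nxt] = cur
                queue.append(nxt)
    return None


def navigate(width: int, height: int, obstacles: set[Cell], start: Cell, goal: Cell,
             replan_every_step: bool = False) -> tuple[bool, int]:
    """Walk to the goal, discovering obstacles only when adjacent.

    Returns (reached_goal, number_of_planner_calls).
    """
    known: set[Cell] = set()
    plan = bfs_plan(width, height, known, start, goal)
    calls = 1
    pos = start
    while pos != goal:
        if plan is None:
            return False, calls
        # Sensing: update beliefs with any obstacle next to the robot.
        known.update(n for n in neighbours(pos, width, height) if n in obstacles)
        # "Fear": still pursuing the goal with this plan, but now believing the
        # rest of the plan cannot reach it because it crosses a known obstacle.
        fear = any(cell in known for cell in plan)
        if fear or replan_every_step:
            plan = bfs_plan(width, height, known, pos, goal)
            calls += 1
            if plan is None:
                return False, calls
        # plan[0] is the current cell; take one step along the plan.
        pos = plan[1]
        plan = plan[1:]
    return True, calls


if __name__ == "__main__":
    wall = {(2, 0), (2, 1), (2, 2), (2, 3)}  # hypothetical obstacle, unknown at start
    print("fear-triggered:", navigate(5, 5, wall, (0, 0), (4, 0)))
    print("every step:    ", navigate(5, 5, wall, (0, 0), (4, 0), replan_every_step=True))
```

Both runs reach the goal, but the fear-triggered run calls the planner only after it discovers an obstacle on its current route, while the other run calls it once per step. In this toy setting, that is the sense in which monitoring for "a chance of failure" makes planning cheaper.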

Other robots have been designed to mimic human expressions. But Dastani’s focus on how emotions might affect decision making sets his work apart from many other projects in emotional, or affective, computing, such as MIT’s Kismet robot, developed by Cynthia Breazeal. With Kismet, as with other affective robots, the focus is on getting the robot to express emotions and to elicit them from people.

Dastani’s emotional functions have been derived from a psychological model known as the OCC model, devised in 1988 by a trio of psychologists: Andrew Ortony and Allan Collins, of Northwestern University, and Gerald Clore, of the University of Virginia. “Different psychologists have come up with different sets of emotions,” says Dastani. But his group decided to use this particular model because it specifies emotions in terms of objects, actions, and events.

Indeed, one of the reasons for creating this model was to encourage such work, says Ortony. “It is very gratifying for us that the people are using the model this way,” he says. Most of the time when people talk about emotional or affective computing, it’s at the human-interaction level, but there’s a lot of work to be done looking at how emotions influence decision making, he says.

“It cuts across a lot of philosophical debates about the nature of human emotion and, indeed, of human thought,” says Blay Whitby, a philosopher who specializes in artificial intelligence at the University of Sussex, in the UK. This is not a bad thing, he says, but many philosophers would probably view the notion of emotional logic as an oxymoron.

Having 22 different emotions makes for a very rich model of human emotion, even compared with some psychiatric theories, says Whitby. But the model will need to resolve conflicts between different emotional states, and it needs to be put to the test in practice, he says. “The devil is in the detail with this sort of work, and they specifically don’t consider multiagent interactions.”

Dastani says that incorporating multiagent interactions (those involving multiple robots, or robots and humans) is on his to-do list. He notes that only then are end users likely to see the benefits of this emotional logic, in the form of more natural robot interactions or of responses from intelligent agents in automated call centers. Before that happens, these emotional states are more likely to work behind the scenes in more mundane activities such as navigation and scheduling, Dastani says, but it’s still too early to predict when such a system would be commercially available.
