Sponsored

A new paradigm for managing data

Open data lakehouse architectures speed insights and deliver self-service analytics capabilities.

In partnership with Dremio

Regeneron Pharmaceuticals, a biotechnology company that develops life-transforming medicines, found itself inundated with vast volumes of data during the peak of the covid-19 pandemic. To derive actionable information from these disparate data sets, which ranged from clinical trial data to real-time supply chain information, the company needed new ways to join and relate them, regardless of their format or origin.

Shah Nawaz, chief technology officer and vice president of digital technology and engineering at Regeneron, says, “At the time, everybody in the world was reporting on their covid-19 findings from different countries and in different languages.” The challenge was how to make sense of these massive data sets in a timely manner, assisting researchers and clinicians, and ultimately getting the best treatments to patients faster. After all, he says, “when you’re dealing with large-scale data sets in hundreds, if not thousands, of locations, connecting the dots can be a complex problem.”

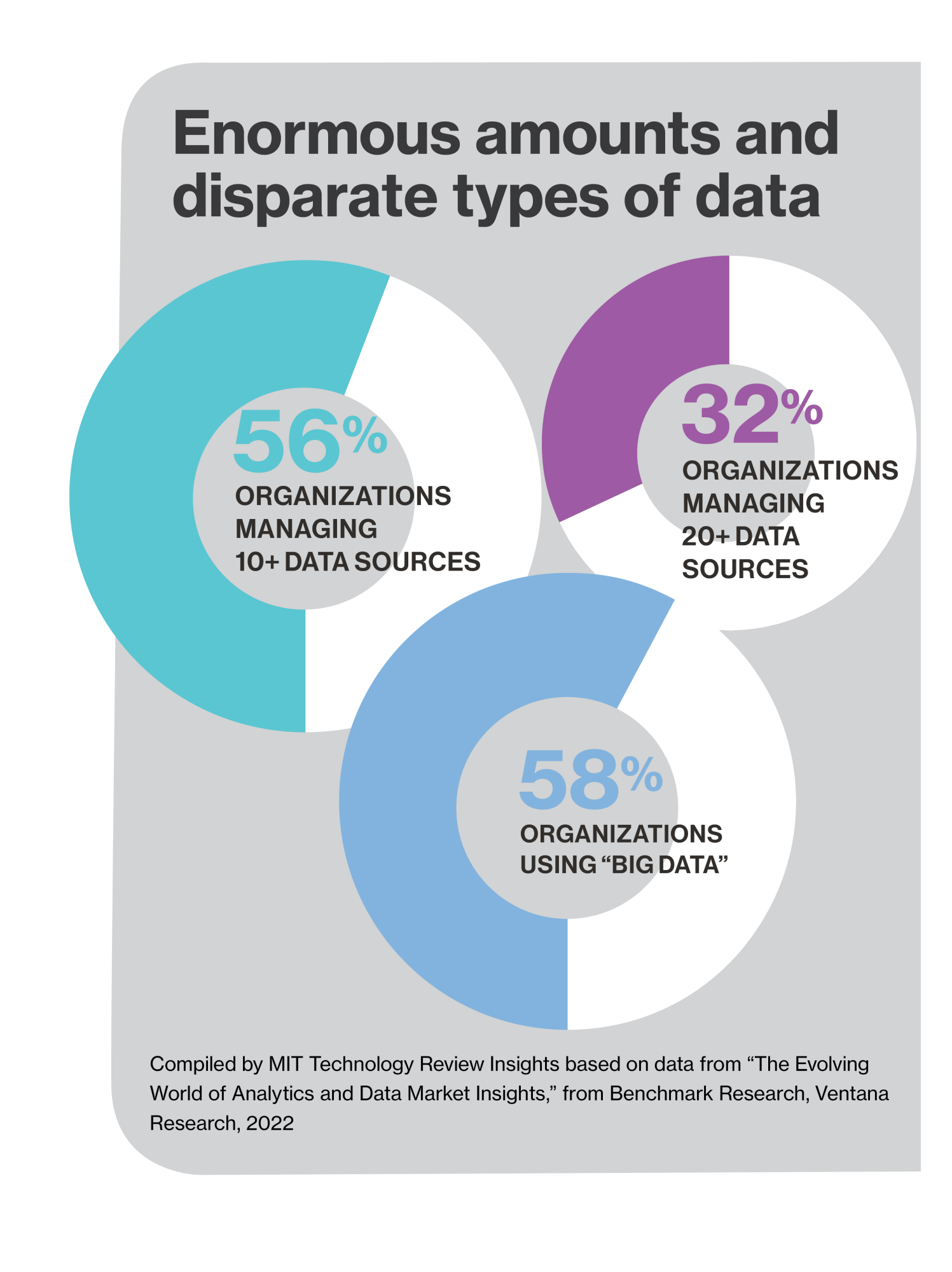

Regeneron isn’t the only company eager to derive more value from its data. Despite the enormous volumes of data they collect and the capital they invest in data management solutions, many business leaders are still not seeing the benefits. According to IDC research, 83% of CEOs want their organizations to be more data driven, but they struggle with the cultural and technological changes needed to execute an effective data strategy.

In response, many organizations, including Regeneron, are turning to a new form of data architecture as a modern approach to data management. In fact, by 2024, more than three-quarters of current data lake users will be investing in this type of hybrid “data lakehouse” architecture to enhance the value generated from their accumulated data, according to Matt Aslett, a research director with Ventana Research.

“Data lakehouse” is the term for a modern, open data architecture that combines the performance and optimization of a data warehouse with the flexibility of a data lake. But achieving the speed, performance, agility, optimization, and governance promised by this technology also requires embracing best practices that prioritize corporate goals and support enterprise-wide collaboration.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.
