How it feels to be sexually objectified by an AI

Plus: How US police use counterterrorism money to buy spy tech.

This story originally appeared in The Algorithm, our weekly newsletter on AI.

My social media feeds this week have been dominated by two hot topics: OpenAI’s latest chatbot, ChatGPT, and the viral AI avatar app Lensa. I love playing around with new technology, so I gave Lensa a go.

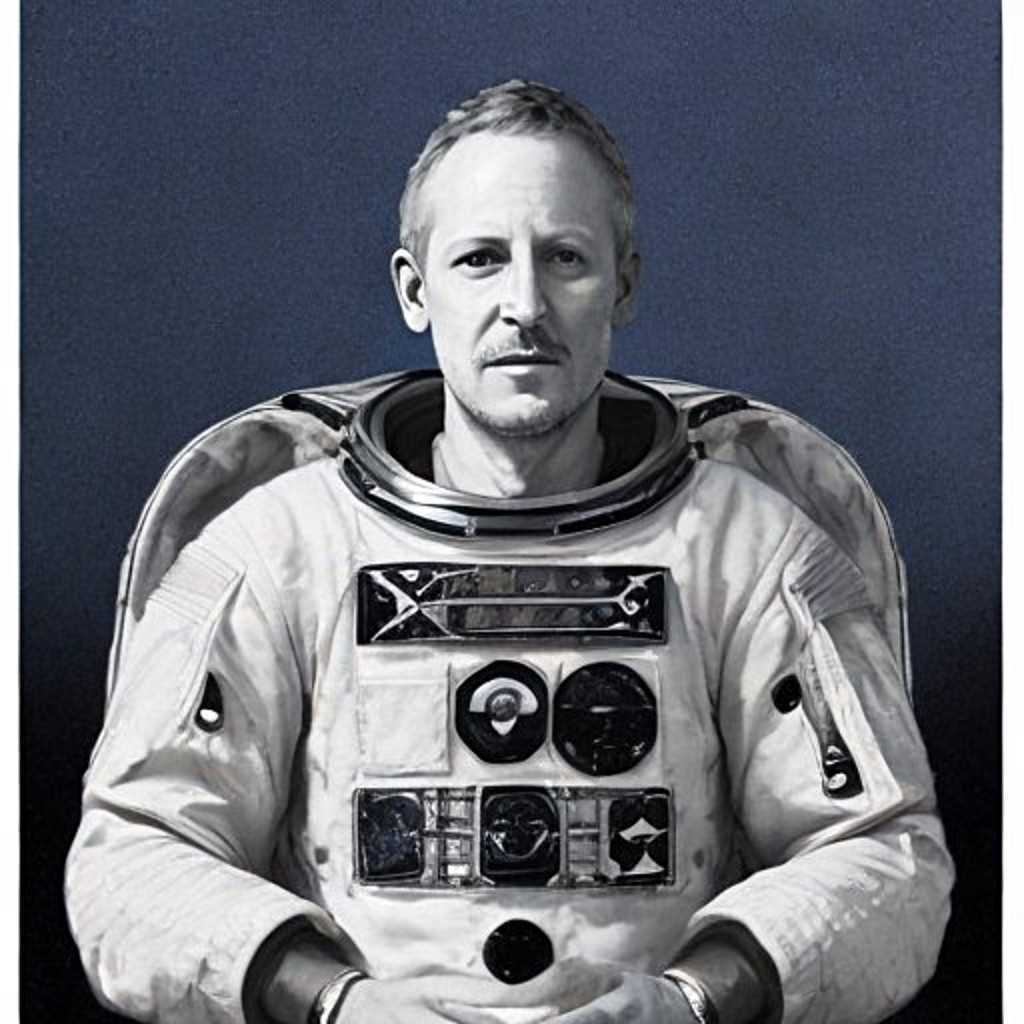

I was hoping to get results similar to those of my colleagues at MIT Technology Review. The app generated realistic and flattering avatars for them (think astronauts, warriors, and electronic music album covers).

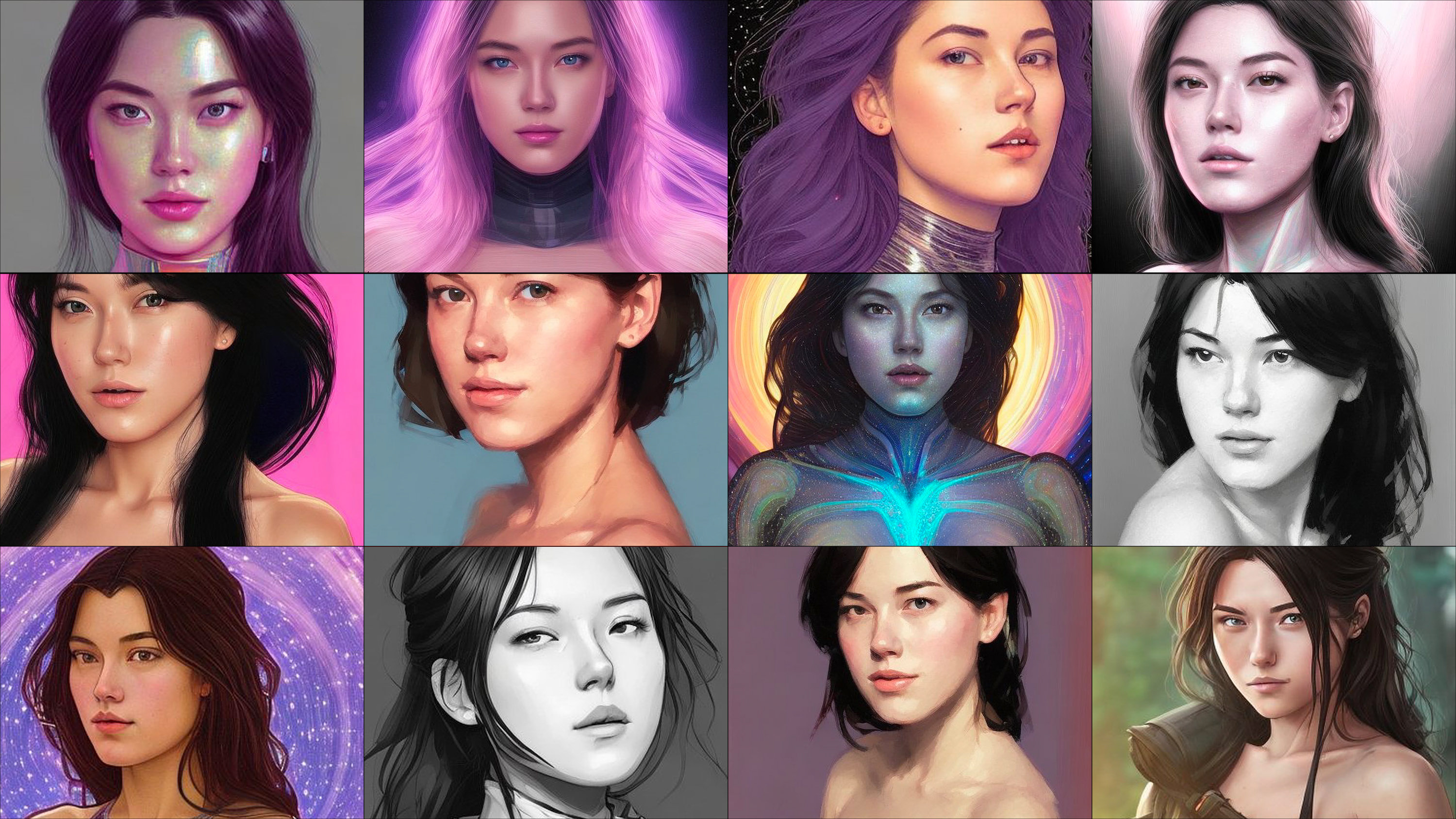

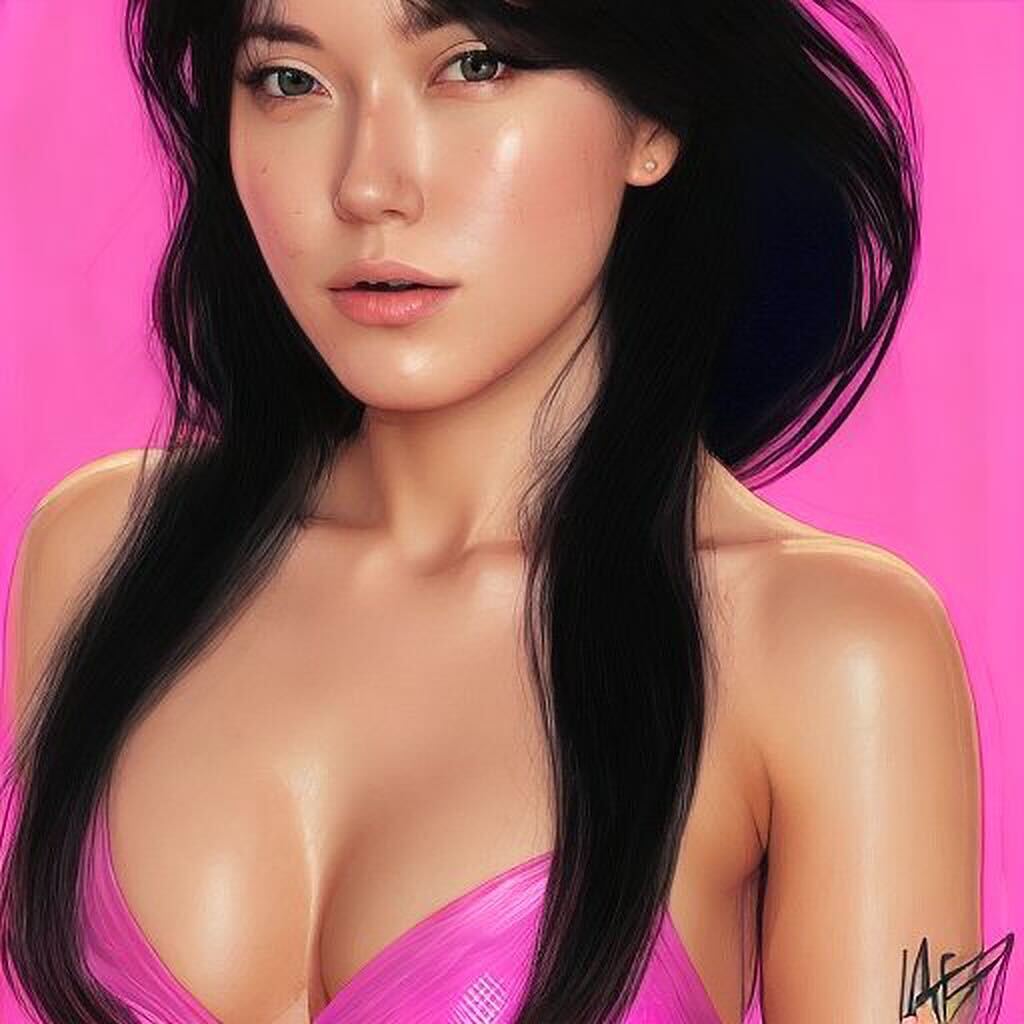

Instead, I got tons of nudes. Out of 100 avatars I generated, 16 were topless, and another 14 had me in extremely skimpy clothes and overtly sexualized poses. You can read my story here.

Lensa creates its avatars using Stable Diffusion, an open-source AI model that generates images based on text prompts. Stable Diffusion is trained on LAION-5B, a massive open-source data set that has been compiled by scraping images from the internet.

And because the internet is overflowing with images of naked or barely dressed women, and pictures reflecting sexist, racist stereotypes, the data set is also skewed toward these kinds of images.

As an Asian woman, I thought I’d seen it all. I’ve felt icky after realizing a former date only dated Asian women. I’ve been in fights with men who think Asian women make great housewives. I’ve heard crude comments about my genitals. I’ve been mixed up with the other Asian person in the room.

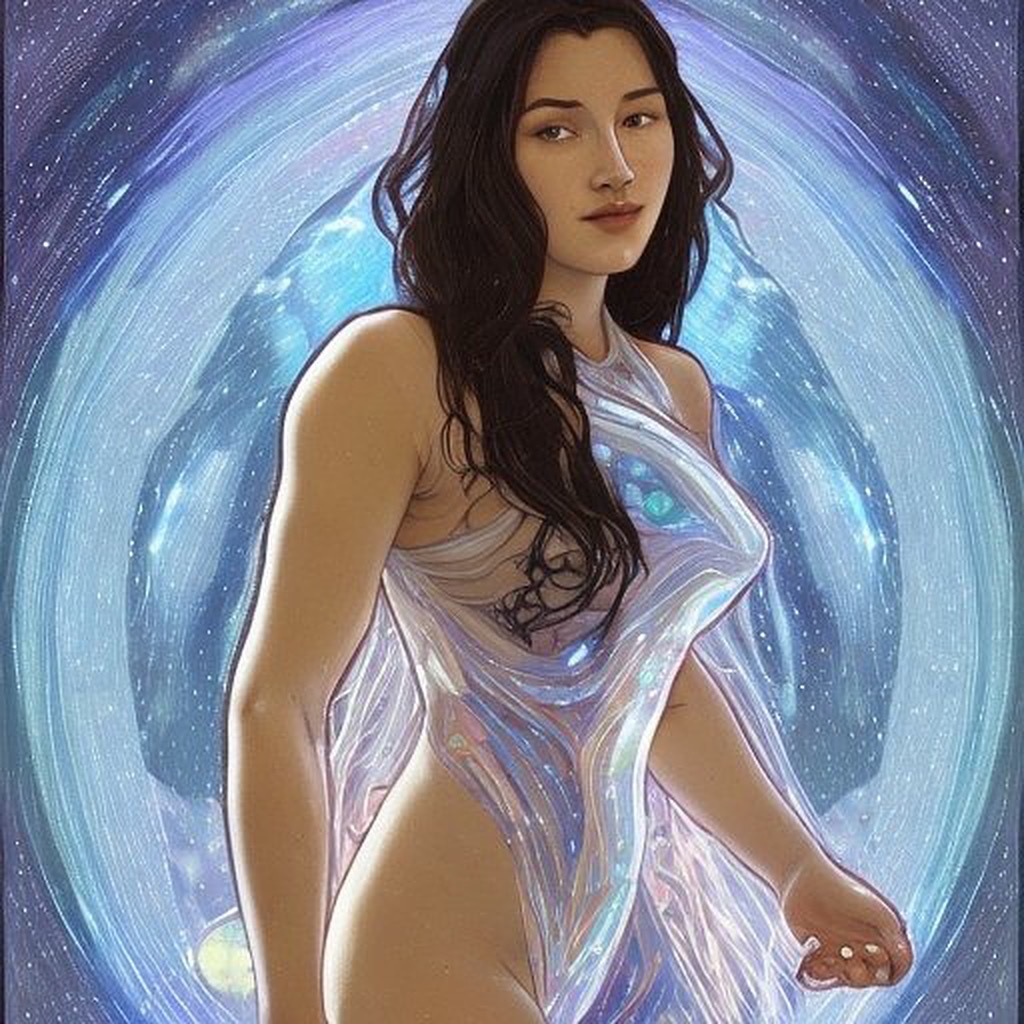

Being sexualized by an AI was not something I expected, although it is not surprising. Frankly, it was crushingly disappointing. My colleagues and friends got the privilege of being stylized into artful representations of themselves. They were recognizable in their avatars! I was not. I got images of generic Asian women clearly modeled on anime or video-game characters.

Funnily enough, I got more realistic portrayals of myself when I told the app I was male. This probably caused it to apply a different set of prompts to my images. The differences are stark. In the images generated using male filters, I have clothes on, I look assertive, and, most important, I can recognize myself in the pictures.

“Women are associated with sexual content, whereas men are associated with professional, career-related content in any important domain such as medicine, science, business, and so on,” says Aylin Caliskan, an assistant professor at the University of Washington who studies biases and representation in AI systems.

This sort of stereotyping is easy to spot with a new tool that lets anyone explore the different biases in Stable Diffusion. It was built by Sasha Luccioni, a researcher at the AI startup Hugging Face.

The tool shows how the AI model offers pictures of white men as doctors, architects, and designers while women are depicted as hairdressers and maids.

But it’s not just the training data that is to blame. The companies developing these models and apps make active choices about how they use the data, says Ryan Steed, a PhD student at Carnegie Mellon University, who has studied biases in image-generation algorithms.

“Someone has to choose the training data, decide to build the model, decide to take certain steps to mitigate those biases or not,” he says.

Prisma Labs, the company behind Lensa, says all genders face “sporadic sexualization.” But to me, that’s not good enough. Somebody made the conscious decision to apply certain color schemes and scenarios and highlight certain body parts.

In the short term, some obvious harms could result from these decisions, such as easy access to deepfake generators that create nonconsensual nude images of women or children.

But Aylin Caliskan sees even bigger, longer-term problems ahead. As AI-generated images with their embedded biases flood the internet, they will eventually become training data for future AI models. “Are we going to create a future where we keep amplifying these biases and marginalizing populations?” she says.

That’s a truly frightening thought, and I for one hope we give these issues due time and consideration before the problem gets even bigger and more embedded.

Deeper Learning

How US police use counterterrorism money to buy spy tech

Grant money meant to help cities prepare for terror attacks is being spent on “massive purchases of surveillance technology” for US police departments, a new report by the advocacy organizations Action Center on Race and Economy (ACRE), LittleSis, MediaJustice, and the Immigrant Defense Project shows.

Shopping for AI-powered spytech: For example, the Los Angeles Police Department used funding intended for counterterrorism to buy automated license plate readers worth at least $1.27 million, radio equipment worth upwards of $24 million, Palantir data fusion platforms (often used for AI-powered predictive policing), and social media surveillance software.

Why this matters: For various reasons, a lot of problematic tech ends up in high-stakes sectors such as policing with little to no oversight. For example, the facial recognition company Clearview AI offers “free trials” of its tech to police departments, which allows them to use it without a purchasing agreement or budget approval. Federal grants for counterterrorism don’t require as much public transparency and oversight. The report’s findings are yet another example of a growing pattern in which citizens are kept in the dark about police tech procurement. Read more from Tate Ryan-Mosley here.

Bits and Bytes

ChatGPT, Galactica, and the progress trap

AI researchers Abeba Birhane and Deborah Raji write that the “lackadaisical approaches to model release” (as seen with Meta’s Galactica) and the extremely defensive response to critical feedback constitute a “deeply concerning” trend in AI right now. They argue that when models don’t “meet the expectations of those most likely to be harmed by them,” then “their products are not ready to serve these communities and do not deserve widespread release.” (Wired)

The new chatbots could change the world. Can you trust them?

People have been blown away by how coherent ChatGPT is. The trouble is, a significant amount of what it spews is nonsense. Large language models are no more than confident bullshitters, and we’d be wise to approach them with that in mind. (The New York Times)

Stumbling with their words, some people let AI do the talking

Despite the tech’s flaws, some people, such as those with learning difficulties, are still finding large language models useful as a way to help express themselves. (The Washington Post)

EU countries' stance on AI rules draws criticism from lawmakers and activists

The EU’s AI law, the AI Act, is edging closer to being finalized. EU countries have approved their position on what the regulation should look like, but critics say many important issues, such as the use of facial recognition by companies in public places, were not addressed, and many safeguards were watered down. (Reuters)

Investors seek to profit from generative-AI startups

It’s not just you. Venture capitalists also think generative-AI startups such as Stability AI, which created the popular text-to-image model Stable Diffusion, are the hottest things in tech right now. And they’re throwing stacks of money at them. (The Financial Times)
