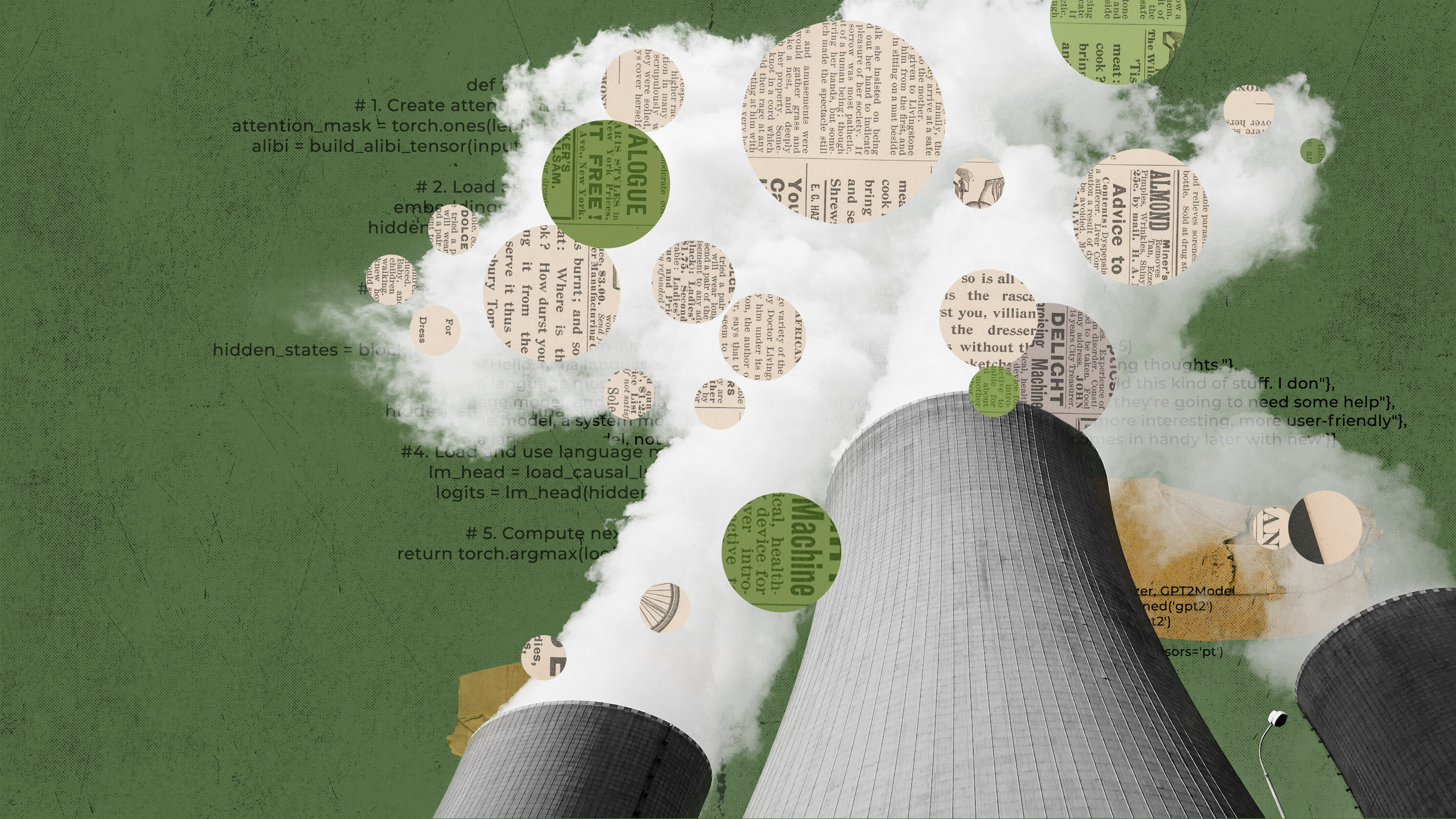

We’re getting a better idea of AI’s true carbon footprint

AI startup Hugging Face has undertaken the tech sector’s first attempt to estimate the broader carbon footprint of a large language model.

Large language models (LLMs) have a dirty secret: they require vast amounts of energy to train and run. What’s more, it’s still a bit of a mystery exactly how big these models’ carbon footprints really are. AI startup Hugging Face believes it’s come up with a new, better way to calculate that more precisely, by estimating emissions produced during the model’s whole life cycle rather than just during training.

It could be a step toward more realistic data from tech companies about the carbon footprint of their AI products at a time when experts are calling for the sector to do a better job of evaluating AI’s environmental impact. Hugging Face’s work is published in a non-peer-reviewed paper.

To test its new approach, Hugging Face estimated the overall emissions for its own large language model, BLOOM, which was launched earlier this year. It was a process that involved adding up lots of different numbers: the amount of energy used to train the model on a supercomputer, the energy needed to manufacture the supercomputer’s hardware and maintain its computing infrastructure, and the energy used to run BLOOM once it had been deployed. The researchers calculated that final part using a software tool called CodeCarbon, which tracked the carbon dioxide emissions BLOOM was producing in real time over a period of 18 days.

Hugging Face estimated that BLOOM’s training led to 25 metric tons of carbon dioxide emissions. But, the researchers found, that figure doubled when they took into account the emissions produced by the manufacturing of the computer equipment used for training, the broader computing infrastructure, and the energy required to actually run BLOOM once it was trained.
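The accounting described above is, at its core, a sum. Here is a minimal sketch in Python that uses only the two figures reported in this article: roughly 25 metric tons for training, and a total that doubles once the other sources are included. The function and variable names are illustrative, and the non-training sources are lumped together because the article does not break them out.

```python
def life_cycle_total(training_t: float, other_sources_t: float) -> float:
    """Add training emissions to all other life-cycle emissions (metric tons CO2)."""
    return training_t + other_sources_t


TRAINING_T = 25.0          # reported: emissions from training BLOOM
TOTAL_T = 2 * TRAINING_T   # reported: the figure "doubled" over the wider life cycle

# Implied combined share of hardware manufacturing, computing
# infrastructure, and running the model after training.
other_sources_t = TOTAL_T - TRAINING_T

total = life_cycle_total(TRAINING_T, other_sources_t)
training_share = TRAINING_T / total

print(f"life-cycle total: {total:.0f} t CO2")
print(f"share from training alone: {training_share:.0%}")
```

On these figures, counting training alone would capture only about half of the estimated footprint.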

While that may seem like a lot for one model—50 metric tons of carbon dioxide emissions is the equivalent of around 60 flights between London and New York—it's significantly less than the emissions associated with other LLMs of the same size. This is because BLOOM was trained on a French supercomputer that is mostly powered by nuclear energy, which doesn’t produce carbon dioxide emissions. Models trained in China, Australia, or some parts of the US, which have energy grids that rely more on fossil fuels, are likely to be more polluting.
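The effect of the grid can be sketched as energy consumed multiplied by the carbon intensity of the electricity. The numbers below are hypothetical placeholders chosen only to show the shape of the calculation; they are not BLOOM's actual energy use or any real grid's measured intensity, and the function name is illustrative.

```python
def training_emissions_t(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in metric tons CO2 for a given energy use and grid carbon intensity."""
    grams = energy_kwh * intensity_g_per_kwh
    return grams / 1_000_000  # grams -> metric tons


# HYPOTHETICAL inputs, for illustration only.
ENERGY_KWH = 400_000.0         # assumed energy for one training run
LOW_CARBON_G_PER_KWH = 50.0    # assumed intensity of a mostly nuclear grid
FOSSIL_HEAVY_G_PER_KWH = 500.0  # assumed intensity of a fossil-heavy grid

low = training_emissions_t(ENERGY_KWH, LOW_CARBON_G_PER_KWH)
high = training_emissions_t(ENERGY_KWH, FOSSIL_HEAVY_G_PER_KWH)

print(f"low-carbon grid:   {low:.0f} t CO2")
print(f"fossil-heavy grid: {high:.0f} t CO2")
print(f"ratio: {high / low:.0f}x")
```

The same training run scales linearly with grid intensity, which is why where a model is trained can matter as much as how large it is.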

After BLOOM was launched, Hugging Face estimated that using the model emitted around 19 kilograms of carbon dioxide per day, which is similar to the emissions produced by driving around 54 miles in an average new car.
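To put the daily figure in context, here is a rough back-of-the-envelope sketch. It uses the reported 19 kilograms per day, the 54-mile driving comparison, and the roughly 25-metric-ton training estimate. It assumes, purely for illustration, that the daily rate stays constant, which the article does not claim.

```python
DAILY_KG = 19.0          # reported: emissions from running BLOOM per day
EQUIV_MILES = 54.0       # reported: comparable distance in an average new car
TRAINING_KG = 25_000.0   # reported: training emissions (25 metric tons)

# Per-mile figure implied by the article's driving comparison.
kg_per_mile = DAILY_KG / EQUIV_MILES

# ASSUMPTION: usage stays at the measured daily rate all year.
annual_t = DAILY_KG * 365 / 1000

# Days of deployment at that rate needed to match training emissions.
days_to_match_training = TRAINING_KG / DAILY_KG

print(f"implied car emissions: {kg_per_mile:.2f} kg CO2 per mile")
print(f"one year of use: {annual_t:.1f} t CO2")
print(f"days to equal training: {days_to_match_training:.0f}")
```

Under that assumption, it would take several years of deployment for emissions from use to match those from training.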

By way of comparison, OpenAI’s GPT-3 and Meta’s OPT were estimated to emit more than 500 and 75 metric tons of carbon dioxide, respectively, during training. GPT-3’s vast emissions can be partly explained by the fact that it was trained on older, less efficient hardware. But it is hard to say what the figures are for certain; there is no standardized way to measure carbon dioxide emissions, and these figures are based on external estimates or, in Meta’s case, limited data the company released.

“Our goal was to go above and beyond just the carbon dioxide emissions of the electricity consumed during training and to account for a larger part of the life cycle in order to help the AI community get a better idea of their impact on the environment and how we could begin to reduce it,” says Sasha Luccioni, a researcher at Hugging Face and the paper’s lead author.

Hugging Face’s paper sets a new standard for organizations that develop AI models, says Emma Strubell, an assistant professor in the school of computer science at Carnegie Mellon University, who wrote a seminal paper on AI’s impact on the climate in 2019. She was not involved in this new research.

The paper “represents the most thorough, honest, and knowledgeable analysis of the carbon footprint of a large ML model to date as far as I am aware, going into much more detail … than any other paper [or] report that I know of,” says Strubell.

The paper also provides some much-needed clarity on just how enormous the carbon footprint of large language models really is, says Lynn Kaack, an assistant professor of computer science and public policy at the Hertie School in Berlin, who was also not involved in Hugging Face’s research. She says she was surprised to see just how big the numbers around life-cycle emissions are, but that still more work needs to be done to understand the environmental impact of large language models in the real world.

“That’s much, much harder to estimate. That’s why often that part just gets overlooked,” says Kaack, who co-wrote a paper published in Nature last summer proposing a way to measure the knock-on emissions caused by AI systems.

For example, recommendation algorithms are often used in advertising, which in turn drives people to buy more things, which causes more carbon dioxide emissions. It’s also important to understand how AI models are used, Kaack says. A lot of companies, such as Google and Meta, use AI models to do things like classify user comments or recommend content. Each of these actions uses very little power, but they can happen a billion times a day. That adds up.
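A quick sketch shows how tiny per-action costs accumulate at that scale. Only the billion-actions-a-day figure comes from the text above; the energy per action and the grid intensity are hypothetical values picked for illustration, not measurements from any company.

```python
ACTIONS_PER_DAY = 1_000_000_000   # from the text: "a billion times a day"

# HYPOTHETICAL inputs, for illustration only.
JOULES_PER_ACTION = 360           # assumed energy per action (0.1 watt-hours)
GRID_G_PER_KWH = 400              # assumed grid carbon intensity

JOULES_PER_KWH = 3_600_000        # unit conversion: 1 kWh = 3.6 million joules

daily_kwh = ACTIONS_PER_DAY * JOULES_PER_ACTION / JOULES_PER_KWH
daily_t = daily_kwh * GRID_G_PER_KWH / 1_000_000  # grams -> metric tons

print(f"energy per day: {daily_kwh:,.0f} kWh")
print(f"emissions per day: {daily_t:.0f} t CO2")
```

With these made-up inputs, an action that costs a tenth of a watt-hour still produces tens of metric tons of carbon dioxide a day once it is repeated a billion times.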

It’s estimated that the global tech sector accounts for 1.8% to 3.9% of global greenhouse-gas emissions. Although only a fraction of those emissions are caused by AI and machine learning, AI’s carbon footprint is still very high for a single field within tech.

With a better understanding of just how much energy AI systems consume, companies and developers can make choices about the trade-offs they are willing to make between pollution and costs, Luccioni says.

The paper’s authors hope that companies and researchers will be able to consider how they can develop large language models in a way that limits their carbon footprint, says Sylvain Viguier, who coauthored Hugging Face’s paper on emissions and is the director of applications at Graphcore, a semiconductor company.

It might also encourage people to shift toward more efficient ways of doing AI research, such as fine-tuning existing models instead of pushing for models that are even bigger, says Luccioni.

The paper’s findings are a “wake-up call to the people who are using that kind of model, which are often big tech companies,” says David Rolnick, an assistant professor in the school of computer science at McGill University and at Mila, the Quebec AI Institute. He coauthored the Nature paper on knock-on emissions with Kaack and was not involved in Hugging Face’s research.

“The impacts of AI are not inevitable. They’re a result of the choices that we make about how we use these algorithms as well as what algorithms to use,” Rolnick says.

Update: A previous version of this story incorrectly attributed a quote to Lynn Kaack. It has now been amended. We apologize for any inconvenience.
