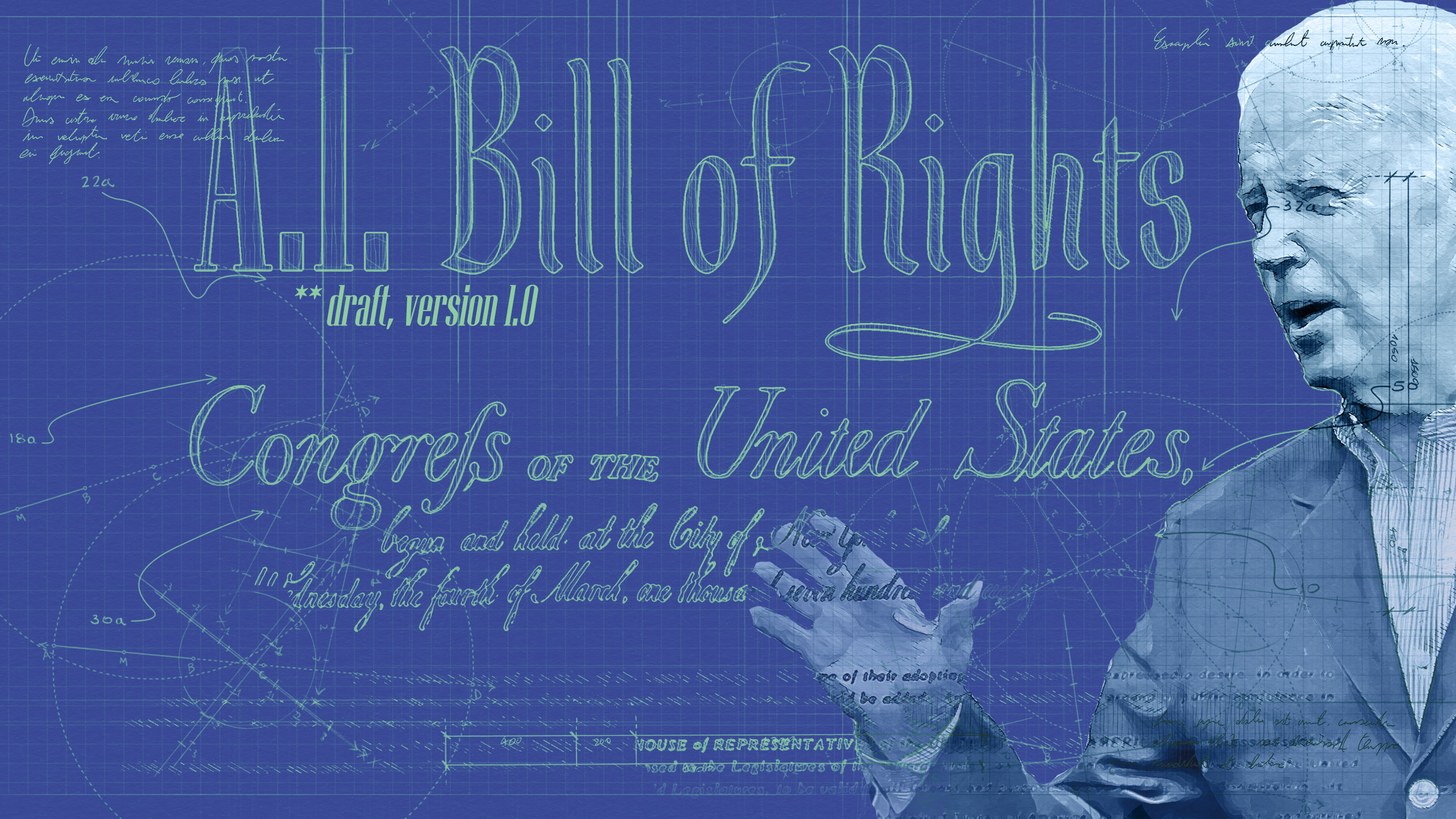

The White House just unveiled a new AI Bill of Rights

It's the first big step to hold AI to account.

The White House wants Americans to know: The age of AI accountability is coming.

President Joe Biden has today unveiled a new AI Bill of Rights, which outlines five protections Americans should have in the AI age.

Biden has previously called for stronger privacy protections and for tech companies to stop collecting data. But the US—home to some of the world’s biggest tech and AI companies—has so far been one of the only Western nations without clear guidance on how to protect its citizens against AI harms.

Today’s announcement is the White House’s vision of how the US government, technology companies, and citizens should work together to hold AI accountable. However, critics say the plan lacks teeth and the US needs even tougher regulation around AI.

In September, the administration announced core principles for tech accountability and reform, such as stopping discriminatory algorithmic decision-making, promoting competition in the technology sector, and providing federal protections for privacy.

The AI Bill of Rights is a blueprint for how to achieve those goals. The vision for it was first introduced a year ago by the Office of Science and Technology Policy (OSTP), a US government office that advises the president on science and technology. It provides practical guidance to government agencies and a call to action for technology companies, researchers, and civil society to build these protections.

“These technologies are causing real harms in the lives of Americans—harms that run counter to our core democratic values, including the fundamental right to privacy, freedom from discrimination, and our basic dignity,” a senior administration official told reporters at a press conference.

AI is a powerful technology that is transforming our societies. It also has the potential to cause serious harm, which often disproportionately affects minorities. Facial recognition technologies used in policing and algorithms that allocate benefits are not as accurate for ethnic minorities, for example.

The new blueprint aims to redress that balance. It says that Americans should be protected from unsafe or ineffective systems; that algorithms should not be discriminatory and systems should be used as designed in an equitable way; and that citizens should have agency over their data and should be protected from abusive data practices through built-in safeguards. Citizens should also know whenever an automated system is being used on them and understand how it contributes to outcomes. Finally, people should always be able to opt out of AI systems in favor of a human alternative and have access to remedies when there are problems.

“We want to make sure that we are protecting people from the worst harms of this technology, no matter the specific underlying technological process used,” a second senior administration official said.

The OSTP’s AI Bill of Rights is “impressive,” says Marc Rotenberg, who heads the Center for AI and Digital Policy, a nonprofit that tracks AI policy.

“This is clearly a starting point. That doesn't end the discussion over how the US implements human-centric and trustworthy AI,” he says. “But it is a very good starting point to move the US to a place where it can carry forward on that commitment.”

Willmary Escoto, US policy analyst for the digital rights group Access Now, says the guidelines skillfully highlight the “importance of data minimization” while “naming and addressing the diverse harms people experience from other AI-enabled technologies, like emotion recognition.”

“The AI Bill of Rights could have a monumental impact on fundamental civil liberties for Black and Latino people across the nation,” says Escoto.

The tech sector welcomed the White House’s acknowledgment that AI can also be used for good.

Matt Schruers, president of the tech lobby CCIA, which counts companies including Google, Amazon, and Uber as its members, says he appreciates the administration’s “direction that government agencies should lead by example in developing AI ethics principles, avoiding discrimination, and developing a risk management framework for government technologists.”

Shaundra Watson, policy director for AI at the tech lobby BSA, whose members include Microsoft and IBM, says she welcomes the document’s focus on risks and impact assessments. “It will be important to ensure that these principles are applied in a manner that increases protections and reliability in practice,” Watson says.

While the EU is pressing on regulations that aim to prevent AI harms and hold companies accountable for harmful AI tech, and has a strict data protection regime, the US has been loath to introduce new regulations.

The newly outlined protections echo those introduced in the EU, but the document is nonbinding and does not constitute US government policy, because the OSTP cannot enact law. It will be up to lawmakers to propose new bills.

Russell Wald, director of policy for the Stanford Institute for Human-Centered AI, says the document lacks details or mechanisms for enforcement.

“It is disheartening to see the lack of coherent federal policy to tackle desperately needed challenges posed by AI, such as federally coordinated monitoring, auditing, and reviewing actions to mitigate the risks and harm brought by deployed or open-source foundation models,” he says.

Rotenberg says he’d prefer for the US to implement regulations like the EU’s AI Act, an upcoming law that aims to add extra checks and balances to AI uses that have the most potential to cause harm to humans.

“We’d like to see some clear prohibitions on AI deployments that have been most controversial, which include, for example, the use of facial recognition for mass surveillance,” he says.

The AI Bill of Rights may set the stage for future legislation, such as the passage of the Algorithmic Accountability Act or the establishment of an agency to regulate AI, says Sneha Revanur, who leads Encode Justice, an organization that focuses on young people and AI.

“Though it is limited in its ability to address the harms of the private sector, the AI Bill of Rights can live up to its promise if it is enforced meaningfully, and we hope that regulation with real teeth will follow suit,” she says.

This story has been updated to include Sneha Revanur's quote.
