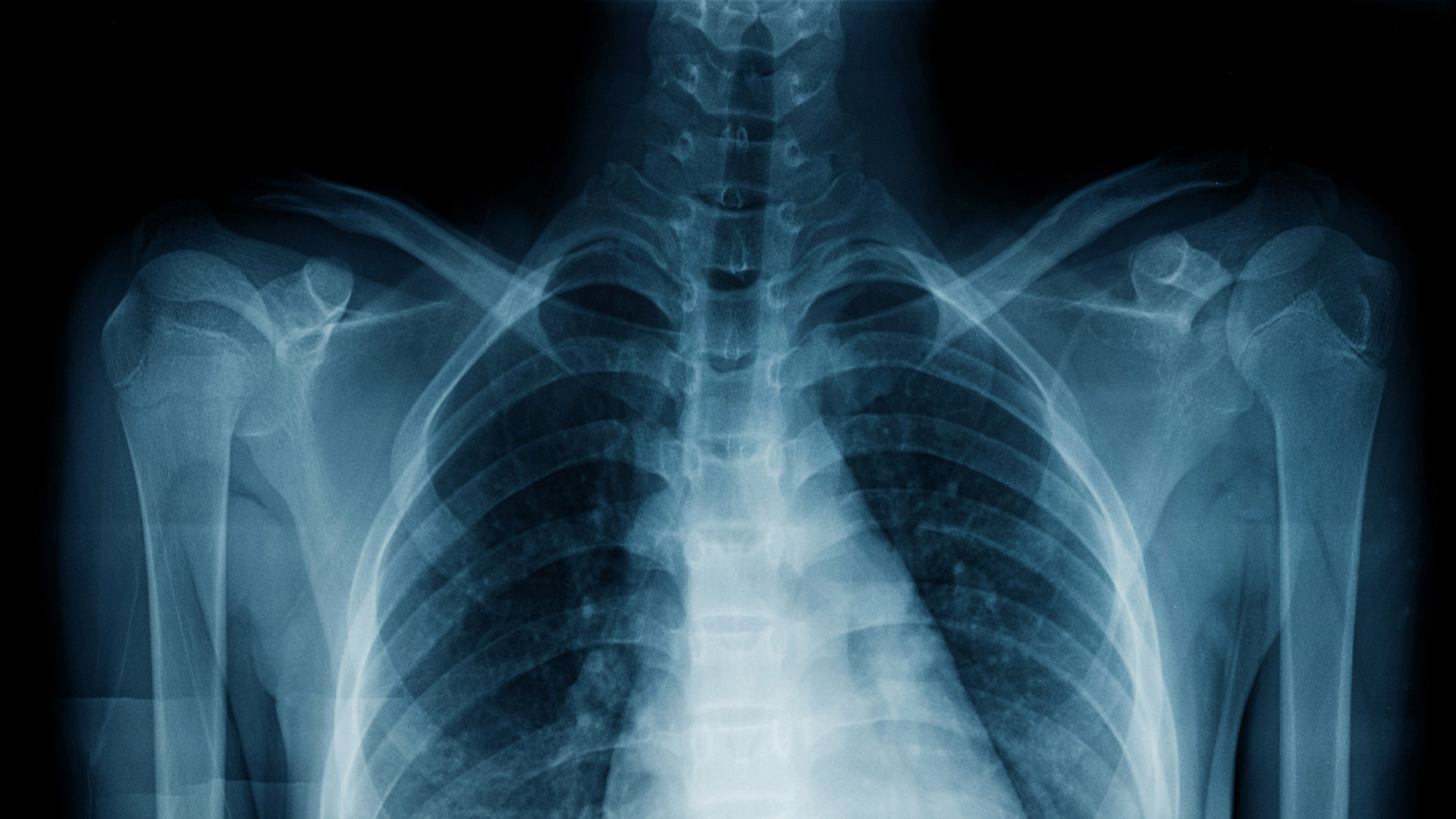

An AI used medical notes to teach itself to spot disease on chest x-rays

The model can diagnose problems as well as a human specialist, and doesn't need lots of labor-intensive training data.

After crunching through thousands of chest x-rays and the clinical reports that accompany them, an AI has learned to spot diseases in those scans as accurately as a human radiologist.

The majority of current diagnostic AI models are trained on scans labeled by humans, but that labeling is a time-consuming process. The new model, called CheXzero, can instead “learn” on its own from existing medical reports that specialists have written in natural language.

The findings suggest that hand-labeling x-rays isn’t necessary for training AI models to interpret medical images, which could save both time and money.

A team of researchers from Harvard Medical School trained CheXzero on a publicly available data set of more than 377,000 chest x-rays and more than 227,000 corresponding clinical reports. Rather than learning from structured data that had been manually labeled for the task, the model learned to associate certain types of images with the notes already written about them.
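To make "learning from paired images and reports" a little more concrete, here is a minimal sketch of one common way to train such a model: a contrastive, CLIP-style objective that rewards the model when each x-ray is most similar to its own report and less similar to the others in the batch. The article does not describe CheXzero's training objective, so treat this as an illustrative assumption rather than the team's method. The function names, the tiny 2-D embeddings, and the temperature of 0.07 are all hypothetical; a real system would produce the embeddings with trained image and text encoders.

```python
import math

def normalize(v):
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity_matrix(image_embs, text_embs, temperature=0.07):
    # sim[i][j] is the temperature-scaled cosine similarity
    # between x-ray embedding i and report embedding j.
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(im, tx)) / temperature for tx in txts]
            for im in imgs]

def cross_entropy(logits, target):
    # -log(softmax(logits)[target]), using the max-subtraction trick for stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    # Hypothetical sketch: pair i (x-ray i, report i) is the correct match,
    # and every other pairing in the batch acts as a negative example.
    sim = similarity_matrix(image_embs, text_embs, temperature)
    n = len(sim)
    # Each x-ray should pick out its own report (rows) ...
    img_to_txt = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # ... and each report should pick out its own x-ray (columns).
    txt_to_img = sum(cross_entropy([sim[i][j] for i in range(n)], j)
                     for j in range(n)) / n
    return (img_to_txt + txt_to_img) / 2

# Toy 2-D embeddings (hypothetical): two x-rays and two reports.
images = [[1.0, 0.0], [0.0, 1.0]]
reports_matched = [[0.9, 0.1], [0.1, 0.9]]  # report i describes x-ray i
reports_swapped = [[0.1, 0.9], [0.9, 0.1]]  # reports attached to the wrong x-rays

print(contrastive_loss(images, reports_matched) < contrastive_loss(images, reports_swapped))  # True
```

Training would nudge the encoders to lower this loss, pulling each x-ray toward its own report and away from the others. No hand-written labels appear anywhere in the sketch; the only supervision is which report was written about which scan.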

CheXzero’s performance was then tested on separate data sets from two different institutions, one in another country, to check that it was capable of matching images with the corresponding notes even when the reports contained differing terminology.

The research, described in Nature Biomedical Engineering, found that the model was more effective at identifying issues such as pneumonia, collapsed lungs, and lesions than other self-supervised AI models. In fact, it was similar in accuracy to human radiologists.

Others have tried to use unstructured medical data in this way, but this is the first time a team’s AI model has learned from unstructured text and matched radiologists’ performance, says Ekin Tiu, an undergraduate student at Stanford and a visiting researcher who coauthored the report. The model can also predict multiple diseases from a single x-ray with a high degree of accuracy, Tiu says.

“We are the first to do that and demonstrate that effectively in this field,” he says.

The model’s code has been made publicly available to other researchers in the hope that it could be applied to CT scans, MRIs, and echocardiograms to help detect a wider range of diseases in other parts of the body, says Pranav Rajpurkar, an assistant professor of biomedical informatics in the Blavatnik Institute at Harvard Medical School, who led the project.

“Our hope is that people are able to apply this out of the box to other chest x-ray data sets and diseases that they care about,” he says.

Rajpurkar is also optimistic that diagnostic AI models requiring minimal supervision could help increase access to health care in countries and communities where specialists are scarce.

“It makes a lot of sense to use the richer training signal from reports,” says Christian Leibig, director of machine learning at German startup Vara, which uses AI to detect breast cancer. “It’s quite an achievement to get to that level of performance.”

MIT Technology Review