Building a weather app for climate change

This project aims to get accurate local climate information into the hands of those who must ready their communities for wilder weather.

Climate Grand Challenges Flagship Project:

Bringing computation to the climate challenge

Part of a special report on MIT’s Climate Grand Challenges Initiative

Running the models that project the future climate takes supercomputers the size of basketball courts. These models divide Earth and its atmosphere into thousands of representative cells and then calculate the climate conditions in each one, factoring in everything from temperature and wind speed to vegetation and cloud cover. Building these models takes years, and dozens or even hundreds of scientists.

The complexity and expense of the process mean that only scientists, working in large teams, can understand the models. The information they provide isn’t easily accessible to the people who need it most: those deciding how to prepare their communities for the dangerous impacts of climate change.

To address that problem, the Climate Grand Challenges (CGC) flagship project led by Noelle Eckley Selin and Raffaele Ferrari will build climate models that are not only more accurate, but also more nimble and easier for governments, industries, and communities to use.

“The idea is to create a tool that gives the latest and greatest climate information, that uses advances in artificial intelligence and computational techniques to allow users to have access to the most advanced—and relevant—climate information,” says Selin, a professor in the Institute for Data, Systems, and Society and the Department of Earth, Atmospheric, and Planetary Sciences (EAPS). “We’re hoping it will be as easy as using a weather app.”

Models developed at MIT and elsewhere over six decades have given the average person access to short-term weather information that’s useful for everyday decisions, like what jacket to put on when leaving the house. But the limits of computing power mean that climate models, which work on time scales of decades and centuries, effectively ignore the finer processes that produce day-to-day weather. These models confirm that the climate will continue to change, but they lack the precision to make forecasts at the local and regional levels.

“There are still uncertainties in the projections,” says Ferrari, the Cecil and Ida Green Professor of Oceanography at EAPS and director of the Program in Atmospheres, Oceans, and Climate. “We don’t have computers powerful enough to resolve all the processes in the climate system. Individual clouds, individual plants on land—collectively they have a huge impact on the climate. But in the models, these processes fall through the cracks.”

Current models calculate climate futures in a grid of cells that approximate processes on land, water, and air. But their resolution is so coarse they can account for only limited features. Massachusetts, for example, would roughly equal four cells, and the model would have the capacity to represent only a single cloud in each one.

“Obviously there can be more than four clouds in the state of Massachusetts,” says Ferrari.

Simulating every cloud would require too much computing power. But it’s possible to run high-resolution simulations of individual clouds in small regions, verify the simulations against field studies, and then use them to simulate a range of situations that could occur around the world. These simulations can be used to train algorithms that reproduce the net impact of clouds in each cell of the model. Representing the net impact of small-scale processes is crucial to improving the fidelity of climate projections. Low-level clouds, for example, cool the climate by reflecting sunlight, so representing their net effect accurately in the model is critical. Increasing the fraction of the globe they cover by just 4% would cool Earth by 2 to 3 °C—and less cloud cover would have the opposite effect.
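The idea of learning the net effect of clouds in a coarse cell from fine-scale simulations can be illustrated with a toy sketch. This is not the project's method, just a minimal example under stated assumptions: `simulate_patch` is a hypothetical stand-in for a high-resolution simulation, a single "humidity" number is an assumed predictor, and a straight-line fit stands in for the machine-learning step.

```python
import random


def coarse_grain(mask, block):
    """Average a fine 2D 0/1 cloud mask over block x block patches.

    Each output value is the cloud fraction of one coarse model cell.
    """
    rows, cols = len(mask), len(mask[0])
    if rows % block or cols % block:
        raise ValueError("mask dimensions must be multiples of block")
    coarse = []
    for r in range(0, rows, block):
        row = []
        for c in range(0, cols, block):
            total = sum(
                mask[i][j]
                for i in range(r, r + block)
                for j in range(c, c + block)
            )
            row.append(total / (block * block))
        coarse.append(row)
    return coarse


def fit_line(xs, ys):
    """Ordinary least squares fit of y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def simulate_patch(humidity, size, rng):
    """Hypothetical toy 'high-resolution simulation' (an assumption).

    Each fine cell is cloudy with probability equal to humidity.
    """
    return [
        [1 if rng.random() < humidity else 0 for _ in range(size)]
        for _ in range(size)
    ]


# Run the toy simulation for a range of conditions, reduce each run to
# one coarse-cell cloud fraction, then fit a cheap rule that a coarse
# model could evaluate instead of resolving individual clouds.
rng = random.Random(0)
humidities = [i / 10 for i in range(1, 10)]
fractions = []
for h in humidities:
    patch = simulate_patch(h, 32, rng)
    fractions.append(coarse_grain(patch, 32)[0][0])

slope, intercept = fit_line(humidities, fractions)
print(f"cloud fraction ~ {slope:.2f} * humidity + {intercept:.2f}")
```

In a real model the fitted rule would be far richer than a line and would be checked against field observations, as the article describes; the sketch only shows the shape of the workflow: simulate finely, average to the coarse cell, then fit.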

Ferrari is already working with the Climate Modeling Alliance (CliMA), a joint modeling effort between MIT, Caltech, and NASA’s Jet Propulsion Laboratory, to develop a next-generation climate model that will harness new, faster supercomputers and machine learning. This “digital twin” of Earth’s systems will be continuously updated with real-time data gleaned from space and ground observations. And it will automatically learn from both the real-time data and high-resolution simulation data.

While the CliMA twin focuses on the physics of the atmosphere, ocean, and land, the CGC project will add biogeochemistry to the model and factor in sea and land ice to improve projections of sea level rise and the impacts of carbon emissions. The CGC team is also developing the capability to automatically launch high-resolution simulations to generate reliable, localized projections of climate impacts and is working on making this improved model accessible and adaptable.

“We want to have some ways for stakeholders to interact with the model as they make decisions,” Ferrari says. “The coast of Massachusetts will experience flooding and more frequent and stronger storms, for example. You have to decide how to protect yourself, where to put your money, how to react.”

But the current models are difficult and time-consuming to run, and they provide information that may not be relevant to local and regional planners.

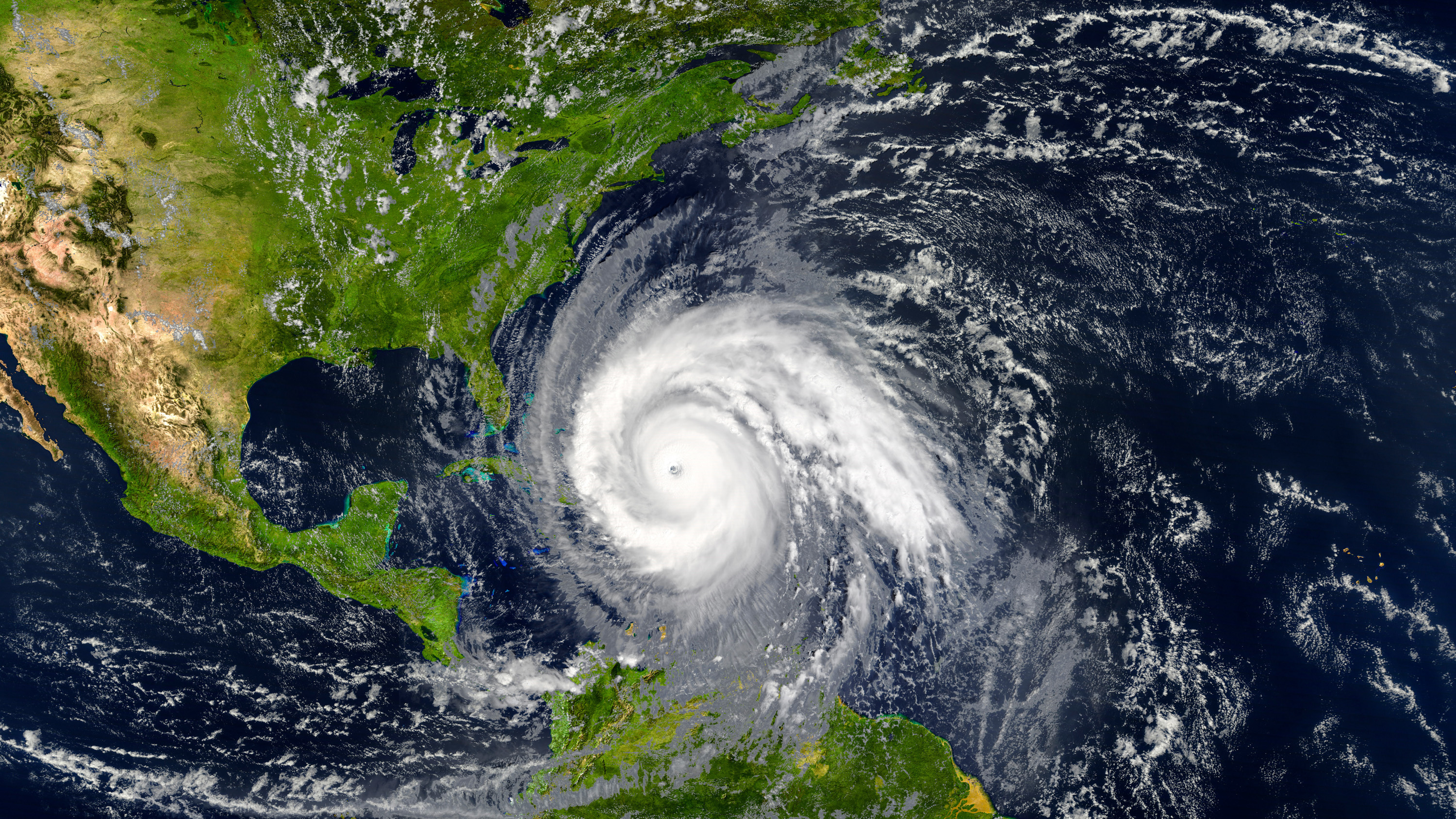

So the team will build emulators—smaller, more efficient climate models—that are based on the full climate system but can be customized to predict variables that are more useful to a particular place. These emulators, or “digital cousins,” will rely on machine learning to automate elements of the large, complex models so they can be used to assess a subset of variables that might influence a specific climate risk. For example, a coastal community in Florida that’s preparing for stronger hurricanes might want to know how carbon dioxide emissions will supercharge storms, or a town in the Southwest surrounded by tinder-dry forest might want to evaluate the impact of regional conservation policies in the face of prolonged drought.
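A rough sense of what an emulator does can be given in a few lines. The sketch below is illustrative only: `full_model` is a hypothetical stand-in for an expensive climate model run, its formula and the emissions units are made up, and simple linear interpolation is used in place of the machine learning the team plans to rely on.

```python
from bisect import bisect_right


def full_model(emissions):
    """Hypothetical stand-in for an expensive model run (an assumption).

    Returns a toy 'warming' value for a given emissions level.
    """
    return 0.5 * emissions + 0.02 * emissions ** 2


def build_emulator(model, training_inputs):
    """Run the expensive model a few times, then return a cheap surrogate.

    The surrogate interpolates linearly between the stored runs and
    refuses to extrapolate beyond the inputs it was built from.
    """
    xs = sorted(set(training_inputs))
    if len(xs) < 2:
        raise ValueError("need at least two distinct training inputs")
    ys = [model(x) for x in xs]

    def emulate(x):
        if not xs[0] <= x <= xs[-1]:
            raise ValueError("input outside the emulator's training range")
        # Index of the right-hand neighbor, clamped to the last point.
        i = min(bisect_right(xs, x), len(xs) - 1)
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    return emulate


# Five expensive runs up front; every later query is a cheap lookup.
emulator = build_emulator(full_model, [0, 5, 10, 15, 20])
print(emulator(7.5), full_model(7.5))
```

The trade-off is the one the article points to: the emulator answers a narrow question quickly, at the cost of some accuracy and only within the range of conditions the full model was run for.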

“We’ll have state-of-the-art climate models that are going to be able to be updated and paired with emulators that we hope, in real time, can be tailored to a particular user—a particular set of outputs—so they can run what they care about,” Selin says. “They’ll have the flexibility to do their own runs of models without relying on teams of researchers far away.”

Within the five-year challenge period, the team plans to launch multiple pilot projects—focusing on, say, projecting heat impacts and air quality, or the consequences of extreme precipitation—and replicate them in other places soon after.

“We have a commitment to making the code available and open source,” Selin says. “We’re hoping that makes it possible for a broad range of users to take it and run with it.”

The team will also develop an undergraduate course on computational thinking and climate science that will use its new software platform. “We must train a new generation of students to be prepared to face the challenges posed by a changing climate,” Ferrari says.

Getting the tools in the hands of those who will decide where and how to protect communities is urgent, Selin adds; it needs to happen before the weather changes driven by global warming accelerate. “There have been a lot of computational advances, and advances in climate science, that aren’t necessarily getting taken up as quickly as they could be,” she says. “Our vision is really quite straightforward: democratize the information available from climate models.”

This story is part of a series, MIT Climate Grand Challenges: Hacking climate change.
