How we covered the evolution of computing

To flip through the archives of MIT Technology Review is to see the development of the computer unfold as it happened.

February 1969

From “Man, Machine, and Information Flight Systems”: The flight of Apollo 8 to the moon involved obtaining and processing more bits of data than were used by all fighting forces in World War II. The technological achievement in developing advanced rockets for flying to the moon is reasonably well known. Much less understood, but perhaps of even greater significance, is the information management system. The work of thousands of people in real time, and the data processed by many powerful computers, is organized, processed, filtered, and channeled through one to three people in the cockpit in understandable and digestible form. With this information the pilots can take action with confidence knowing that they are in league with powerful logic systems and an overwhelmingly large number of cells of memory storage.

February 1986

From “The Multiprocessor Revolution: Harnessing Computers Together”: By harnessing many relatively inexpensive VLSI processors together into a multiprocessor system we may significantly reduce the cost of achieving today’s fastest computing speeds. Many of us harbor expectations that this new breed of machines will make possible some of our most romantic and ambitious aspirations: these new machines may recognize images, understand speech, and behave more intelligently. Even anthropomorphic evidence suggests that if computers are to perform intelligently, many processors must work together. Consider the human eye, where millions of neurons cooperate to help us see. What arrogant reasoning led us to believe that a single processor capable of only a few million instructions per second could ever exhibit intelligence?

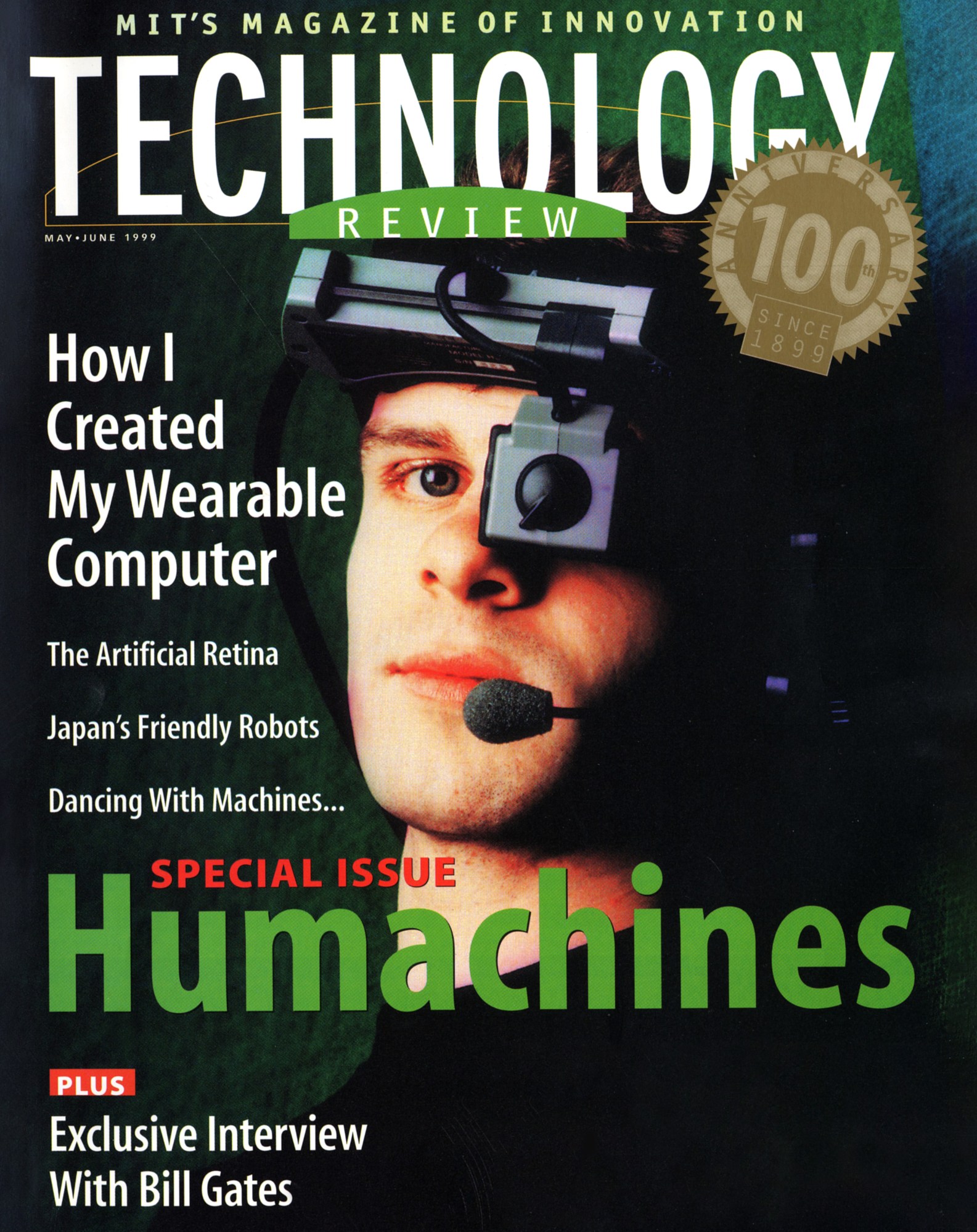

May 1999

From “Cyborg Seeks Community”: People find me peculiar. They think it’s odd that I spend most of my waking hours wearing eight or nine Internet-connected computers sewn into my clothing and that I wear opaque wrap-around glasses day and night, inside and outdoors. They find it odd that to sustain wireless communications during my travels, I will climb to the hotel roof to rig my room with an antenna and Internet connection. They wonder why I sometimes seem detached and lost, but at other times I exhibit vast knowledge of their specialty. A physicist once said he felt that I had the intelligence of a dozen experts in his discipline; a few minutes later, someone else said they thought I was mentally handicapped. Despite the peculiar glances I draw, I wouldn’t live any other way.
