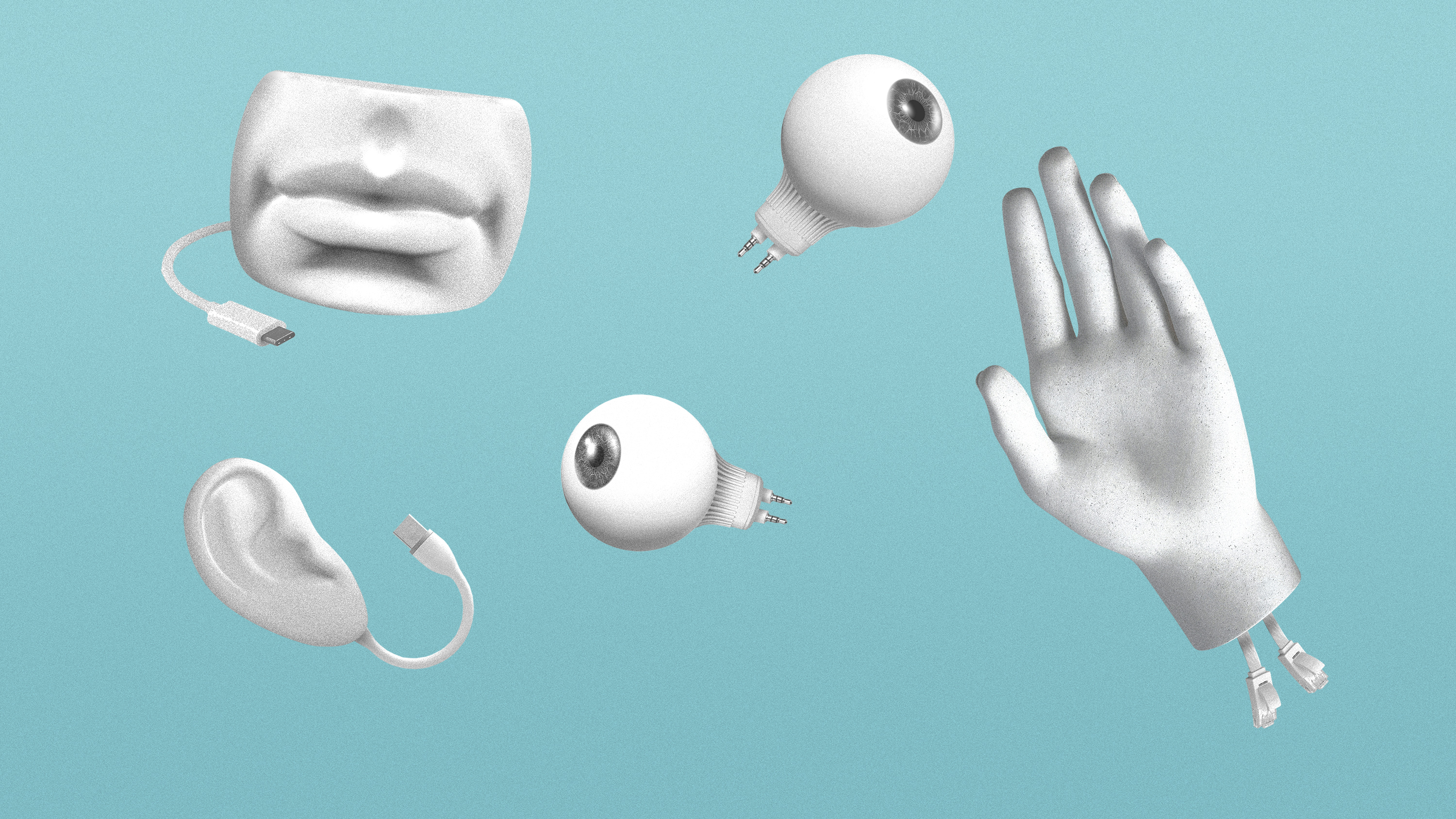

AI armed with multiple senses could gain more flexible intelligence

Human intelligence emerges from our combination of senses and language abilities. Maybe the same is true for artificial intelligence.

Why it matters: AI that can sense and speak will be much better at navigating new challenges and working alongside people.

Key players:
• OpenAI
• AI2
• Facebook

Availability: Now

In late 2012, AI scientists first figured out how to get neural networks to “see.” They proved that software designed to loosely mimic the human brain could dramatically improve existing computer-vision systems. The field has since learned how to get neural networks to imitate the way we reason, hear, speak, and write.

But while AI has grown remarkably human-like—even superhuman—at achieving a specific task, it still doesn’t capture the flexibility of the human brain. We can learn skills in one context and apply them to another. By contrast, though DeepMind’s game-playing algorithm AlphaGo can beat the world’s best Go masters, it can’t extend that strategy beyond the board. Deep-learning algorithms, in other words, are masters at picking up patterns, but they cannot understand and adapt to a changing world.

Researchers have many hypotheses about how this problem might be overcome, but one in particular has gained traction. Children learn about the world by sensing and talking about it. The combination seems key. As kids begin to associate words with sights, sounds, and other sensory information, they are able to describe more and more complicated phenomena and dynamics, tease apart what is causal from what reflects only correlation, and construct a sophisticated model of the world. That model then helps them navigate unfamiliar environments and put new knowledge and experiences in context.

AI systems, on the other hand, are built to do only one of these things at a time. Computer-vision and audio-recognition algorithms can sense things but cannot use language to describe them. A natural-language model can manipulate words, but the words are detached from any sensory reality. If senses and language were combined to give an AI a more human-like way to gather and process new information, could it finally develop something like an understanding of the world?

The hope is that these “multimodal” systems, with access to both the sensory and linguistic “modes” of human intelligence, should give rise to a more robust kind of AI that can adapt more easily to new situations or problems. Such algorithms could then help us tackle more complex problems, or be ported into robots that can communicate and collaborate with us in our daily life.

New advances in language-processing algorithms like OpenAI’s GPT-3 have helped. Researchers now understand how to replicate language manipulation well enough to make combining it with sensing capabilities potentially more fruitful. To start with, they are using the very first sensing capability the field achieved: computer vision. The results are simple bimodal models, or visual-language AI.

In the past year, there have been several exciting results in this area. In September 2020, researchers at the Allen Institute for Artificial Intelligence, AI2, created a model that can generate an image from a text caption, demonstrating the algorithm’s ability to associate words with visual information. In November 2020, researchers at the University of North Carolina, Chapel Hill, developed a method that incorporates images into existing language models, which boosted the models’ reading comprehension.

OpenAI then used these ideas to extend GPT-3. At the start of 2021, the lab released two visual-language models. One, CLIP, links the objects in an image to the words that describe them in a caption. The other, DALL·E, generates images based on a combination of the concepts it has learned. You can prompt it, for example, to produce “a painting of a capybara sitting in a field at sunrise.” Though it may never have seen such an image before, it can mix and match what it knows of paintings, capybaras, fields, and sunrises to dream up dozens of examples.

More sophisticated multimodal systems will also make possible more advanced robotic assistants (think robot butlers, not just Alexa). The current generation of AI-powered robots primarily uses visual data to navigate and interact with its surroundings. That’s good for completing simple tasks in constrained environments, like fulfilling orders in a warehouse. But labs like AI2 are working to add language and incorporate more sensory inputs, like audio and tactile data, so the machines can understand commands and perform more complex operations, like opening a door when someone is knocking.

In the long run, multimodal breakthroughs could help overcome some of AI’s biggest limitations. Experts argue, for example, that its inability to understand the world is also why it can easily fail or be tricked. (An image can be altered in a way that’s imperceptible to humans but makes an AI identify it as something completely different.) Achieving more flexible intelligence wouldn’t just unlock new AI applications: it would make them safer, too. Algorithms that screen résumés wouldn’t treat irrelevant characteristics like gender and race as signs of ability. Self-driving cars wouldn’t lose their bearings in unfamiliar surroundings and crash in the dark or in snowy weather. Multimodal systems might become the first AIs we can really trust with our lives.
