These virtual robot arms get smarter by training each other

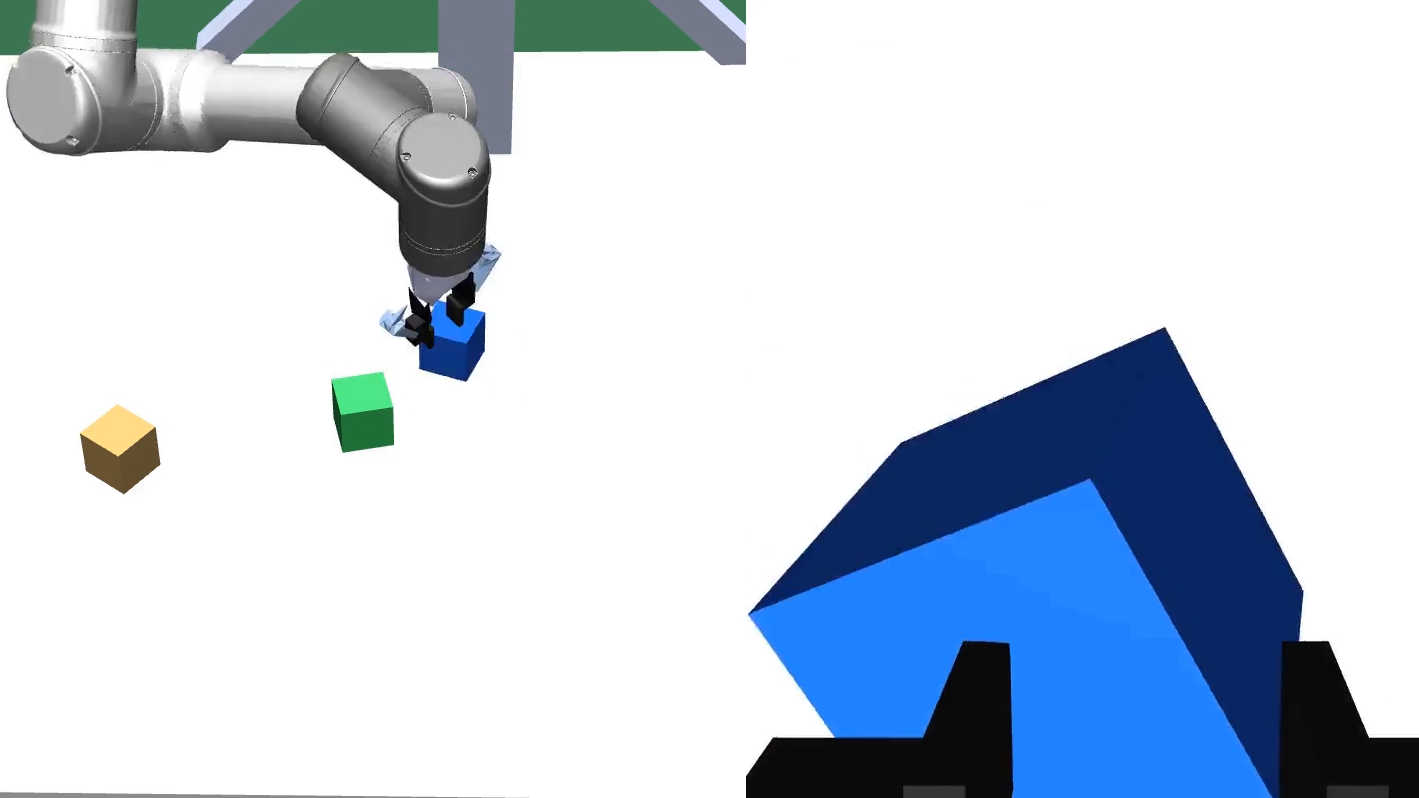

A virtual robot arm has learned to solve a wide range of different puzzles—stacking blocks, setting the table, arranging chess pieces—without having to be retrained for each task. It did this by playing against a second robot arm that was trained to give it harder and harder challenges.

Self play: Developed by researchers at OpenAI, the identical robot arms, Alice and Bob, learn by playing a game against each other in a simulation, without human input. The robots use reinforcement learning, a technique in which AIs learn by trial and error which actions to take in different situations to achieve certain goals. The game involves moving objects around on a virtual tabletop. By arranging objects in specific ways, Alice tries to set puzzles that are hard for Bob to solve. Bob tries to solve Alice’s puzzles. As they learn, Alice sets more complex puzzles and Bob gets better at solving them.
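To make the back-and-forth concrete, here is a deliberately tiny sketch of a setter-and-solver loop. It is not OpenAI's method and it involves no reinforcement learning or robot simulation: puzzle difficulty and Bob's skill are reduced to single integers, and the update rules (Alice raises the difficulty after Bob succeeds, Bob improves by one step after he fails) are assumptions chosen only to illustrate how the two roles can push each other along. The names `self_play`, `alice_level` and `bob_skill` are hypothetical.

```python
def self_play(rounds):
    """Toy setter/solver loop; returns a log of (puzzle, solved, bob_skill)."""
    alice_level = 1  # difficulty of the puzzle Alice will set next (assumed integer scale)
    bob_skill = 0    # hardest difficulty Bob can currently solve
    log = []
    for _ in range(rounds):
        puzzle = alice_level
        solved = puzzle <= bob_skill
        if solved:
            # The puzzle was too easy to trouble Bob, so Alice sets a harder one next.
            alice_level += 1
        else:
            # Bob failed; in this toy, practice on the failed puzzle raises his skill by one.
            bob_skill += 1
        log.append((puzzle, solved, bob_skill))
    return log


if __name__ == "__main__":
    for puzzle, solved, skill in self_play(6):
        outcome = "solved" if solved else "failed"
        print(f"puzzle difficulty {puzzle}: Bob {outcome}, skill is now {skill}")
```

In this sketch Alice never gets far ahead of Bob: each puzzle is at most one step beyond what he can already do, so the difficulty rises only as fast as he improves.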

Multitasking: Deep-learning models typically have to be retrained between tasks. For example, AlphaZero (which also learns by playing games against itself) uses a single algorithm to teach itself to play chess, shogi and Go—but only one game at a time. The chess-playing AlphaZero cannot play Go and the Go-playing one cannot play shogi. Building machines that really can multitask is a big unsolved problem on the road to more general AI.

AI dojo: One issue is that training an AI to multitask requires a vast number of examples. OpenAI avoids this by training Alice to generate the examples for Bob, using one AI to train another. Alice learned to set goals such as building a tower of blocks, then picking it up and balancing it. Bob learned to use properties of the (virtual) environment, such as friction, to grasp and rotate objects.

Virtual reality: So far the approach has been tested only in a simulation, but researchers at OpenAI and elsewhere are getting better at transferring models trained in virtual environments to physical ones. A simulation lets AIs churn through large datasets in a short amount of time, before being fine-tuned for real-world settings.

Overall ambition: The researchers say that their ultimate aim is to train a robot to solve any task that a person might ask it to. Like GPT-3, a language model that can use language in a wide variety of different ways, these robot arms are part of OpenAI’s overall ambition to build a multitasking AI. Using one AI to train another could be a key part of that.
