Sponsored

A new horizon: Expanding the AI landscape

Organizations are using AI to drive business and improve processes. That’s spurring changes in how they’re deploying the technology and governing its use.

In association with Arm

For all of its upheaval, the deadly 2020 coronavirus pandemic, and efforts to stop it, has taught a valuable lesson: organizations that invest in technology survive. IT infrastructure initiatives put in place before the crisis have allowed countless businesses to shift to online commerce and remote working. In other words, they have been able to keep operating during it.

The pandemic has taught a similar lesson about artificial intelligence (AI): Organizations are either on the right track with their AI strategies or, if anything, need to dramatically step up the pace of investment. Boston Children’s Hospital chief information officer Dan Nigrin points out that AI applications that promote telehealth, for example, “are not necessarily covid-related, but certainly the pandemic has accelerated the consideration and use of these kinds of tools.”

In a recent MIT Technology Review Insights survey of 301 business and technology leaders, 38% report their AI investment plans are unchanged as a result of the pandemic, and 32% indicate the crisis has accelerated their plans. The percentages of unchanged and revved-up AI plans are greater at organizations that had an AI strategy already in place.

Consumers and business decision-makers are realizing there are many ways that AI augments human effort and experience. Technology leaders in most organizations regard AI as a critical capability that has accelerated efforts to increase operational efficiency, gain deeper insight about customers, and shape new areas of business innovation.

AI is not a new addition to the corporate technology arsenal: 62% of survey respondents are using AI technologies. Respondents from larger organizations (those with more than $500 million in annual revenue) have, at nearly 80%, higher deployment rates. Small organizations (with less than $5 million in revenue) are at 58%, slightly below the average.

But most organizations haven’t developed plans to guide them: a little more than a third (35%) of respondents indicate that they’re developing their AI capabilities under the auspices of a formal strategy. AI plans are more common at big organizations (42%), and even small businesses are, at 38%, slightly above the mean.

Of those without current AI deployments, a quarter say they will deploy the technology in the next two years, and less than 15% indicate no plans at all. Here, the divide between large and small widens: less than 5% of big organizations have no AI plans, compared with 18% of smaller ones.

More applications are moving closer to the source

Increasingly, organizations are moving their IT infrastructure to cloud-based resources—for myriad reasons, including cost-efficiency and computing performance. At energy management company Schneider Electric, the cloud has been imperative “not only to transform our company digitally but also to transform our customers’ businesses digitally,” says Ibrahim Gokcen, who was until recently chief technology officer at Schneider. “It was a clear, strategic area of investment for us before the crisis.”

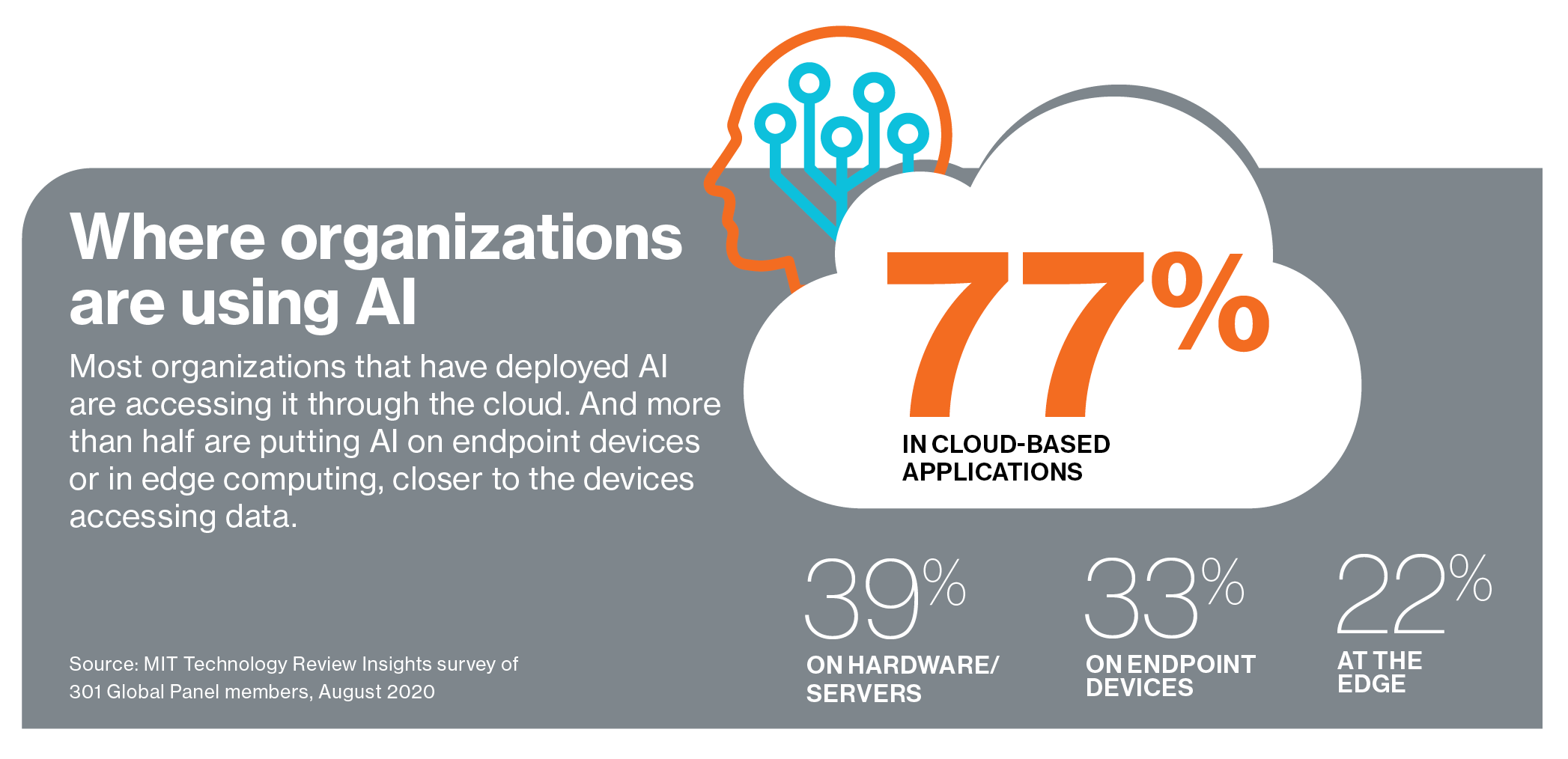

As such, it is unsurprising that most organizations are putting AI in the cloud: 77% are deploying cloud-based AI applications. That makes cloud resources far more popular than hosting on servers or directly on endpoint devices, such as laptops or smartphones.

Cloud-based AI also allows organizations to operate in an ecosystem of collaborators that includes application developers, analytics companies, and customers themselves. Nigrin describes how the cloud allows one of Boston Children’s Hospital’s partners, Israeli medical technology developer DreaMed Diabetes, to “inject AI smarts” into remote insulin management. First, patients upload insulin-pump or glucometer data to the cloud. “The patient provides access to that data to the hospital, which in turn uses software—also in the cloud—to crunch the data and use their algorithmic approach to propose tweaks to the insulin regimen that that patient is on,” offering tremendous time savings and added insight for physicians.

But while the cloud provides significant AI-fueled advantages for organizations, an increasing number of applications have to make use of the infrastructural capabilities of the “edge,” the intermediary computing layer between the cloud and the devices that need computational power. The advantage is that these computing and storage resources, housed in edge servers, are closer to a device than cloud computing’s data centers, which can be thousands of miles away. That means latency is lower, so if someone uses a device to access an application, the time delay will be minimal. And while edge computing doesn’t have the infinite scalability of the cloud, it’s mighty enough to handle data-hungry applications like AI.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.
