The hack that could make face recognition think someone else is you

Researchers have demonstrated that they can fool a modern face recognition system into seeing someone who isn’t there.

A team from the cybersecurity firm McAfee set up the attack against a facial recognition system similar to those currently used at airports for passport verification. By using machine learning, they created an image that looked like one person to the human eye, but was identified as somebody else by the face recognition algorithm—the equivalent of tricking the machine into allowing someone to board a flight despite being on a no-fly list.

“If we go in front of a live camera that is using facial recognition to identify and interpret who they're looking at and compare that to a passport photo, we can realistically and repeatedly cause that kind of targeted misclassification,” said the study’s lead author, Steve Povolny.

How it works

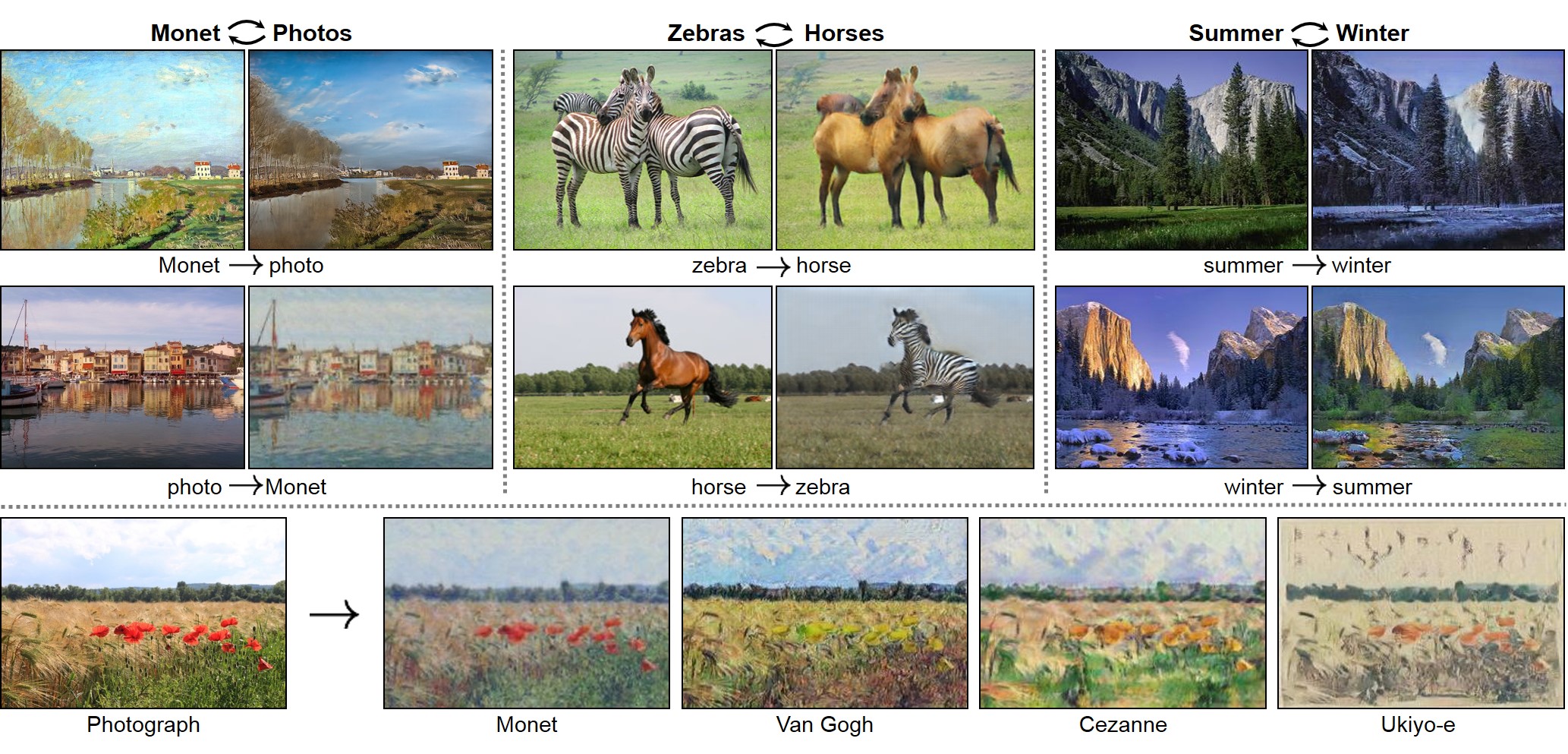

To misdirect the algorithm, the researchers used an image translation algorithm known as CycleGAN, which excels at morphing photographs from one style into another. For example, it can make a photo of a harbor look as if it were painted by Monet, or make a photo of mountains taken in the summer look like it was taken in the winter.

The McAfee team used 1,500 photos of each of the project’s two leads and fed the images into a CycleGAN to morph them into one another. At the same time, they ran the facial recognition algorithm on the CycleGAN’s generated images to see whom it recognized. After generating hundreds of images, the CycleGAN eventually created a faked image that looked like person A to the naked eye but fooled the face recognition system into identifying it as person B.
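The generate-and-check loop described above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not McAfee's code: each "image" is just four numbers, the candidates are written by hand instead of coming from a trained CycleGAN, and `REFERENCES`, `MODEL_WEIGHTS`, `HUMAN_WEIGHTS`, `nearest_identity`, and `find_misclassified` are all hypothetical names. The only point is the filter: keep a candidate when a human-like comparison says "A" while the model-like comparison says "B".

```python
import math

# Hypothetical reference "photos": each image is just four numbers here.
REFERENCES = {"A": [0.0, 0.0, 0.0, 0.0], "B": [4.0, 4.0, 4.0, 4.0]}

# Assumption: the model leans heavily on one feature, while a person
# weighs every feature equally. Real systems are far more complex.
MODEL_WEIGHTS = [0.1, 0.1, 0.1, 3.0]
HUMAN_WEIGHTS = [1.0, 1.0, 1.0, 1.0]


def weighted_distance(u, v, weights):
    """Weighted Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum(w * (a - b) ** 2 for a, b, w in zip(u, v, weights)))


def nearest_identity(image, weights):
    """Name of the reference photo closest to `image` under `weights`."""
    return min(
        REFERENCES,
        key=lambda name: weighted_distance(image, REFERENCES[name], weights),
    )


def find_misclassified(candidates, looks_like, recognized_as):
    """Return the first candidate that 'looks like' one person to the
    human-style comparison but is matched to another by the model-style
    comparison, or None if no candidate qualifies."""
    for image in candidates:
        human_says = nearest_identity(image, HUMAN_WEIGHTS)
        model_says = nearest_identity(image, MODEL_WEIGHTS)
        if human_says == looks_like and model_says == recognized_as:
            return image
    return None


# Hand-written stand-ins for the hundreds of generated images.
candidates = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 3.0],
    [4.0, 4.0, 4.0, 4.0],
]
fake = find_misclassified(candidates, looks_like="A", recognized_as="B")
print(fake)
```

In this toy setup the third candidate is the one that passes the filter: it stays close to person A overall but shifts the single feature the model weights most heavily.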

While the study raises clear concerns about the security of face recognition systems, there are some caveats. First, the researchers didn’t have access to the actual system that airports use to identify passengers and instead approximated it with a state-of-the-art, open-source algorithm. “I think for an attacker that is going to be the hardest part to overcome,” Povolny says, “where [they] don’t have access to the target system.” Nonetheless, given the high similarities across face recognition algorithms, he thinks it’s likely that the attack would work even on the actual airport system.

How GANs work

Generative adversarial networks, or GANs, are a class of algorithms that pit neural networks against each other to generate better results.

In a traditional GAN, there are only two networks: a generator that trains on a data set, say, summer landscapes, to spit out more summer landscapes; and a discriminator that compares the generated landscapes against the same data set to decide whether they’re real or fake.
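One common way to express that tug-of-war is with two loss values computed from the discriminator's scores. The sketch below assumes the discriminator outputs a probability between 0 and 1 that an image is real, and it uses the widely used binary cross-entropy formulation; the function names are illustrative, and no actual networks are trained here.

```python
import math


def binary_cross_entropy(prediction, label):
    """Loss for one probability `prediction` against a 0/1 `label`."""
    eps = 1e-12  # keep log() away from zero
    p = min(max(prediction, eps), 1 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


def discriminator_loss(scores_on_real, scores_on_fake):
    """The discriminator wants real images scored 1 and fakes scored 0."""
    real = [binary_cross_entropy(s, 1) for s in scores_on_real]
    fake = [binary_cross_entropy(s, 0) for s in scores_on_fake]
    return (sum(real) + sum(fake)) / (len(real) + len(fake))


def generator_loss(scores_on_fake):
    """The generator wants its fakes to be scored as real (label 1)."""
    losses = [binary_cross_entropy(s, 1) for s in scores_on_fake]
    return sum(losses) / len(losses)


# Early in training: the discriminator easily spots the fakes.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]), generator_loss([0.1, 0.2]))

# Later: the discriminator can only guess, so it scores everything 0.5.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss([0.5, 0.5]))
```

When the discriminator can do no better than a coin flip, both losses settle at the same value, which is the point at which the fakes are effectively indistinguishable from the real images.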

CycleGAN modifies this process by having two generators and two discriminators. There are also two image sets, such as summer landscapes and winter landscapes, representing the types of photos you’d like to translate between.

This time the first generator trains on images of the summer landscapes with the goal of trying to generate winter landscapes. The second generator, meanwhile, trains on images of the winter landscapes to generate summer ones. Both discriminators once again work hard to catch the fakery until the faked landscapes are indistinguishable from the real ones.
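The "cycle" in the name refers to an extra check that is not spelled out above: in the original CycleGAN design, an image translated from summer to winter and back again should end up close to where it started. A minimal sketch of that round-trip penalty follows, assuming images are short lists of brightness values and using trivial hypothetical functions (`to_winter`, `to_summer`) in place of trained generators.

```python
def to_winter(image):
    """Hypothetical summer-to-winter generator: darken every pixel."""
    return [pixel - 0.3 for pixel in image]


def to_summer(image):
    """Hypothetical winter-to-summer generator: brighten every pixel."""
    return [pixel + 0.3 for pixel in image]


def mean_abs_diff(u, v):
    """Average absolute per-pixel difference between two images."""
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)


def cycle_consistency_loss(summer_image, winter_image, g, f):
    """Penalty for round trips that fail to return to the starting image.

    g maps summer -> winter, f maps winter -> summer.
    """
    forward = mean_abs_diff(f(g(summer_image)), summer_image)
    backward = mean_abs_diff(g(f(winter_image)), winter_image)
    return forward + backward


summer = [0.8, 0.7, 0.9]
winter = [0.4, 0.5, 0.3]

# Generators that undo each other give a loss of (almost exactly) zero.
good = cycle_consistency_loss(summer, winter, to_winter, to_summer)

# If the return generator does nothing, the round trip drifts by 0.3 each way.
bad = cycle_consistency_loss(summer, winter, to_winter, lambda image: list(image))
print(good, bad)
```

In a full CycleGAN this penalty is added to the two adversarial losses, so each generator has to fool its discriminator while still preserving enough of the input to be translated back.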

The second caveat is that such an attack currently requires a lot of time and resources. CycleGANs need powerful computers and specialist expertise to train and run.

But face recognition systems and automated passport control are increasingly used for airport security around the world, a shift that has been accelerated by the covid-19 pandemic and the desire for touchless systems. The technology is also already widely used by governments and corporations in areas such as law enforcement, hiring, and event security, although many groups have called for a moratorium on such developments, and some cities have banned the technology.

There are other technical attempts to subvert face recognition. A University of Chicago team recently released Fawkes, a tool meant to “cloak” faces by slightly altering your photos on social media so as to fool the AI systems relying on scraped databases of billions of such pictures. Researchers from the AI firm Kneron also showed how masks can fool the face recognition systems already in use around the world.

The McAfee researchers say their goal is ultimately to demonstrate the inherent vulnerabilities in these AI systems and make clear that human beings must stay in the loop.

“AI and facial recognition are incredibly powerful tools to assist in the pipeline of identifying and authorizing people,” Povolny says. “But when you just take them and blindly replace an existing system that relies entirely on a human without having some kind of a secondary check, then you all of a sudden have introduced maybe a greater weakness than you had before.”
