AI still doesn’t have the common sense to understand human language

Natural-language processing has taken great strides recently—but how much does AI really understand of what it reads? Less than we thought.

Until pretty recently, computers were hopeless at producing sentences that actually made sense. But the field of natural-language processing (NLP) has taken huge strides, and machines can now generate convincing passages with the push of a button.

These advances have been driven by deep-learning techniques, which pick out statistical patterns in word usage and argument structure from vast troves of text. But a new paper from the Allen Institute for Artificial Intelligence (AI2) calls attention to something still missing: machines don’t really understand what they’re writing (or reading).

This is a fundamental challenge in the grand pursuit of generalizable AI—but beyond academia, it’s relevant for consumers, too. Chatbots and voice assistants built on state-of-the-art natural-language models, for example, have become the interface for many financial institutions, health-care providers, and government agencies. Without a genuine understanding of language, these systems are more prone to fail, slowing access to important services.

The researchers built on the Winograd Schema Challenge, a test created in 2011 to evaluate the common-sense reasoning of NLP systems. The challenge uses a set of 273 questions involving pairs of sentences that are identical except for one word. That word, known as a trigger, changes which noun the pronoun in each sentence refers to, as seen in the example below:

- The trophy doesn’t fit into the brown suitcase because it’s too large.

- The trophy doesn’t fit into the brown suitcase because it’s too small.

To succeed, an NLP system must figure out which of two options the pronoun refers to. In this case, it would need to select “trophy” for the first and “suitcase” for the second to correctly solve the problem.
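To make the task format concrete, here is a minimal Python sketch of how the sentence pair above could be represented and scored. The `WinogradItem` class, the `accuracy` function, and the `always_first` baseline are hypothetical names chosen for illustration; they are not taken from the paper or from any benchmark toolkit.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class WinogradItem:
    """One sentence from a Winograd-style pair (illustrative structure only)."""
    sentence: str
    pronoun: str
    options: Tuple[str, str]  # the two candidate referents
    answer: str               # the referent a human would pick


def accuracy(items: List[WinogradItem],
             resolver: Callable[[WinogradItem], str]) -> float:
    """Fraction of items for which the resolver picks the correct referent."""
    correct = sum(1 for item in items if resolver(item) == item.answer)
    return correct / len(items)


# The example pair from the article: only the trigger word differs.
PAIR = [
    WinogradItem(
        sentence="The trophy doesn't fit into the brown suitcase because it's too large.",
        pronoun="it",
        options=("trophy", "suitcase"),
        answer="trophy",
    ),
    WinogradItem(
        sentence="The trophy doesn't fit into the brown suitcase because it's too small.",
        pronoun="it",
        options=("trophy", "suitcase"),
        answer="suitcase",
    ),
]


def always_first(item: WinogradItem) -> str:
    """A baseline that ignores the sentence and always picks the first option."""
    return item.options[0]
```

Because the correct referent flips between the two sentences, a resolver that ignores the trigger word, such as `always_first`, gets exactly one of the two right. That is the property the paired design relies on.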

The test was originally designed with the idea that such problems couldn’t be answered without a deeper grasp of semantics. State-of-the-art deep-learning models can now reach around 90% accuracy, so it would seem that NLP has gotten closer to its goal. But in their paper, which will receive the Outstanding Paper Award at next month’s AAAI conference, the researchers challenge the effectiveness of the benchmark and, thus, the level of progress that the field has actually made.

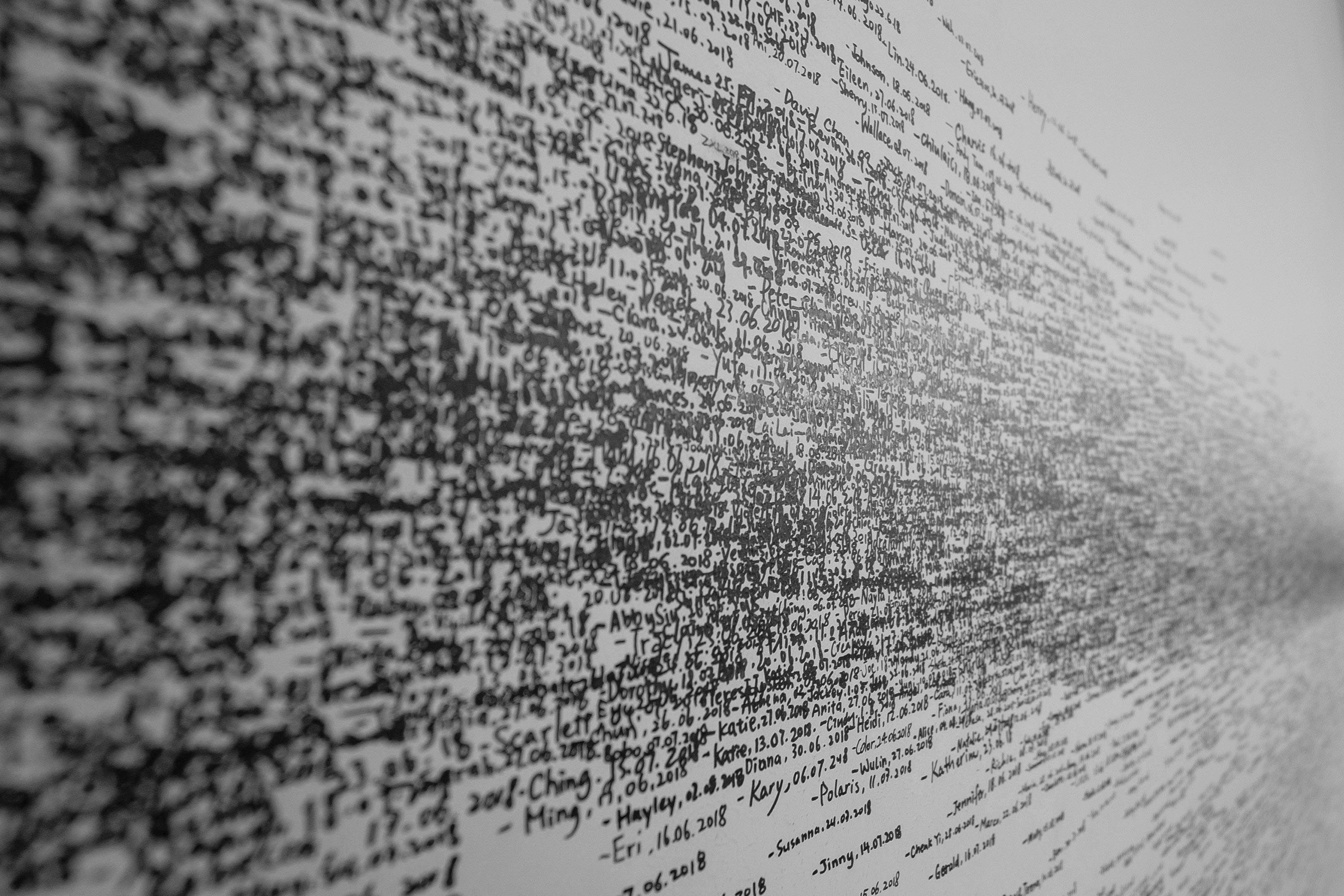

They created a significantly larger data set, dubbed WinoGrande, with 44,000 of the same types of problems. To do so, they designed a crowdsourcing scheme to quickly create and validate new sentence pairs. (Part of the reason the Winograd data set is so small is that it was hand-crafted by experts.) Workers on Amazon Mechanical Turk created new sentences with required words selected through a randomization procedure. Each sentence pair was then given to three additional workers and kept only if it met three criteria: at least two workers selected the correct answers, all three deemed the options unambiguous, and the pronoun’s references couldn’t be deduced through simple word associations.
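The three acceptance criteria can be read as a simple filter. The Python sketch below is one way to express them, not the researchers’ actual pipeline: the `Validation` record and `keep_pair` function are hypothetical, and the word-association check is passed in as a plain boolean because the article does not say how it was carried out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Validation:
    """One validator's judgment of a candidate sentence pair (hypothetical record)."""
    chose_correct: bool      # the worker selected the correct answers
    found_unambiguous: bool  # the worker judged the options unambiguous


def keep_pair(validations: List[Validation],
              solvable_by_word_association: bool) -> bool:
    """Apply the three criteria described in the article to one sentence pair."""
    if len(validations) != 3:
        raise ValueError("each pair is reviewed by exactly three workers")
    # Criterion 1: at least two of the three workers answered correctly.
    majority_correct = sum(v.chose_correct for v in validations) >= 2
    # Criterion 2: all three workers judged the options unambiguous.
    all_unambiguous = all(v.found_unambiguous for v in validations)
    # Criterion 3: the answer cannot be deduced from simple word associations.
    return majority_correct and all_unambiguous and not solvable_by_word_association
```

For example, a pair that two workers answer correctly and all three consider unambiguous is kept, but it is dropped if even one worker finds the options ambiguous or if word associations alone give the answer away.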

As a final step, the researchers also ran the data set through an algorithm to remove as many “artifacts” as possible—unintentional data patterns or correlations that could help a language model arrive at the right answers for the wrong reasons. This reduced the chance that a model could learn to game the data set.

When they tested state-of-the-art models on these new problems, performance fell to between 59.4% and 79.1%. By contrast, humans still reached 94% accuracy. This suggests that high scores on the original Winograd test are likely inflated and overstate how much common sense the models have acquired. “It’s just a data-set-specific achievement, not a general-task achievement,” says Yejin Choi, an associate professor at the University of Washington and a senior research manager at AI2, who led the research.

Choi hopes the data set will serve as a new benchmark. But she also hopes it will inspire more researchers to look beyond deep learning. The results emphasized to her that true common-sense NLP systems must incorporate other techniques, such as structured knowledge models. Her previous work has shown significant promise in this direction. “We somehow need to find a different game plan,” she says.

The paper has received some criticism. Ernest Davis, one of the researchers who worked on the original Winograd challenge, says that many of the example sentence pairs listed in the paper are “seriously flawed,” with confusing grammar. “They don’t correspond to the way that people speaking English actually use pronouns,” he wrote in an email.

But Choi notes that truly robust models shouldn’t need perfect grammar to understand a sentence. People who speak English as a second language sometimes mix up their grammar but still convey their meaning.

“Humans can easily understand what our questions are about and select the correct answer,” she says, referring to the 94% performance accuracy. “If humans should be able to do that, my position is that machines should be able to do that too.”
