Welcome to robot university (only robots need apply)

One of the unsung heroes of the AI revolution is a little-known database called ImageNet. Created by researchers at Princeton University, ImageNet contains some 14 million images, each of them annotated by crowdsourced text that explains what the image shows.

ImageNet is important because it is the database that many of today’s powerful neural networks cut their teeth on. Neural networks learn by looking at the images and accompanying text—and the bigger the database, the better they learn. Without ImageNet and other visual data sets like it, even the most powerful neural networks would be unable to recognize anything.

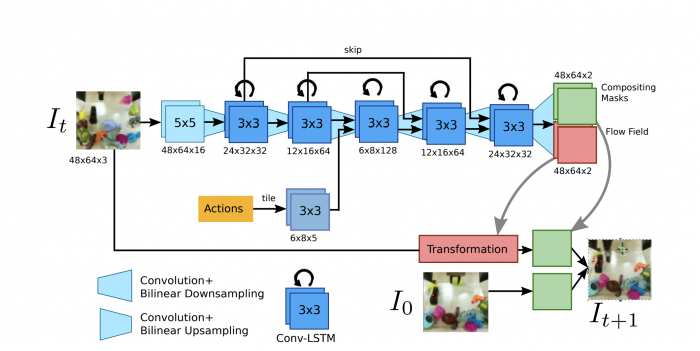

Now roboticists say they want to try a similar approach with video to teach their charges how to interact with the environment. Sudeep Dasari at the University of California, Berkeley, and colleagues are creating a database called RoboNet, consisting of annotated video data of robots in action. For example, the data might include numerous instances of a robot moving a cup across a table. The idea is that anybody can download this data and use it to train a robot’s neural network to move a cup too, even if it has never interacted with a cup before.

Dasari and co hope to build their database into a resource that can pre-train almost any robot to do almost any task—a kind of robot university, which the team calls RoboNet.

Until now, roboticists have had limited success in teaching their charges how to navigate and interact with the environment. Their approach is the standard machine-learning technique that ImageNet helped popularize.

They start by recording the way a robot interacts with, say, a brush to move it across a surface. Then they take many more videos of its motion and use the data to train a neural network on how best to perform the action.

The trick, of course, is to have lots of data: in other words, countless hours of video to learn from. And once a robot has mastered brush-moving, it must go through the same learning procedure to move almost anything else, be it a spoon or a pair of spectacles. If the environment changes, these learning systems generally have to start all over again.

“The common practice of re-collecting data from scratch for every new environment essentially means re-learning basic knowledge about the world—an unnecessary effort,” say Dasari and co.

RoboNet gets around this. “We propose RoboNet, an open database for sharing robotic experience,” they say. So any robot can learn from the experience of another.

To kick-start the database, the team has already recorded some 15 million video frames of tasks using seven different types of robot with different grippers in a variety of environments.

Dasari and co go on to show how to use this database to pre-train robots for tasks they have never before attempted. And they say robots trained with this approach perform better than those that have been conventionally trained on even more data.

The RoboNet data is available for anyone to use. And of course, Dasari and co hope other research teams will start contributing their own data to make RoboNet a vast resource of robo-learning.

That’s impressive work that has significant potential. “This work takes the first step towards creating robotic agents that can operate in a wide range of environments and across different hardware,” say the team.

Of course, there are significant challenges ahead. For example, researchers must work out how best to use the data—the jury is still out on the most effective training regimes. “We hope that RoboNet will inspire the broader robotics and reinforcement learning communities to investigate how to scale reinforcement learning algorithms to meet the complexity of the real world,” they say.

The result is both impressive and thought-provoking: a kind of robot university that can give any robot the skills it needs to learn.

ImageNet has been a key factor in making machine vision as good as humans at recognizing objects. If RoboNet is only half as successful, it will be an impressive gain.

Ref: arxiv.org/abs/1910.11215 : RoboNet: Large-Scale Multi-Robot Learning
