Facebook should at least label lying political ads

Yesterday, Facebook revealed its plan for fighting disinformation ahead of the 2020 US election. It includes spending $2 million on a media literacy project, making it easier to research political ads, and using more prominent fact-checking labels. Each step is commendable, but it all seems hypocritical coming from a company that refuses to do anything about political ads that contain false information.

The message seems to be that Facebook is very concerned with preventing falsehoods, but only when they are spread by regular users and not by the people who might be elected to positions of real power. At the same time, CEO Mark Zuckerberg was right when he said during a speech last week that “I don’t think most people want to live in a world where you can only post things that tech companies judge to be 100% true.”

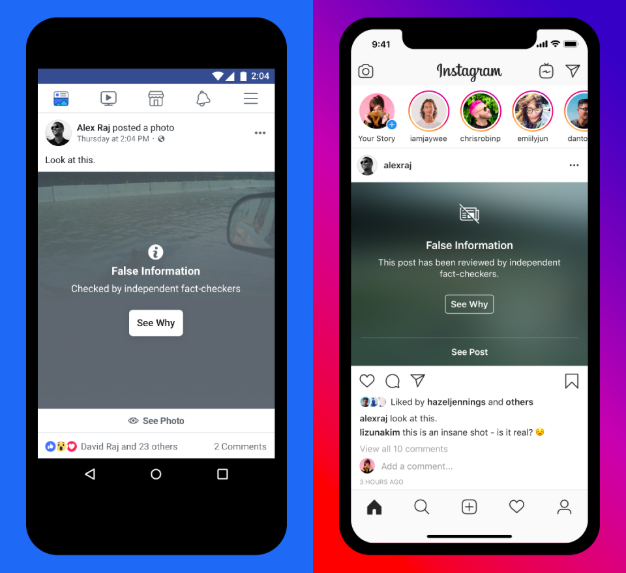

But there’s a middle ground between Facebook deciding what everyone is allowed to see and letting politicians lie as they wish. Facebook should revisit its policy of not touching political content and instead put one of those new, prominent labels on top of political ads that contain false information (like the Trump campaign ad that lied about Joe Biden, or the fake Facebook ad that Elizabeth Warren bought to goad Zuckerberg). That way, the company can keep the ads up without letting falsehoods spread unnoticed, which is especially important because political ads are often microtargeted at communities that might be most likely to believe them.

To be clear, Facebook’s third-party fact-checking program has not been a panacea for the problem of disinformation. An enormous amount of content is posted every day, far too much for everything to be fact-checked. There are people who won’t trust the fact-checkers, and so a label is meaningless to them.

Facebook’s own execution leaves much to be desired as well. In July, the fact-checking platform Full Fact, one of Facebook’s partners, released a report criticizing the company for not sharing enough data and not responding quickly enough to content flagged as false. But to the extent that fact-checking is valuable (and the Full Fact report concluded that it was), political ads should be among the most carefully fact-checked, not the least.

Zuckerberg argues that the company avoids fact-checking politicians “because we think people should be able to see for themselves what politicians are saying.” But most people are not going to bother to fact-check a political ad or seek out journalism elsewhere debunking it. As a result, Facebook’s hands-off policy is not actually neutral. It favors, and helps support, candidates who have no qualms about lying and spreading conspiracy theories. The worst players win.

Having a specific fact-checking team dedicated to political ads could address many of these issues. Facebook already knows which ads are paid for by political campaigns. It’s not an endless content stream. Fact-checking ads wouldn’t make Facebook a censor. It also wouldn’t “prevent a politician’s speech from reaching its audience,” as Facebook spokesperson Nick Clegg fears. It would ensure that the people who come across the ad are able to “see for themselves” what politicians are saying, and also see for themselves which politicians are comfortable with bald-faced lies.
