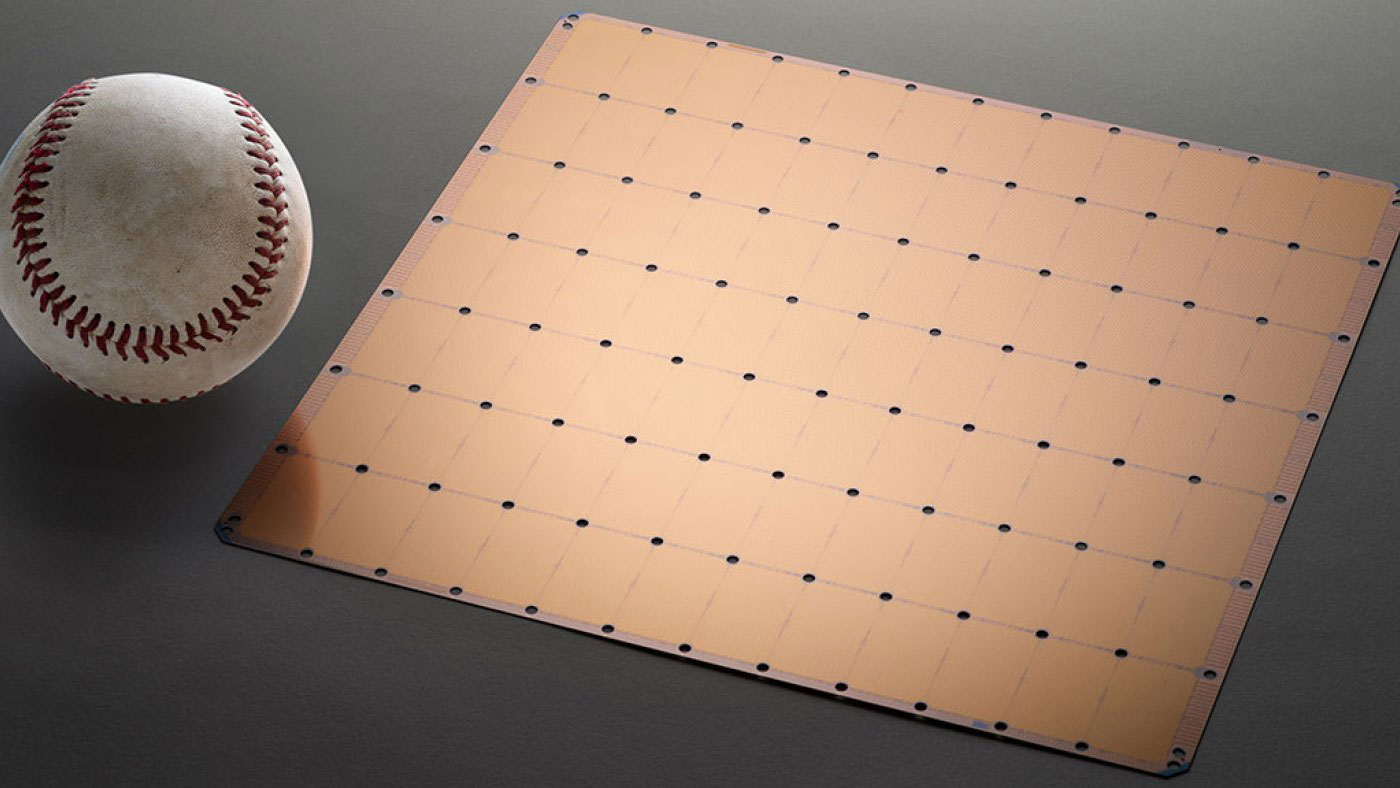

The world’s biggest chip is bigger than an iPad and will help train AI

Cerebras Systems’ new semiconductor boasts 1.2 trillion transistors—and will turbocharge AI applications.

The news: At the Hot Chips conference in Silicon Valley this week, Cerebras is unveiling the Cerebras Wafer Scale Engine. The chip is almost 57 times bigger than Nvidia’s largest graphics processing unit, or GPU, and boasts 3,000 times the on-chip memory. GPUs are silicon workhorses that power many of today’s AI applications, crunching the data needed to train AI models.

Does size matter? The world’s semiconductor companies have spent decades developing ever tinier chips. These can be bundled together to create super-powerful processors, so why create a standalone AI mega-chip?

The answer, according to Cerebras, is that hooking lots of small chips together creates latencies that slow down training of AI models—a huge industry bottleneck. The company’s chip boasts 400,000 cores, or parts that handle processing, tightly linked to one another to speed up data-crunching. It can also shift data between processing and memory incredibly fast.

Fault tolerance: But if this monster chip is going to conquer the AI world, it will have to show it can overcome some big hurdles. One of these is in manufacturing. If impurities sneak into a wafer being used to make lots of tiny chips, some of these may not be affected; but if there’s only one mega-chip on a wafer, the entire thing may have to be tossed. Cerebras claims it’s found innovative ways to ensure that impurities won’t jeopardize an entire chip, but we don’t yet know if these will work in mass production.

Power play: Another challenge is energy efficiency. AI chips are notoriously power hungry, which has both economic and environmental implications. Shifting data between lots of tiny AI chips is a huge power suck, so Cerebras should have an advantage here. If it can help crack this energy challenge, then the startup’s chip could prove that for AI, big silicon really is beautiful.

