A new tool uses AI to spot text written by AI

AI algorithms can generate text convincing enough to fool the average human—potentially providing a way to mass-produce fake news, bogus reviews, and phony social accounts. Thankfully, AI can now be used to identify fake text, too.

The news: Researchers from Harvard University and the MIT-IBM Watson AI Lab have developed a new tool for spotting text that has been generated using AI. Called the Giant Language Model Test Room (GLTR), it exploits the fact that AI text generators rely on statistical patterns in text, as opposed to the actual meaning of words and sentences. In other words, the tool can tell if the words you’re reading seem too predictable to have been written by a human hand.

The context: Misinformation is increasingly being automated, and the technology required to generate fake text and imagery is advancing fast. AI-powered tools like this one may become valuable weapons in the fight against fake news, deepfakes, and Twitter bots.

Faking it: Researchers at OpenAI recently demonstrated an algorithm capable of dreaming up surprisingly realistic passages. They fed huge amounts of text into a large machine-learning model, which learned to pick up statistical patterns in those words. The Harvard team developed their tool using a version of the OpenAI code that was released publicly.

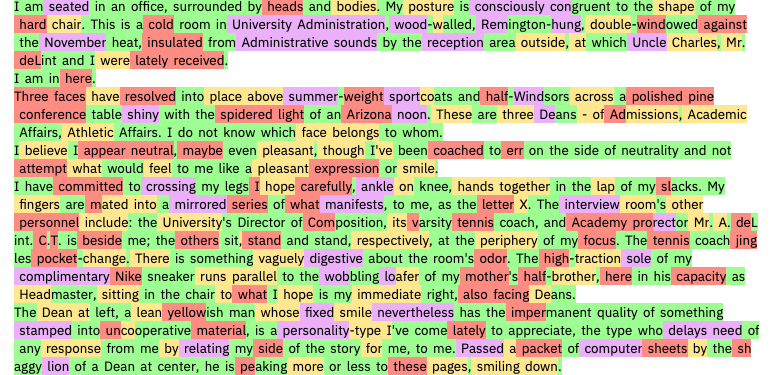

How predictable: GLTR highlights each word according to how likely a language model judges it to be, given the text that precedes it. As shown in the passage above (from Infinite Jest), the most predictable words are green; less predictable ones are yellow and red; and the least predictable are purple. When tested on snippets of text written by OpenAI's algorithm, the tool finds a lot of predictability. Genuine news articles and scientific abstracts contain more surprises.
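To make the color-coding concrete, here is a minimal sketch of the idea, not GLTR's actual code. It makes two assumptions: a toy bigram word-count model stands in for the large OpenAI model (so each word is judged only against the single word before it, not the whole preceding passage), and the rank cut-offs of 10, 100, and 1,000 for green, yellow, and red are illustrative thresholds. The helper names `train_bigram`, `rank_of`, and `highlight` are hypothetical.

```python
from collections import Counter, defaultdict

# Illustrative rank cut-offs (an assumption): top-10 green, top-100 yellow,
# top-1000 red; anything ranked lower, or never predicted, is purple.
BUCKETS = [(10, "green"), (100, "yellow"), (1000, "red")]


def train_bigram(corpus_tokens):
    """Count how often each token follows each preceding token.

    A toy stand-in for a large language model's next-word predictions.
    """
    follow = defaultdict(Counter)
    for prev, nxt in zip(corpus_tokens, corpus_tokens[1:]):
        follow[prev][nxt] += 1
    return follow


def rank_of(follow, prev, word):
    """Return the 1-based rank of `word` among predictions after `prev`, or None."""
    ranked = [w for w, _ in follow.get(prev, Counter()).most_common()]
    return ranked.index(word) + 1 if word in ranked else None


def color(rank):
    """Map a prediction rank to a highlight color."""
    if rank is None:
        return "purple"
    for limit, name in BUCKETS:
        if rank <= limit:
            return name
    return "purple"


def highlight(follow, tokens):
    """Pair every token after the first with its predictability color."""
    return [(w, color(rank_of(follow, p, w))) for p, w in zip(tokens, tokens[1:])]


corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
print(highlight(model, "the cat sat".split()))  # [('cat', 'green'), ('sat', 'green')]
print(highlight(model, "the dog".split()))      # [('dog', 'purple')]
```

Text that is mostly green reads as "too predictable", which is the signal the researchers use to flag machine-generated passages; a real detector would swap the bigram counts for a large model's full-context word rankings.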

Mind and machine: The researchers behind GLTR also carried out a second experiment. They asked Harvard students to identify AI-generated text, first without the tool and then with the help of its highlighting. On their own, the students spotted only half of the fakes; with the tool, they spotted 72%. "Our goal is to create human and AI collaboration systems," says Sebastian Gehrmann, a PhD student involved in the work.

If you're interested, you can try it out for yourself.
