Superconducting neurons could match the power efficiency of the brain

The human brain is by far the most impressive computing device known to science. The brain operates at a clock speed of just a few hertz, snail-like in comparison to modern microprocessors that run at gigahertz speeds.

But it gets its power by carrying out many calculations at the same time—a billion billion calculations per second. This parallelism allows it to solve problems with ease that conventional computers have yet to tackle: driving, walking, conversing, and so on.

More impressive still is that it does all this powered by little more than a bowl of porridge. By contrast, the world’s most powerful supercomputers use more power than large towns.

That’s why computer scientists want to copy the computing performance of the human brain using neural networks as computational workhorses.

That’s easier said than done. Ordinary chips can be programmed to behave like neural networks, but this is computationally demanding and energy draining.

Instead, computer scientists want to build artificial neurons and connect them together in brain-like networks. That has the potential to be significantly more energy efficient, but nobody has come up with a design that comes close to the efficiency of the brain.

Until today. Enter Emily Toomey at MIT and a couple of colleagues, who have designed a superconducting neuron made from nanowires that in many ways behaves like a real one. They say their device matches the energy efficiency of the brain (at least in theory) and is the building block of a new generation of superconducting neural networks that will be vastly more efficient than conventional computing machinery.

First some background. Neurons encode information in the form of electrical spikes, or action potentials, that travel along the length of the nerve. In brain-like networks, neurons are separated from each other by gaps called synapses.

The information can jump across these synapses, thereby influencing other neurons, causing them to fire or inhibiting them in a way that prevents them from firing. Indeed, this allows neurons to act like logic gates, producing a single output in response to multiple inputs.
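To make the logic-gate comparison concrete, here is a minimal sketch of a textbook threshold unit. It is not the circuit from the paper; the function names, weights, and thresholds are all illustrative assumptions. Positive weights stand in for excitatory inputs and negative weights for inhibitory ones.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both excitatory inputs are needed to reach the threshold.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # Either excitatory input alone is enough.
    return threshold_neuron([a, b], [1, 1], threshold=1)


def a_and_not_b(a, b):
    # The second input is inhibitory: it cancels the first and prevents firing.
    return threshold_neuron([a, b], [1, -1], threshold=1)
```

Each gate produces a single output from multiple inputs, and the inhibitory weight in `a_and_not_b` shows how one input can stop the unit from firing.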

Biological neurons have a number of important properties that make this possible. For example, they do not fire unless the input signal exceeds some threshold level, and they cannot fire again until a certain time has passed, a stretch known as the refractory period. And the time for a spike to travel along an axon, the long fiber that carries a neuron’s output, is important too, since it encodes the distance the spike has traveled.
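The threshold and refractory period can be illustrated with a simple leaky integrate-and-fire model. This is a generic sketch, not Toomey and co's nanowire circuit, and the parameter names and default values (`threshold`, `leak`, `refractory_steps`) are assumptions chosen for illustration only.

```python
def simulate_neuron(input_current, threshold=1.0, leak=0.9, refractory_steps=3):
    """Return a list of 0/1 spikes, one per time step of input_current."""
    potential = 0.0        # accumulated input, a stand-in for membrane potential
    refractory_left = 0    # time steps remaining before the neuron may fire again
    spikes = []
    for current in input_current:
        if refractory_left > 0:
            # Refractory period: input is ignored and no spike is possible.
            refractory_left -= 1
            spikes.append(0)
            continue
        # Leak a little of the stored potential, then add the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0                  # reset after firing
            refractory_left = refractory_steps
        else:
            spikes.append(0)
    return spikes
```

With a strong constant input such as `simulate_neuron([2.0] * 9)`, the model fires, stays silent for three steps, and then fires again. A weak input such as `[0.05] * 20` never reaches the threshold, so it produces no spikes at all.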

An artificial neuron must be able to reproduce as many of these features as possible. That usually requires some complex circuitry.

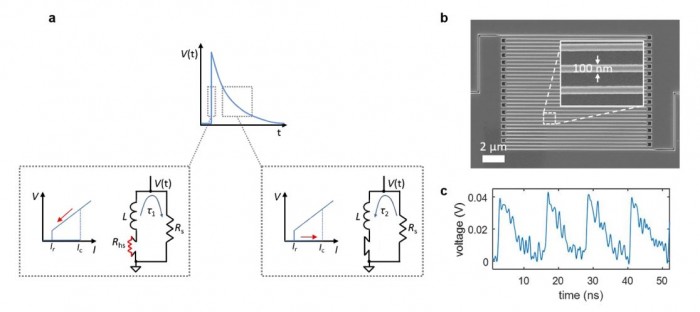

But Toomey and co point out that superconducting nanowires have a peculiar nonlinear property that allows them to act like neurons. This property comes about because the nanowire’s superconductivity breaks down when the current flowing through it exceeds some threshold value.

When this happens, the resistance suddenly increases, creating a voltage pulse. This pulse is analogous to the action potential in a neuron. Using it to modulate yet another pulse produced by a second superconducting nanowire makes the simulation even more realistic.

This creates a simple superconducting circuit that has many of the properties of biological neurons. Toomey and co have shown that a superconducting neuron has a firing threshold, a refractory period, and a travel time that can be adjusted by tuning the circuit, among other features.

Crucially, this superconducting neuron can also be used to trigger or inhibit other neurons. And this “fanout” property is key to creating networks. That’s something other superconducting neuron designs have never been able to achieve.

And because superconducting circuits use very little power, Toomey and co’s calculations suggest that this kind of superconducting neural network could match the efficiency of biological neural networks.

The figure of merit is the number of synaptic operations that the neural network can perform each second using a watt of power. Toomey and co say that their proposed network should be able to match the human brain in managing roughly 10^14 synaptic operations per second per watt. “The nanowire neuron can be a highly competitive technology from a power and speed prospective,” they say.
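Because a watt is one joule per second, "operations per second per watt" is the same as operations per joule, so the figure of merit can be inverted to give the energy cost of a single synaptic operation. The short sketch below does that conversion; the function name is hypothetical and only the 10^14 figure comes from the article.

```python
def energy_per_operation_joules(ops_per_second_per_watt):
    """Invert the figure of merit: joules spent per synaptic operation."""
    # 1 W = 1 J/s, so ops/s/W is ops per joule; its reciprocal is J per op.
    return 1.0 / ops_per_second_per_watt


figure_of_merit = 1e14  # synaptic operations per second per watt (from the article)
joules = energy_per_operation_joules(figure_of_merit)
femtojoules = joules * 1e15  # 1 fJ = 1e-15 J

print(f"{joules:.1e} J per synaptic operation (about {femtojoules:.0f} fJ)")
```

At the quoted figure, each synaptic operation costs on the order of 10 femtojoules.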

Of course, there are limitations. Perhaps the most significant is that the superconducting neuron can connect to just a handful of other neurons. By contrast, each neuron in the human brain connects to thousands of neighbors. And for the moment, Toomey and co’s design remains just that—a design.

Nevertheless, the simulations are promising. “The analysis performed here suggests that the nanowire neuron is a promising candidate for the advancement of low-power artificial neural networks,” say the team.

And the potential is significant. Toomey and co say superconducting neurons could be the basis of entirely new computer hardware in the form of superconducting neural network chips. These chips could be networked together using superconducting interconnects, which would dissipate no heat.

“The result would be a large-scale neuromorphic processor which could be trained as a spiking neural network to perform tasks like pattern recognition or used to simulate the spiking dynamics of a large, biologically-realistic network,” they say.

That’s interesting work, although it needs a proof-of-principle demonstration before the excitement can begin to build.

Ref: arxiv.org/abs/1907.00263 : A Power Efficient Artificial Neuron Using Superconducting Nanowires
