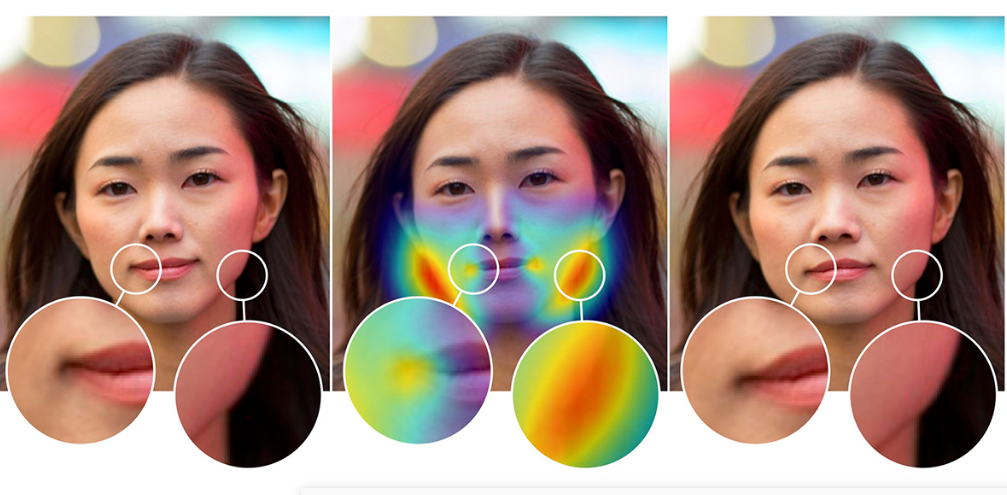

Adobe’s new AI tool can spot when a face has been Photoshopped

It was nearly twice as good as humans at identifying manipulated images.

The research: Researchers from Adobe and UC Berkeley have created a tool that uses machine learning to identify when photos of people’s faces have been altered. The deep-learning tool was trained on thousands of images scraped from the internet. In a series of experiments, it correctly identified edited faces 99% of the time, compared with a 53% success rate for humans.

The context: There’s growing concern over the spread of fake images and “deepfake” videos. However, machine learning could be a useful weapon in the detection (as well as the creation) of fakes.

Some caveats: It’s understandable that Adobe wants to be seen acting on this issue, given that its own products are used to alter pictures. The downside is that this tool works only on images that were altered using Adobe Photoshop’s Face Aware Liquify feature.

It's just a prototype, but the company says it plans to take this research further and provide tools to identify and discourage the misuse of its products across the board.

This story first appeared in our daily newsletter The Download.

