Deepfakes may be a useful tool for spies

A spy may have used an AI-generated face to deceive and connect with targets on social media.

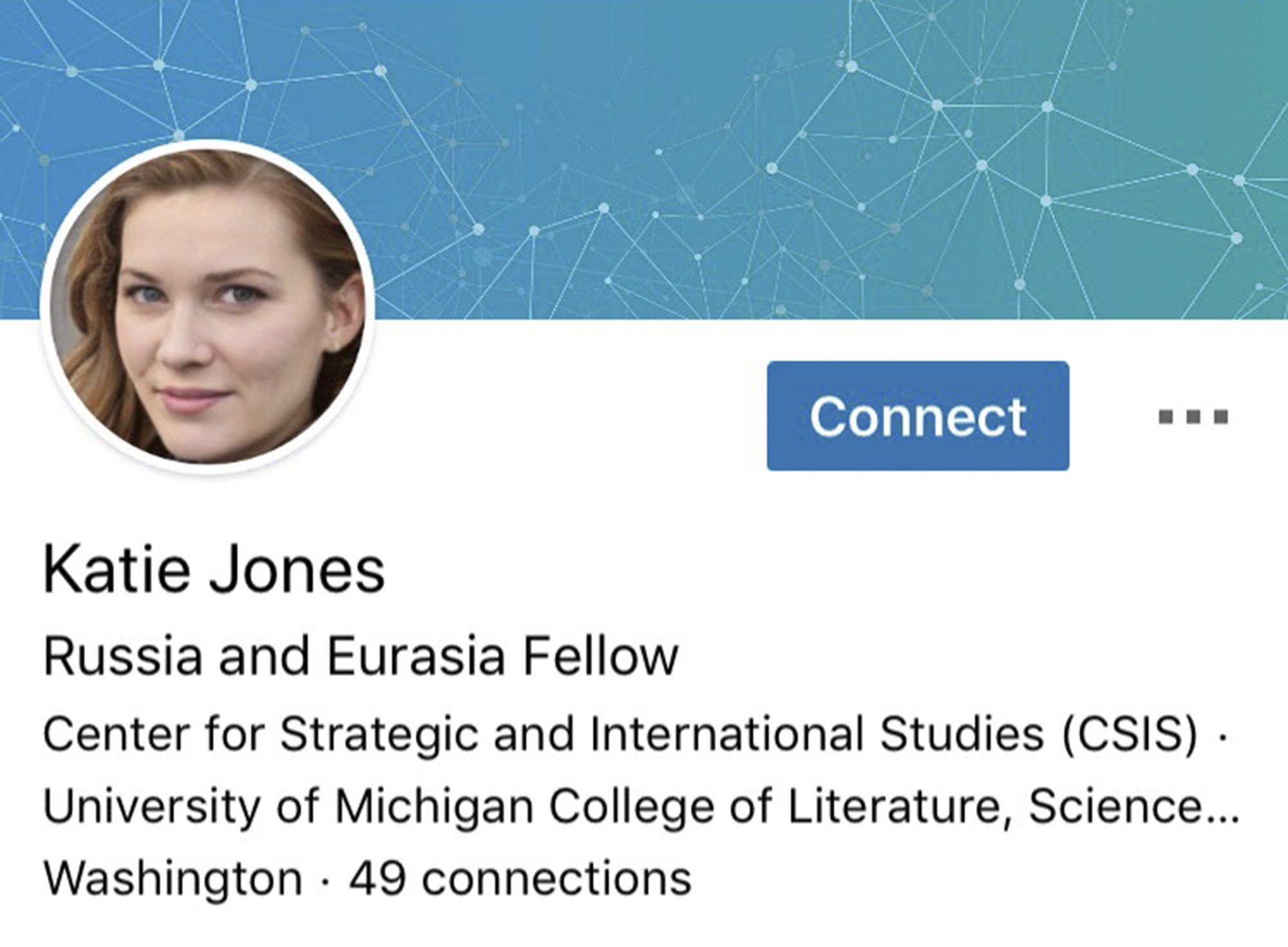

The news: A LinkedIn profile under the name Katie Jones has been identified by the AP as a likely front for AI-enabled espionage. The persona is networked with several high-profile figures in Washington, including a deputy assistant secretary of state, a senior aide to a senator, and an economist being considered for a seat on the Federal Reserve. But what’s most fascinating is the profile image: it demonstrates all the hallmarks of a deepfake, according to several experts who reviewed it.

Easy target: LinkedIn has long been a magnet for spies because it gives easy access to people in powerful circles. Agents will routinely send out tens of thousands of connection requests, pretending to be different people. Only last month, a retired CIA officer was sentenced to 20 years in prison for leaking classified information to a Chinese agent who made contact by posing as a recruiter on the platform.

Weak defense: So why did “Katie Jones” take advantage of AI? Because an AI-generated face defeats an important line of defense against impostors: running a reverse image search on the profile photo. A synthesized face has no earlier copy online, so the search finds nothing to expose the profile as fake. It’s yet another way that deepfakes are eroding our trust in truth as they rapidly advance into the mainstream.


