An AI for generating fake news could also help detect it

Last month OpenAI rather dramatically withheld the release of its newest language model, GPT-2, because it feared it could be used to automate the mass production of misinformation. The decision also accelerated the AI community’s ongoing discussion about how to detect this kind of fake news. In a new experiment, researchers at the MIT-IBM Watson AI Lab and HarvardNLP considered whether the same language models that can write such convincing prose can also spot other model-generated passages.

The idea behind this hypothesis is simple: language models produce sentences by predicting the next word in a sequence of text. So if they can easily predict most of the words in a given passage, it’s likely it was written by one of their own.

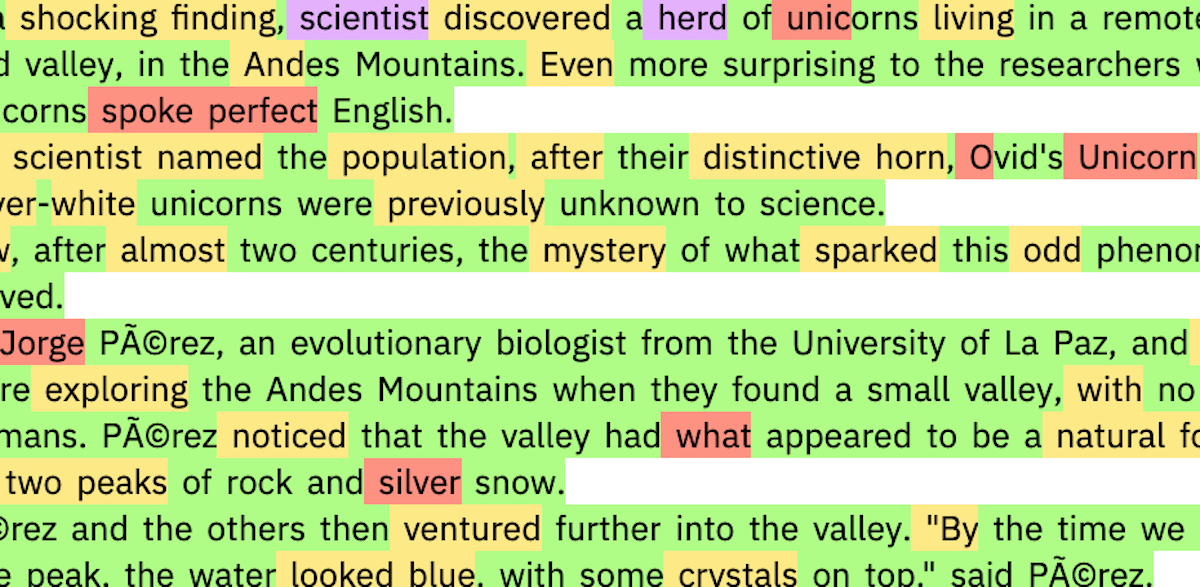

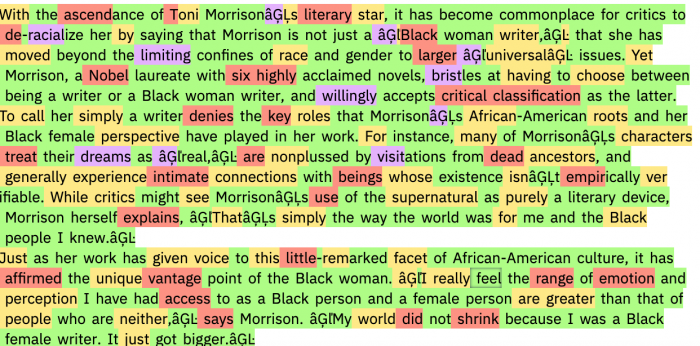

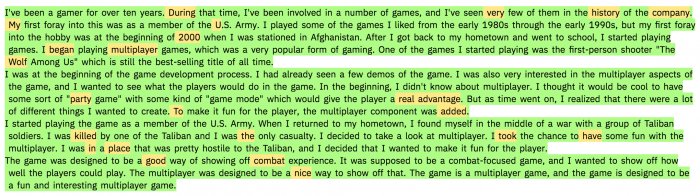

The researchers tested their idea by building an interactive tool based on the publicly accessible downgraded version of OpenAI’s GPT-2. When you feed the tool a passage of text, it highlights the words in green, yellow, or red to indicate decreasing ease of predictability; it highlights them in purple if it wouldn’t have predicted them at all. In theory, the higher the fraction of red and purple words, the higher the chance the passage was written by a human; the greater the share of green and yellow words, the more likely it was written by a language model.

Indeed, the researchers found that passages written by the downgraded and full versions of GPT-2 came out almost completely green and yellow, while scientific abstracts written by humans and text from reading comprehension passages in US standardized tests had lots of red and purple.

But not so fast. Janelle Shane, a researcher who runs the popular blog Letting Neural Networks Be Weird and who was uninvolved in the initial research, put the tool to a more rigorous test. Rather than just feed it text generated by GPT-2, she fed it passages written by other language models as well, including one trained on Amazon reviews and another trained on Dungeons and Dragons biographies. She found that the tool failed to predict a large chunk of the words in each of these passages, and thus it assumed they were human-written. This identifies an important insight: a language model might be good at detecting its own output, but not necessarily the output of others.

This story originally appeared in our AI newsletter The Algorithm. To have it directly delivered to your inbox, sign up here for free.

Deep Dive

Artificial intelligence

Large language models can do jaw-dropping things. But nobody knows exactly why.

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

Google DeepMind’s new generative model makes Super Mario–like games from scratch

Genie learns how to control games by watching hours and hours of video. It could help train next-gen robots too.

What’s next for generative video

OpenAI's Sora has raised the bar for AI moviemaking. Here are four things to bear in mind as we wrap our heads around what's coming.

Stay connected

Get the latest updates from

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.