Revealing the invisible

Small imperfections in a wine glass or tiny creases in a contact lens can be tricky to make out, even in good light. But MIT engineers have developed a machine-learning technique that can reveal these “invisible” features and objects in the dark.

The key was a neural network, a type of software that can be trained to associate certain inputs with specific outputs—in this case, dark, grainy images of transparent objects and the objects themselves.

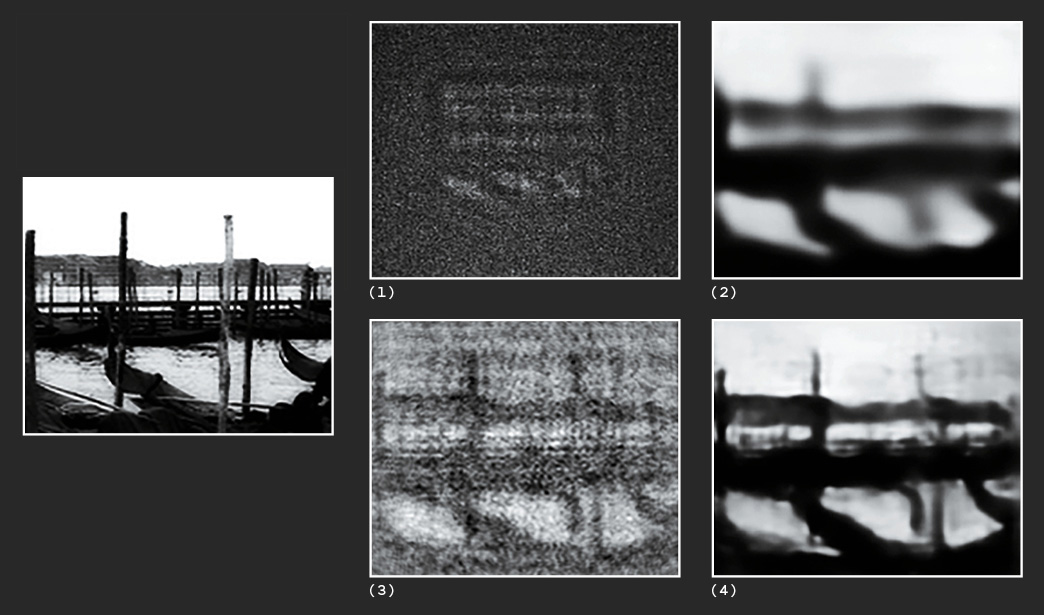

The team fed the network extremely grainy images of more than 10,000 transparent etching patterns from integrated circuits. The images were taken in very low lighting conditions, with about one photon per pixel—far less light than a camera would register in a dark, sealed room. Then they showed the neural network a new grainy image, not included in the training data, and found that it was able to reconstruct the transparent object that the darkness had obscured.
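
To get a feel for what “about one photon per pixel” means, the sketch below simulates a photon-starved exposure. It rescales a clean image to a mean of one photon per pixel and draws each pixel’s count from a Poisson distribution, the standard model for shot noise. The test pattern and the function name `photon_limited` are illustrative assumptions, not the researchers’ code.

```python
import numpy as np

def photon_limited(image, photons_per_pixel=1.0, seed=None):
    """Simulate a photon-starved exposure of a nonnegative intensity image.

    The image is rescaled so its mean equals `photons_per_pixel`, then each
    pixel's photon count is drawn from a Poisson distribution (shot noise).
    """
    image = np.asarray(image, dtype=float)
    if np.any(image < 0) or image.mean() <= 0:
        raise ValueError("expected a nonnegative image with positive mean")
    expected = image * (photons_per_pixel / image.mean())
    rng = np.random.default_rng(seed)
    return rng.poisson(expected)

# Hypothetical test pattern: a brighter square on a uniform background.
clean = np.ones((64, 64))
clean[24:40, 24:40] = 2.0
noisy = photon_limited(clean, photons_per_pixel=1.0, seed=0)

print(noisy.mean())         # close to 1 photon per pixel on average
print((noisy == 0).mean())  # a large share of pixels record no photon at all
```

At a mean of one photon, the standard deviation of each pixel’s count equals its mean, so a single exposure is dominated by shot noise.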

The researchers set their camera to take images slightly out of focus, which provides evidence, in the form of ripples in the detected light, that a transparent object may be present.
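
Why defocus helps can be seen in a small simulation. A transparent object changes mainly the phase of light, which a camera cannot record, so in focus the intensity is flat; after a short stretch of free-space propagation, those phase differences turn into intensity ripples. The sketch assumes an angular-spectrum model of propagation, a disc-shaped phase object, and made-up optical parameters; `propagate` is our own hypothetical helper, not part of the researchers’ method.

```python
import numpy as np

def propagate(field, distance, wavelength, pixel_size):
    """Propagate a sampled complex field by `distance` with the angular-spectrum method."""
    n, m = field.shape
    FX, FY = np.meshgrid(np.fft.fftfreq(m, d=pixel_size), np.fft.fftfreq(n, d=pixel_size))
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    k = 2 * np.pi / wavelength
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Free-space transfer function (phase measured relative to the on-axis wave);
    # evanescent components (arg <= 0) are discarded.
    transfer = np.where(arg > 0, np.exp(1j * (kz - k) * distance), 0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical transparent object: unit amplitude everywhere, with a 0.5 rad
# phase step inside a disc of radius 20 pixels (think of a shallow etch).
N, pixel, wavelength = 256, 1e-6, 0.5e-6   # 1 µm pixels, 500 nm light (assumed)
y, x = np.mgrid[:N, :N] - N / 2
phase = 0.5 * ((x**2 + y**2) < 20**2)
field = np.exp(1j * phase)

in_focus = np.abs(field) ** 2                                          # camera in focus
defocused = np.abs(propagate(field, 200e-6, wavelength, pixel)) ** 2   # 200 µm out of focus

print(in_focus.std())    # ~0: intensity is uniform, so the disc is invisible
print(defocused.std())   # clearly nonzero: ripples now outline the disc
```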

But defocusing also creates blur, which can muddy a neural network’s computations. To produce a sharper, more accurate image, the researchers built into the neural network a law of physics that describes how light blurs when a camera is defocused.
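
The article does not say exactly how the law is built into the network. One common pattern in computational imaging, assumed here purely for illustration, is to apply the known physics first: propagate the measured light back through the defocus distance to get a rough estimate of the object, then let the network refine that estimate instead of learning the optics from scratch. `physics_approximant` and `reconstruct` are hypothetical names, and an identity function stands in for a trained network.

```python
import numpy as np

def propagate(field, distance, wavelength, pixel_size):
    """Angular-spectrum propagation; a negative distance propagates backward."""
    n, m = field.shape
    FX, FY = np.meshgrid(np.fft.fftfreq(m, d=pixel_size), np.fft.fftfreq(n, d=pixel_size))
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    k = 2 * np.pi / wavelength
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Phase relative to the on-axis wave; evanescent components are discarded.
    transfer = np.where(arg > 0, np.exp(1j * (kz - k) * distance), 0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def physics_approximant(intensity, distance, wavelength, pixel_size):
    """Rough phase estimate from one defocused intensity image (hypothetical helper).

    The camera records only intensity, so the unknown phase at the sensor is
    set to zero and the measured amplitude is propagated back by the known
    defocus distance to the object plane.
    """
    amplitude = np.sqrt(np.clip(intensity, 0.0, None))
    return np.angle(propagate(amplitude, -distance, wavelength, pixel_size))

def reconstruct(intensity, network, distance, wavelength, pixel_size):
    """Physics first, learned correction second; `network` is any trained model (assumed)."""
    return network(physics_approximant(intensity, distance, wavelength, pixel_size))

# Simulated measurement: a faint transparent disc, 500 µm out of focus, ~1 photon/pixel.
N, pixel, wavelength, z = 256, 1e-6, 0.5e-6, 500e-6   # all values assumed
y, x = np.mgrid[:N, :N] - N / 2
true_phase = 0.3 * ((x**2 + y**2) < 20**2)
intensity = np.abs(propagate(np.exp(1j * true_phase), z, wavelength, pixel)) ** 2

photons_per_pixel = 1.0
counts = np.random.default_rng(0).poisson(intensity * photons_per_pixel)

# An identity function stands in for the trained network in this sketch.
estimate = reconstruct(counts / photons_per_pixel, lambda p: p, z, wavelength, pixel)
```

In this arrangement the network only has to clean up shot noise and the missing phase information, while the known defocus law handles the optics.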

The team repeated their experiments with another 10,000 images of more general and varied objects, including people, places, and animals. Again, the neural network with the embedded physics law was able to re-create an image of a transparent etching taken in the dark, even though this time it had been trained on these general images rather than on etchings.

The results demonstrate that neural networks may be used to reveal transparent features, such as biological tissues and cells, in images taken with very little light.

“If you blast biological cells with light, you burn them, and there is nothing left to image,” says George Barbastathis, a professor of mechanical engineering. “If you expose a patient to x-rays, you increase the danger they may get cancer. What we’re doing here [means] you can get the same image quality, but with a lower exposure to the patient. And in biology, you can reduce the damage to biological specimens when you want to sample them.”

MIT Technology Review
