Neural Networks Are Learning What to Remember and What to Forget

Deep learning is changing the way we use and think about machines. Current incarnations outperform humans at all kinds of tasks, from chess and Go to face and object recognition.

But in many respects machine learning still lags far behind human performance. In particular, humans have the extraordinary ability to constantly update their memories with the most important knowledge while overwriting information that is no longer useful.

That’s an important skill. The world provides a never-ending source of data, much of which is irrelevant to the tricky business of survival, and most of which is impossible to store in a limited memory. So humans and other creatures have evolved ways to retain important skills while forgetting irrelevant ones.

The same cannot be said of machines. When a neural network is trained on a new task, the skills it learned earlier are quickly overwritten, regardless of how important they are, a problem known as catastrophic forgetting. There is currently no reliable mechanism networks can use to prioritize their skills and decide what to remember and what to forget.

Today that looks set to change thanks to the work of Rahaf Aljundi and pals at the University of Leuven in Belgium and at Facebook AI Research. These guys have shown that the approach biological systems use to learn, and to forget, can work with artificial neural networks too.

The key is a process known as Hebbian learning, first proposed in the 1940s by the Canadian psychologist Donald Hebb to explain the way brains learn via synaptic plasticity. Hebb’s theory can be famously summarized as “Cells that fire together wire together.”

In other words, the connections between neurons grow stronger if they fire together, and these connections are therefore more difficult to break. This is how we learn—repeated synchronized firing of neurons makes the connections between them stronger and harder to overwrite.
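
To make Hebb's idea concrete, here is a minimal sketch in Python with NumPy, assuming the simplest textbook form of the rule, Δw = η · x · y, in which a connection's weight grows in proportion to the product of the activity at its two ends. The function name `hebbian_update` and the learning rate are illustrative choices, and practical Hebbian models usually add decay or normalization that this sketch leaves out.

```python
import numpy as np

def hebbian_update(weights, pre, post, learning_rate=0.1):
    """Apply one plain Hebbian step: each weight grows in proportion to the
    product of its presynaptic (input) and postsynaptic (output) activity."""
    # weights[i, j] connects presynaptic neuron j to postsynaptic neuron i
    return weights + learning_rate * np.outer(post, pre)

# Two input neurons, two output neurons, no connections to start with.
w = np.zeros((2, 2))
pre = np.array([1.0, 0.0])   # input neuron 0 fires, input neuron 1 is silent
post = np.array([1.0, 0.0])  # output neuron 0 fires at the same time

for _ in range(10):          # repeated synchronized firing
    w = hebbian_update(w, pre, post)

print(w)
```

Only the connection between the two neurons that fired together is strengthened; every other weight stays at zero.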

So Aljundi and co have developed a way for artificial neural networks to behave in the same way. They do this by measuring a network's outputs and monitoring how sensitive those outputs are to changes in each of the connections within the network.
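
In the arXiv paper, a parameter's importance is measured as the magnitude of the gradient of the squared L2 norm of the network's output with respect to that parameter, averaged over data points; because it only looks at outputs, no labels are needed. The NumPy sketch below assumes a toy two-layer ReLU network with hand-written backpropagation. The network shape, the data and the name `mas_importance` are illustrative, not the authors' code.

```python
import numpy as np

def mas_importance(W1, W2, inputs):
    """Estimate per-weight importance for a tiny two-layer ReLU network
    F(x) = W2 @ relu(W1 @ x), as the mean absolute gradient of the squared
    L2 norm of the output with respect to each weight."""
    omega1 = np.zeros_like(W1)
    omega2 = np.zeros_like(W2)
    for x in inputs:
        # Forward pass.
        h = W1 @ x
        a = np.maximum(h, 0.0)
        y = W2 @ a
        # Backward pass for ||y||^2 (no labels involved).
        dy = 2.0 * y
        grad_W2 = np.outer(dy, a)
        da = W2.T @ dy
        dh = da * (h > 0)          # ReLU passes gradient only where h > 0
        grad_W1 = np.outer(dh, x)
        # Accumulate how strongly the output reacts to each weight.
        omega1 += np.abs(grad_W1)
        omega2 += np.abs(grad_W2)
    n = len(inputs)
    return omega1 / n, omega2 / n

rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(2, 4))
inputs = rng.normal(size=(100, 3))
inputs[:, 2] = 0.0  # the third input never carries any signal

omega1, omega2 = mas_importance(W1, W2, inputs)
print(omega1[:, 2])  # weights fed by the silent input get zero importance
```

A weight that never influences the output, such as one attached to an input that is always zero, ends up with zero importance and is therefore free to change later.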

This gives them a sense of which network parameters are most important and should therefore be preserved. “When learning a new task, changes to important parameters are penalized,” say the team. They say the resulting network has “memory aware synapses.”
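
Per the paper, the penalty takes the form λ Σ Ω_ij (θ_ij − θ*_ij)², added to the new task's loss, where θ* are the weights left by the previous task and Ω_ij their importances. The sketch below assumes weights are stored as a list of NumPy arrays; `mas_penalty`, `mas_penalty_grad` and `lam` are hypothetical names chosen for illustration.

```python
import numpy as np

def mas_penalty(params, old_params, omegas, lam=1.0):
    """Regularizer added to the new task's loss:
    lam * sum(omega * (theta - theta_old)^2), over all weight arrays."""
    return lam * sum(
        float(np.sum(om * (p - p_old) ** 2))
        for p, p_old, om in zip(params, old_params, omegas)
    )

def mas_penalty_grad(params, old_params, omegas, lam=1.0):
    """Gradient of the penalty with respect to each weight array: it pulls
    important weights back toward their old values."""
    return [2.0 * lam * om * (p - p_old)
            for p, p_old, om in zip(params, old_params, omegas)]

old = [np.array([1.0, 1.0])]
omega = [np.array([10.0, 0.1])]          # first weight important, second not
moved_important = [np.array([1.5, 1.0])]
moved_unimportant = [np.array([1.0, 1.5])]

print(mas_penalty(moved_important, old, omega))    # 10 * 0.5**2
print(mas_penalty(moved_unimportant, old, omega))  # 0.1 * 0.5**2
```

Moving each weight by the same amount costs a hundred times more for the important one, so gradient descent on the combined loss prefers to adapt the weights the old task does not rely on.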

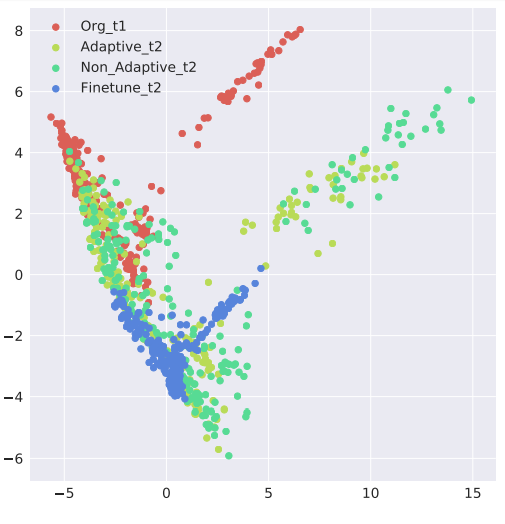

They’ve put this idea through its paces with a set of tests in which a neural network trained to do one thing is then given data that trains it to do something else. For example, a network trained to recognize flowers is then shown birds. The researchers then show it flowers again to see how much of this skill is preserved.

Neural networks with memory aware synapses turn out to perform better in these tests than other networks: they preserve more of the original skill than networks without this ability, although the results certainly leave room for improvement.

The key point, though, is that the team has found a way for neural networks to employ Hebbian learning. “We show that a local version of our method is a direct application of Hebb’s rule in identifying the important connections between neurons,” say Aljundi and co.
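
One way to see the link to Hebb is to compute importance locally, from a single layer's own output rather than the whole network's, which is how we read the paper's local variant. The sketch below assumes a single ReLU layer y = relu(W x). For that layer, the gradient of the squared output norm with respect to W[i, j] works out to 2 · y_i · x_j, the product of the activity at both ends of the connection. This is a derivation for a toy layer, not the authors' code, and `local_importance` is a hypothetical name.

```python
import numpy as np

def local_importance(W, inputs):
    """Local importance for one ReLU layer y = relu(W @ x): the mean absolute
    gradient of ||y||^2 with respect to each weight W[i, j].
    For this layer the gradient is 2 * y[i] * x[j], the co-activation of the
    two neurons the weight connects, which mirrors Hebb's rule."""
    omega = np.zeros_like(W)
    for x in inputs:
        y = np.maximum(W @ x, 0.0)
        omega += np.abs(2.0 * np.outer(y, x))
    return omega / len(inputs)

W = np.eye(2)
# Input neuron 0 and output neuron 0 are repeatedly active together;
# neuron 1 never fires.
inputs = np.array([[1.0, 0.0]] * 5)
print(local_importance(W, inputs))  # only W[0, 0] is marked important
```

Connections whose two neurons "fire together" receive high importance and are protected, while connections between neurons that are rarely co-active stay free to be rewired.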

That has implications for the future of machine learning. If these scientists can improve their version of Hebbian learning, it should make machines more flexible learners, better able to adapt to the real world.

Ref: arxiv.org/abs/1711.09601: Memory Aware Synapses: Learning What (Not) To Forget
