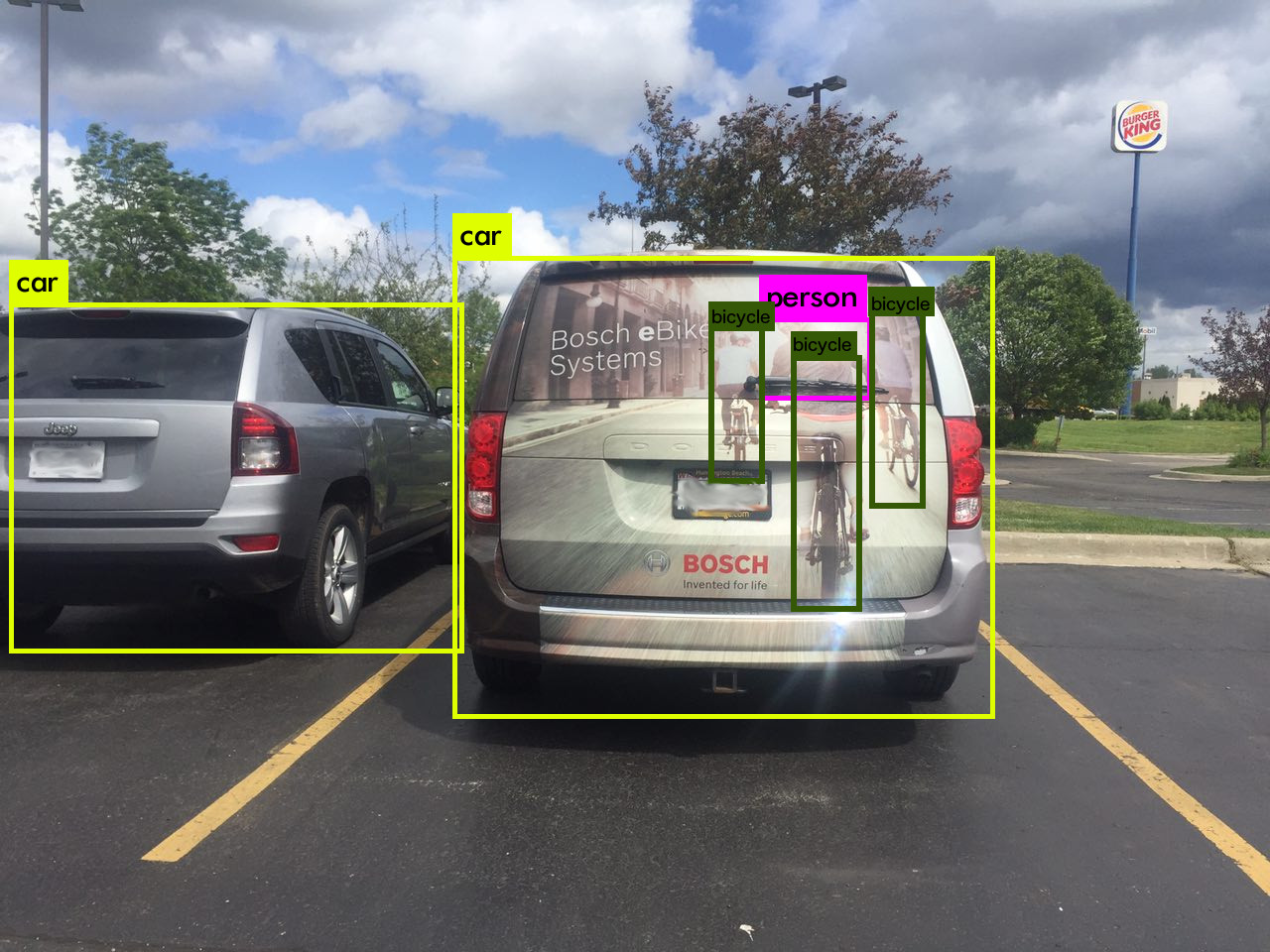

This Image Is Why Self-Driving Cars Come Loaded with Many Types of Sensors

Makers of autonomous cars often proudly claim that their vehicles are fitted with a long list of sensors: cameras, ultrasound, radar, lidar, you name it. But if you’ve ever wondered why so many sensors are required, look no further than this picture.

You’re looking at what’s known in the autonomous-car industry as an “edge case”—a situation where a vehicle might have behaved unpredictably because its software processed an unusual scenario differently from the way a human would. In this example, image-recognition software applied to data from a regular camera has been fooled into thinking that images of cyclists on the back of a van are genuine human cyclists.

This particular blind spot was identified by researchers at Cognata, a firm that builds software simulators—essentially, highly detailed and programmable computer games—in which automakers can test autonomous-driving algorithms. That allows them to throw these kinds of edge cases at vehicles until they can work out how to deal with them, without risking an accident.

Most autonomous cars overcome issues like the baffling image by using different types of sensing. “Lidar cannot sense glass, radar senses mainly metal, and the camera can be fooled by images,” explains Danny Atsmon, the CEO of Cognata. “Each of the sensors used in autonomous driving comes to solve another part of the sensing challenge.” By gradually figuring out which data can be used to correctly deal with particular edge cases—either in simulation or in real life—the cars can learn to deal with more complex situations.
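To make the idea concrete, here is a minimal sketch of how a second sensor might veto a camera detection. It is not Cognata's or any automaker's method. The `Detection` fields, the function names, and the thresholds are all hypothetical, chosen only for illustration. It rests on one assumption: a picture printed on the flat back of a van shows almost no depth variation to lidar, while a real cyclist stands out from the scene behind.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by the camera, annotated with lidar data (hypothetical layout)."""
    label: str                   # class name from image recognition
    camera_confidence: float     # score between 0 and 1 from the camera model
    lidar_depth_spread_m: float  # spread of lidar depths inside the bounding box, in metres


def is_physical_object(det: Detection, min_depth_spread_m: float = 0.2) -> bool:
    # Assumption: a flat printed image yields nearly uniform lidar depth,
    # so a very small spread suggests a picture rather than a real object.
    return det.lidar_depth_spread_m >= min_depth_spread_m


def confirm_cyclist(det: Detection, min_camera_confidence: float = 0.5) -> bool:
    # Accept a cyclist only when the camera and the lidar check agree.
    return (
        det.label == "cyclist"
        and det.camera_confidence >= min_camera_confidence
        and is_physical_object(det)
    )


# Illustrative values, not measurements.
real_cyclist = Detection("cyclist", camera_confidence=0.9, lidar_depth_spread_m=1.5)
van_picture = Detection("cyclist", camera_confidence=0.9, lidar_depth_spread_m=0.03)
```

In this toy setup, `confirm_cyclist(real_cyclist)` passes and `confirm_cyclist(van_picture)` does not, even though the camera is equally confident about both. A production system would weigh many more signals than this.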

Tesla was criticized for its decision to use only radar, camera, and ultrasound sensors to provide data for its Autopilot system after one of its vehicles failed to discern a truck trailer from a bright sky and ran into it, killing the driver of the Tesla. Critics argue that lidar is an essential element in the sensor mix—it works well in low light and glare, unlike a camera, and provides more detailed data than radar or ultrasound. But as Atsmon points out, even lidar isn’t without its flaws: it can’t tell the difference between a red and green traffic signal, for example.

The safest bet, then, is for automakers to use an array of sensors, in order to build redundancy into their systems. Cyclists, at least, will thank them for it.

(Read more: “Robot Cars Can Learn to Drive without Leaving the Garage,” “Self-Driving Cars’ Spinning-Laser Problem,” “Tesla Crash Will Shape the Future of Automated Cars”)
