Your Best Teammate Might Someday Be an Algorithm

Google is launching an effort that it hopes will turn humans and intelligent machines into productive work buddies.

Through a project called People + AI Research, or PAIR, Google will release tools designed to help make the inner workings of AI systems more transparent. The company is also launching several research initiatives aimed at finding new ways for humans and AI systems to collaborate effectively.

A great deal has been made recently of the potential for artificial intelligence to eliminate jobs and replace humans, but in truth the technology may often serve as a powerful new kind of tool capable of automating only part of a person’s work.

Figuring out how to get humans and AI algorithms to collaborate effectively could have big economic significance, and it could shape the way the workforce is educated. It might also play a role in tempering negative reactions to the growing role of automation in many settings (see “Is Technology Destroying Jobs?” and “Who Will Own the Robots?”).

Barbara Grosz, a professor at Harvard University who has long argued that computer scientists should design AI systems to complement rather than replace people, says this approach is needed because AI is still so limited in what it can do. Grosz adds that human and machine capabilities can add up to more than the sum of their parts. “AI systems, like all computers, need to be developed for the people who are going to use them,” she says.

Some sense of how AI algorithms may collaborate with humans can be seen in games. Chess or Go players can team up with computer programs to perform at an elevated level. This requires a new set of skills and a new approach to each game.

“The past few years have seen rapid advances in machine learning, with dramatic improvements in technical performance,” the researchers wrote in a blog post announcing the initiative. “But we believe AI can go much further—and be more useful to all of us—if we build systems with people in mind at the start of the process.”

PAIR is being led by Fernanda Viégas and Martin Wattenberg, researchers who specialize in developing visualizations that make complex information more comprehensible. Viégas and Wattenberg previously developed a series of tools to elucidate the behavior of complex and abstract machine-learning models. The opacity of such models is a growing concern for those hoping to deploy them in a wider range of settings (see “The Dark Secret at the Heart of AI”).

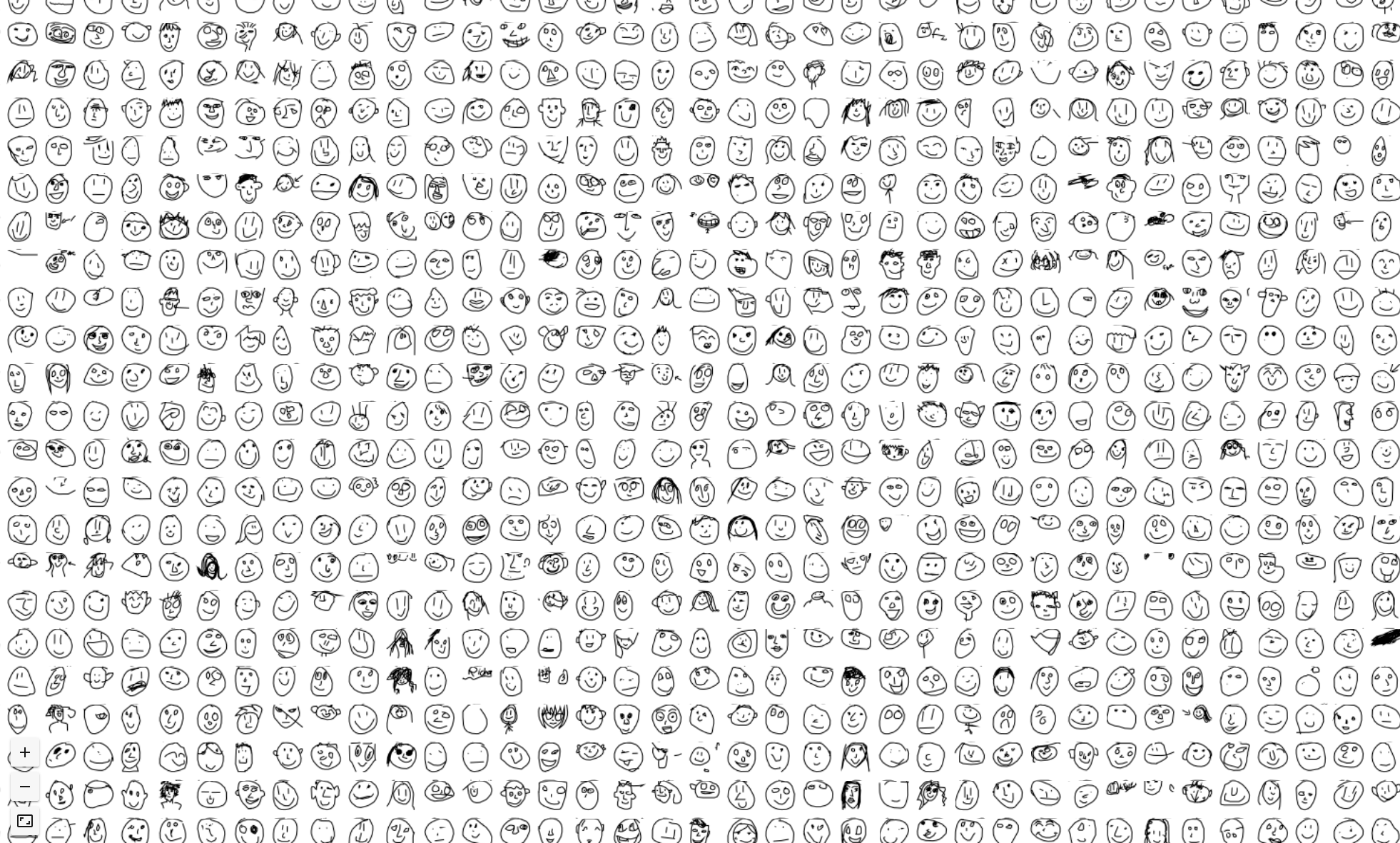

The PAIR project is today releasing two tools for visualizing the kind of large data sets used to train a machine-learning model to make useful predictions. These visualizations can help a data scientist identify anomalies in training data.

Grosz says it is especially challenging to build AI systems that work well with humans, partly because those systems may be complex and opaque, but also because interacting intelligently with a person is itself a hard problem. “In every situation you need to be able to model a person’s mental state,” she says.
