Getting robots to do what we want often requires giving them explicit commands for very specific tasks. But new research suggests that we could one day control them in much more intuitive ways.

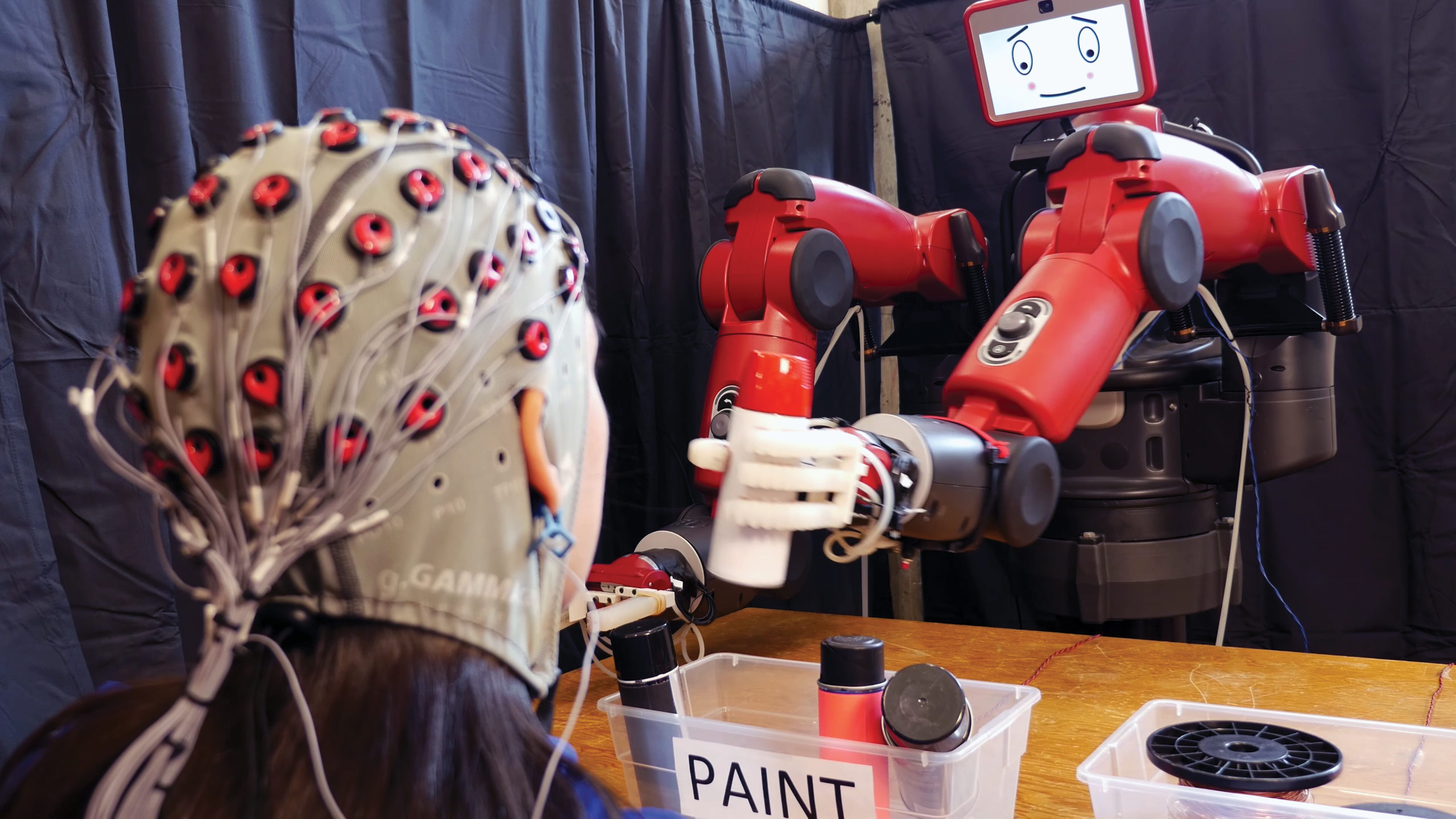

A team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Boston University has developed a feedback system that lets people use their thoughts to correct robots instantly when the machines make mistakes. Using data from an electroencephalography (EEG) monitor that records brain activity, the system can detect—in the space of 10 to 30 milliseconds—if a person notices an error as a robot performs an object-sorting task.

“Imagine being able to instantaneously tell a robot to do a certain action, without needing to type a command, push a button, or even say a word,” says CSAIL director Daniela Rus, a senior author on a paper about the research being presented at the IEEE International Conference on Robotics and Automation in May. “A streamlined approach like that would improve our abilities to supervise factory robots, driverless cars, and other technologies we haven’t even invented yet.”

Past work in robotics controlled by EEG has required training humans to think in a prescribed way that computers can recognize. For example, an operator might have to look at one of two bright light displays, each of which corresponds to a different task for the robot to execute. But the training process and the act of modulating one’s thoughts can be taxing, particularly for people who supervise tasks in navigation or construction that require intense concentration.

Rus’s team wanted to make the experience more natural. To do that, they focused on brain signals called error-related potentials (ErrPs), which are generated whenever our brains notice a mistake. As the robot—in this case, a humanoid robot named Baxter from Rethink Robotics, the company led by former CSAIL director Rodney Brooks—indicates which choice it plans to make in a binary activity, the system uses ErrPs to determine whether the human agrees with the decision.

“As you watch the robot, all you have to do is mentally agree or disagree with what it is doing,” says Rus. “You don’t have to train yourself to think in a certain way—the machine adapts to you and not the other way around.”
