Better Brain Imaging Could Show Computers a Smarter Way to Learn

Machine learning is an extremely clever approach to computer programming. Instead of having to carefully write out instructions for a particular task, you just feed millions of examples into a very powerful computer and, essentially, let it write the program itself.

Many of the gadgets and online services we take for granted today, like Web search, voice recognition, and image tagging, make use of some form of machine learning. And companies that have oodles of user data (Google, Facebook, Apple, Walmart, etc.) are nicely placed to ride this trend to riches.

A new $12 million project at Carnegie Mellon University could make machine learning even more powerful by uncovering ways to teach computers more efficiently while using much less data.

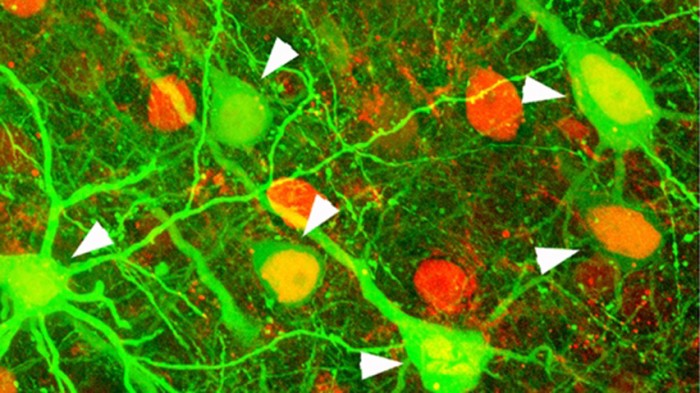

The five-year effort will use a newish technique, called two-photon calcium imaging microscopy, to study the way visual information is processed in the brain. The funding comes through President Obama’s BRAIN Initiative, and the project is a good example of the near-term benefits that powerful new brain imaging techniques could have.

Many of the best machine-learning algorithms are, in fact, already loosely based on the functioning of the brain. But these are incredibly crude, and do not account for some simple features of biological networks.

“Powerful as they are, [these algorithms] aren’t nearly as efficient or powerful as those used by the human brain,” said Tai Sing Lee, a professor of computer science at CMU who is leading the effort. “For instance, to learn to recognize an object, a computer might need to be shown thousands of labeled examples and taught in a supervised manner, while a person would require only a handful and might not need supervision.”

Lee will collaborate with Sandra Kuhlman, a professor of biological sciences, also at CMU, and Alan Yuille, a professor of cognitive sciences at Johns Hopkins University.

It isn’t just neuroscience that could help us develop better machine-learning approaches. Some cognitive scientists are taking inspiration from psychological observations in order to build clever new learning systems.
