Telepresence Robot for the Disabled Takes Directions from Brain Signals

People with severe motor disabilities are testing a new way to interact with the world—using a robot controlled by brain signals.

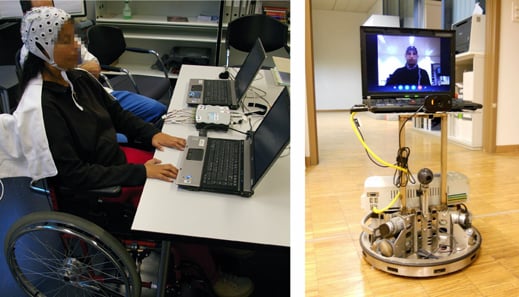

An experimental telepresence robot created by Italian and Swiss researchers uses its own smarts to make things easier for the person using it, a system dubbed shared control. The user tells the robot where to go via a brainwave-detecting headset, and the robot takes care of details like avoiding obstacles and determining the best route forward.

The robot is essentially a laptop mounted on a rolling base—the user sees the robot’s surroundings via the laptop’s webcam, and can converse with people over Skype.

To move the robot, users wear a skullcap studded with electroencephalogram (EEG) sensors and imagine moving their feet or hands. Each imagined movement corresponds to a different command, such as forward, backward, left, or right. Software translates the resulting signals into actions for the robot.

However, the robot’s control software decides for itself the best way to change trajectories and accelerate to get where it has been told to go. It has nine infrared sensors that alert it to obstacles, which it can move around while also following the user’s directions.
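As a rough illustration of the shared-control idea described above, the sketch below blends a decoded user command with readings from nine infrared sensors. It is not the researchers' software: the function name, the speed and turn values, the safe-distance threshold, and the assumption that the sensors are arranged left to right across the front of the robot are all hypothetical.

```python
from typing import Sequence, Tuple

# Hypothetical mapping from a decoded user command to a desired motion:
# (forward speed in m/s, turn rate in rad/s; positive turn = left).
COMMAND_TO_MOTION = {
    "forward": (0.3, 0.0),
    "backward": (-0.3, 0.0),
    "left": (0.1, 0.5),
    "right": (0.1, -0.5),
}

SAFE_DISTANCE_M = 0.5  # assumed range below which an obstacle matters
AVOID_GAIN = 0.2       # assumed strength of the steering correction


def shared_control_step(
    command: str, ir_distances_m: Sequence[float]
) -> Tuple[float, float]:
    """Return (speed, turn) after blending the user's command with avoidance.

    ir_distances_m holds nine readings, assumed ordered left to right
    across the front of the robot.
    """
    if len(ir_distances_m) != 9:
        raise ValueError("expected nine infrared readings")
    speed, turn = COMMAND_TO_MOTION[command]

    nearest = min(ir_distances_m)
    if nearest >= SAFE_DISTANCE_M or speed <= 0:
        # Path is clear, or the robot is not driving forward:
        # pass the user's command through unchanged.
        return speed, turn

    # Slow down in proportion to how close the nearest obstacle is.
    speed *= nearest / SAFE_DISTANCE_M

    # Steer away: an obstacle on the left pushes the robot right, and
    # vice versa. The center sensor only contributes to slowing down.
    center = 4
    for i, distance in enumerate(ir_distances_m):
        if distance < SAFE_DISTANCE_M:
            closeness = 1.0 - distance / SAFE_DISTANCE_M
            turn += AVOID_GAIN * closeness * (i - center) / center

    return speed, turn
```

The point of the sketch is that the user only chooses a direction, while the adjustments to speed and heading near obstacles happen without any further commands.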

That design makes the robot easy enough to use that it could offer a practical way to give disabled people more independence, says Robert Leeb, a research scientist at the Swiss Federal Institute of Technology in Lausanne who worked on the project. “Imagine an end-user lying in his bed at home connected to all the necessary equipment to support his life,” he says. “With such a telepresence robot, he could again participate in his family life.”

The researchers tested the robot by having people with and without motor disabilities navigate it through several rooms filled with obstacles. Both groups were able to steer through the course in similar times. With shared control, participants needed fewer commands and completed the course faster than when they controlled the robot entirely themselves.

Participants without motor disabilities were also tested on how quickly they could manually navigate the robot without giving it any autonomy. The study found their times to be only slightly shorter than when they shared control and navigated via brain-computer interface. The researchers published their results in a recent issue of the journal Proceedings of the IEEE.

Researchers are exploring using brain-computer interfaces for everything from steering wheelchairs to moving prosthetic limbs. Versions that are implanted into the brain can give people impressive control of robotic limbs, but are challenging to install and maintain, and not widely used (see “The Thought Experiment”).

Non-invasive brain-computer interfaces that listen for EEG signals simply by touching the scalp at several points are less powerful but more practical. Simple systems are available for home use, such as the Muse headband, intended to aid meditation, and Emotiv, designed for gaming (see “Brain Games”).

“In the last five years, brain-computer interfaces made a big step out of the lab into more clinical and home settings,” says Gernot Muller-Putz, who leads the BCI Institute at the Graz University of Technology in Austria. Devices that used to be designed with researchers in mind have been reimagined with more focus on the needs of the people who may want or need to use them to interact with technology, he says.

However, Leeb says, his shared control system for robots won’t be heading to market for years to come. Most effort on commercializing EEG-based brain-computer interfaces is focused on low-cost single-purpose devices, not making high-quality sensors that could be used in many different applications.

“If we develop a system which can then be used easily by everybody, just like a cell phone, this would push the brain-computer interface technology wide out,” Leeb says.
