Squeezed Light and Quantum Clockspeeds

The world’s fastest computer is the Tianhe-2 supercomputer at the National Super Computer Center in Guangzhou, China. It consists of 16,000 computer nodes, each with two Intel Ivy Bridge Xeon processors and three Xeon Phi coprocessors. Together these make it capable of 33.86 quadrillion floating point calculations per second, more than any other computing machine on the planet.

Clearly, the resources available to carry out a calculation are the crucial factor in its performance, and the number of calculations per second is a good guide to a computer’s power.

But quantifying the power of a quantum computer is much harder. These computing devices can perform calculations that are beyond the ken of ordinary processing machines. And yet the resources they require to do this trick are poorly understood.

Certainly the number of quantum bits in play is crucial but so is the amount of entanglement that the computation involves. And that leads to a puzzle: some types of quantum computation require high levels of entanglement while others require almost none to do similar things. So what is the resource that gives quantum computations their special power?

Today, we get an answer of sorts thanks to the work of Nana Liu at the University of Oxford in the U.K. and a few pals who have found a way to evaluate the performance of quantum computers using a single parameter that functions as a kind of quantum clockspeed. This makes it possible to compare different kinds of quantum calculation on a level playing field for the first time.

First some background. The basic idea behind quantum computation is that a quantum object can exist in a superposition of states and therefore as a 0 and a 1 at the same time. This information can be combined with that carried by another quantum object to perform a calculation. But instead of a single reckoning, a quantum computation allows two or more calculations to proceed at the same time, one for each of the numbers in superposition.

That’s the origin of the speedup possible with quantum computers. And while one quantum bit can handle two numbers, two quantum bits can handle four numbers, three qubits eight numbers, four qubits 16 numbers, and so on. So the number of values a quantum calculation can handle at once scales exponentially with the number of qubits.
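As a rough illustration of that scaling, the sketch below simply counts how many numbers an n-qubit register can hold in superposition. The function name is hypothetical and this is plain arithmetic, not a simulation of any quantum device.

```python
def values_in_superposition(n_qubits: int) -> int:
    """Number of distinct bit patterns an n-qubit register can superpose.

    Each extra qubit doubles the count, so the total is 2 ** n_qubits.
    """
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    return 2 ** n_qubits


# Reproduce the sequence quoted in the text: 2, 4, 8, 16, ...
for n in range(1, 5):
    print(f"{n} qubit(s): {values_in_superposition(n)} numbers")
```

The doubling is why even a few dozen qubits correspond to more values than an ordinary machine can comfortably track one by one.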

But there is another factor in this: the way the qubits are combined and manipulated. One way to do this is to entangle the qubits. Entanglement is the curious process in which two quantum objects become so closely linked that they share the same existence. So a measurement performed on one instantaneously influences the other, no matter how far away.

This allows calculations, like factoring, to be performed at speeds that would make the Tianhe-2 look like a pocket calculator. (At least in theory. Physicists haven’t quite overcome the significant technical challenges in building powerful quantum computers yet.)

But in some types of quantum calculation, very little, if any, entanglement seems necessary. A notable example is called “deterministic quantum computation with one qubit.” This can perform certain types of calculation faster than any ordinary computer. But nobody knows how much more or less powerful this flavor of calculation is compared to, say, quantum factoring, because there has never been a way to compare them. Until now.

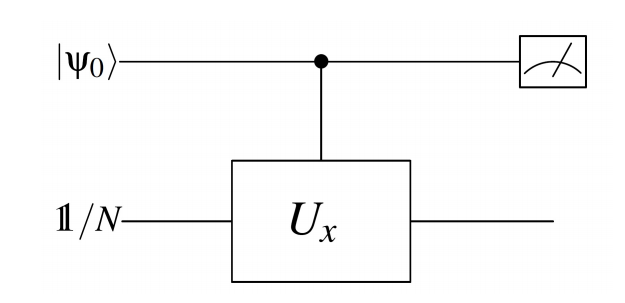

Liu and co have discovered an entirely new way to perform quantum computations that allows the different flavors of calculation to be compared for the first time. Their approach relies on a phenomenon called quantum squeezing. This is a way of manipulating entangled photons to reduce the background vacuum noise associated with them.

The trick is based on Heisenberg’s uncertainty principle—that it is possible to measure a photon’s position accurately or its momentum but not both at the same time. The same is true for other quantum properties such as energy and time or angle and angular momentum—there is always a trade-off in knowing one or the other.

This principle allows physicists to reduce the noise associated with entangled photons when they are detected by making them less measurable in other places—a process known as quantum squeezing. That’s hugely important because reducing the amount of noise allows measurements to be made much more precise. And the amount of squeezing is exactly quantifiable, so it’s easy to see how much of this quantum property is in use.
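To make “exactly quantifiable” a little more concrete, here is a minimal sketch that assumes the standard textbook model of an ideal squeezed vacuum state. The squeezing parameter `r`, the convention that the unsqueezed vacuum noise variance is 1/2, and the function names are all assumptions for illustration; none of them is taken from Liu and co’s paper.

```python
import math
from typing import Tuple

VACUUM_VARIANCE = 0.5  # assumed convention: hbar = 1, vacuum noise variance 1/2


def squeezed_variances(r: float) -> Tuple[float, float]:
    """Noise variances of the two conjugate quadratures for squeezing parameter r.

    One quadrature gets quieter by exp(-2r); the other gets noisier by exp(+2r),
    so their product stays at the uncertainty-principle minimum of 1/4.
    """
    var_squeezed = VACUUM_VARIANCE * math.exp(-2.0 * r)
    var_antisqueezed = VACUUM_VARIANCE * math.exp(2.0 * r)
    return var_squeezed, var_antisqueezed


def squeezing_db(r: float) -> float:
    """Noise reduction below the vacuum level, expressed in decibels."""
    var_squeezed, _ = squeezed_variances(r)
    return -10.0 * math.log10(var_squeezed / VACUUM_VARIANCE)


for r in (0.0, 0.5, 1.0):
    quiet, noisy = squeezed_variances(r)
    print(f"r={r}: quiet={quiet:.4f}, noisy={noisy:.4f}, "
          f"product={quiet * noisy:.4f}, reduction={squeezing_db(r):.2f} dB")
```

In this model a single number, `r`, captures the trade-off: noise removed from one property reappears in its partner, which is why the amount of squeezing can serve as a measurable resource.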

This gave Liu and co an idea. Because many forms of quantum computation involve photons, they replaced the ordinary photons with squeezed versions. The quantumness involved in each computation could then be exactly quantified by the amount of squeezing required to carry it out. Liu and co measured it in “qumodes,” hence the title of the paper.

The results make for interesting reading. Liu and co say that deterministic quantum computation with one qubit requires zero squeezing and so comes at the bottom of the hierarchy of quantum clockspeeds.

By contrast, the amount of squeezing necessary for quantum factoring depends on the size of the number being factored. Indeed, the amount of squeezing increases exponentially as the number gets bigger.

That allows these two forms of quantum computation to be compared for the first time. “This introduces a new perspective in which to think about hierarchies in quantum algorithms,” say Liu and co.

That should be handy in the future. And there is an interesting corollary that involves the quantum computing now being done by organizations such as Google and NASA courtesy of a computing device sold by a company called D-Wave Systems.

This machine uses quantum annealing to compute but it is hugely controversial. D-Wave insists that the machine is exponentially faster than conventional computers for some types of calculation. But many physicists are deeply skeptical, saying that it lacks the essential quantumness needed to perform the claimed computational feats.

Perhaps the new quantum squeezing approach can help. If it provides a fair way to compare the performance of D-Wave’s machine with other forms of quantum computing, then this debate could be laid to rest. And that should make it possible to say for sure whether this technique will ever lead to a machine more powerful than Tianhe-2.

Ref: arxiv.org/abs/1510.04758 : The Power of One Qumode
