Researchers Employ Baxter Robot to Help the Blind

Many people with visual impairments find seeing-eye dogs invaluable for avoiding obstacles and negotiating traffic. But even the smartest guide dog can’t distinguish between similar banknotes, read a bus timetable, or give directions. Now robotics researchers at Carnegie Mellon University are developing assistive robots to help blind travelers navigate the modern world.

“Part of our job is to invent the future,” says M. Bernardine Dias, a professor in the university’s Robotics Institute. “We envision robots being part of society in smart cities and want to make sure that people with visual impairment and other disabilities aren’t left out of that future.”

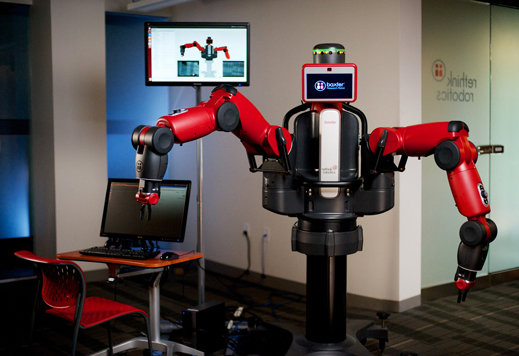

Dias and collaborator Aaron Steinfeld are working to discover the most effective ways for a Baxter research robot to interact with blind and partially sighted people. It’s a chicken-and-egg problem, says Dias: “If you’ve never interacted with a robot before and you haven’t imagined that possibility, then it’s difficult to answer the question, what would you like to do with a robot?”

In discussion with members of Pittsburgh’s visually impaired community, Dias and Steinfeld settled on the concept of an assistive robot at an information desk in a busy transit center. The idea is that the robot would provide help with visual or physical tasks when human workers were either absent or overwhelmed by disgruntled travelers.

The research, funded by the National Science Foundation and now in its second year, has already revealed some surprises. “Sighted people tend to be apprehensive when they meet a dexterous humanoid robot for the first time,” says Steinfeld. “But blind people seem to be very comfortable interacting with the robot. They were more comfortable holding the robot’s plastic fingers, in fact, than having physical contact with another human being.”

One key reason Dias and Steinfeld chose the Baxter robot was its lack of dangerous pinch points, which makes it safe for people who interact with it through their sense of touch. The robot starts by introducing itself and then switches itself off so that a visually impaired user can explore its shape and construction by hand. When the user is ready to continue, a verbal command turns the Baxter on again.

Baxter can also quickly learn new tasks by copying users moving its manipulators. “This opens up a whole world of possibilities in terms of having blind people teach Baxter to do things of use to them,” says Dias. “This is for the future, but it is very exciting.”

Dias and Steinfeld now want to integrate the Baxter assistive robot with NavPal, a smartphone navigation app they have already developed, which provides “audio breadcrumbs” to warn visually impaired pedestrians of hyperlocal hazards like potholes or construction sites. Ultimately, the researchers would also like to introduce mobile robots to physically guide people in the manner of seeing-eye dogs. “We’ll have our first stab at that in the next year or so,” says Steinfeld. “Our immediate milestone is to try to fill a gap and help people who’d normally be on their own.”
