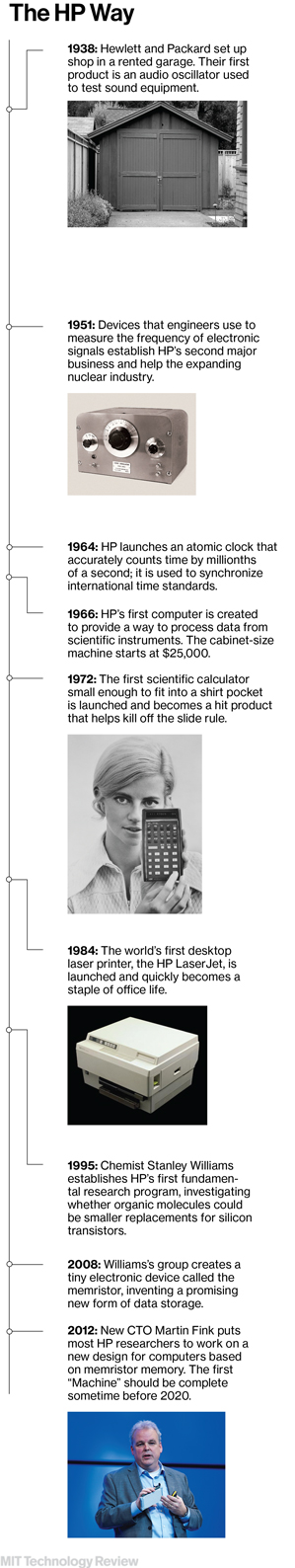

Machine Dreams

There is a shrine inside Hewlett-Packard’s headquarters in Palo Alto, in the heart of Silicon Valley. At one edge of HP’s research building, two interconnected rooms with worn midcentury furniture, vacant for decades, are carefully preserved. From these offices, William Hewlett and David Packard led HP’s engineers to invent breakthrough products, like the 40-pound, typewriter-size programmable calculator launched in 1968.

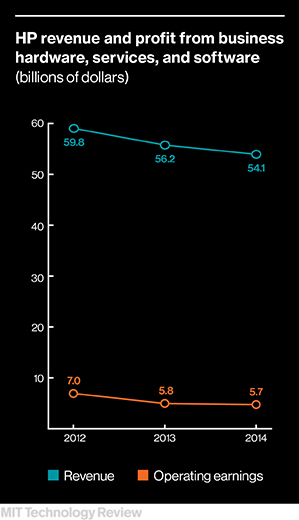

In today’s era of smartphones and cloud computing, HP’s core products could also look antiquated before long. Revenue and profit have slid significantly in recent years, pitching the company into crisis. HP is sustained mostly by sales of servers, printers, and ink (its PCs and laptops contribute less than one-fifth of total profits). But businesses have less need for servers now that they can turn to cloud services run by companies like Amazon—which buy their hardware from cheaper suppliers than HP. Consumers and businesses rely much less on printers than they once did and don’t expect to pay much for them.

HP has shed over 40,000 jobs since 2012, and it will split into two smaller but similarly troubled companies later this year (an operation that will itself cost almost $2 billion). HP Inc. will sell printers and PCs; Hewlett Packard Enterprise will offer servers and information technology services to corporations. That latter company will depend largely on a division whose annual revenue dropped by more than 6 percent between 2012 and 2014. Earnings shrank even faster, by over 20 percent. IBM, HP’s closest rival, sold off its server business to China’s Lenovo last year under similar pressures.

And yet, in the midst of this potentially existential crisis, HP Enterprise is working on a risky research project in hopes of driving a remarkable comeback. Nearly three-quarters of the people in HP’s research division are now dedicated to a single project: a powerful new kind of computer known as “the Machine.” It would fundamentally redesign the way computers function, making them simpler and more powerful. If it works, the project could dramatically upgrade everything from servers to smartphones—and save HP itself.

“People are going to be able to solve problems they can’t solve today,” says Martin Fink, HP’s chief technology officer and the instigator of the project. The Machine would give companies the power to tackle data sets many times larger and more complex than those they can handle today, he says, and perform existing analyses perhaps hundreds of times faster. That could lead to leaps forward in all kinds of areas where analyzing information is important, such as genomic medicine, where faster gene-sequencing machines are producing a glut of new data. The Machine will require far less electricity than existing computers, says Fink, making it possible to slash the large energy bills run up by the warehouses of computers behind Internet services. HP’s new model for computing is also intended to apply to smaller gadgets, letting laptops and phones last much longer on a single charge.

It would be surprising for any company to reinvent the basic design of computers, but especially for HP to do it. It cut research jobs as part of downsizing efforts a decade ago and spends much less on research and development than its competitors: $3.4 billion in 2014, 3 percent of revenue. In comparison, IBM spent $5.4 billion—6 percent of revenue—and has a much longer tradition of the kind of basic research in physics and computer science that creating the new type of computer will require. For Fink’s Machine dream to be fully realized, HP’s engineers need to create systems of lasers that fit inside fingertip-size computer chips, invent a new kind of operating system, and perfect an electronic device for storing data that has never before been used in computers.

Pulling it off would be a virtuoso feat of both computer and corporate engineering.

New blueprint

In 2010, Fink was running the HP division that sells high-powered corporate servers and feeling a little paranoid. Customers were flocking to startups offering data storage based on fast, energy-efficient flash memory chips like the ones inside smartphones. But HP was selling only the slower, established storage technology of hard drives. “We weren’t responding aggressively enough,” says Fink. “I was frustrated we were not thinking far enough into the future.”

Trying to see a way to leapfrog ahead, he wondered: why not use new forms of memory not just to upgrade data storage but to reinvent computers entirely? Fink knew that researchers at HP and elsewhere were working on new memory technologies that promised to be much faster than flash chips. He and his chief technical advisor drew up a blueprint that would use those technologies to make computers far more powerful and energy efficient.

An internal paper on that idea, with the corporatese title “Unbound Convergence,” went nowhere. But when Fink was appointed HP’s chief technology officer and head of HP Labs two years later, he saw a chance to resurrect his proposal. “When I looked at all the teams in Labs, I could see the pieces were there,” he says. In particular, HP was working on a competitor to flash, based on a device called the memristor. Although the memristor was still in development, it looked to Fink as if it would someday be fast enough and store data densely enough to make his blueprint realizable. He updated his previous proposal and gave the computer a name he thought would be temporary: the Machine. It stuck.

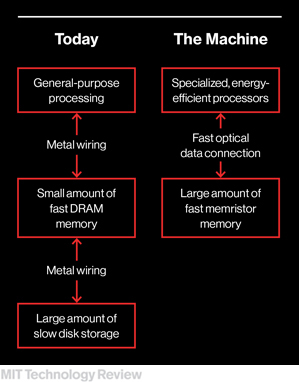

The Machine is an attempt to update the design that has defined the guts of computers since the 1970s. Essentially, computers are constantly shuttling data back and forth between different pieces of hardware that hold information. One, known as storage, keeps your photos and documents plus the computer’s operating system. It consists of hard drives or flash memory chips, which can fit a lot of data into a small space and retain it without power (engineers call it “nonvolatile” memory). But both hard drives and flash chips read and write data very slowly relative to the pace that a computer’s processor can work on it. When a computer needs to get something done, the data must be copied into short-term memory, which uses a technology 10,000 or more times faster: DRAM (dynamic random-access memory). This type of memory can’t store data very densely and goes blank when powered down.

The two-tier system of storage and memory means computers spend a lot of time and energy moving data back and forth just to get into a position to use it. This is why your laptop can’t boot up instantly: the operating system must be retrieved from storage and loaded into memory. One constraint on the battery life of your smartphone is its need to spend energy keeping data alive in DRAM even when it is idling in your pocket.

That may be a mere annoyance for you, but it’s a costly headache for people working on computers that do the sort of powerful number-crunching that’s becoming so important in all kinds of industries, says Yuanyuan Zhou, a professor at the University of California, San Diego, who researches storage technologies. “People working on data-intensive problems are limited by the traditional architecture,” she says. HP estimates that around a third of the code in a typical piece of data analysis software is dedicated purely to juggling storage and memory, not to the task at hand. This doesn’t just curtail performance. Having to transfer data between memory and storage also consumes significant energy, which is a major concern for companies running vast collections of servers, says Zhou. Companies such as Facebook spend millions trying to cut the huge power bills for their warehouses of computers.

The Machine is designed to overcome these problems by scrapping the distinction between storage and memory. A single large store of memory based on HP’s memristors will both hold data and make it available for the processor. Combining memory and storage isn’t a new idea, but there hasn’t yet been a nonvolatile memory technology fast enough to make it practical, says Tsu-Jae King Liu, a professor who studies microelectronics at the University of California, Berkeley. Liu is an advisor to Crossbar, a startup working on a memristor-like memory technology known as resistive RAM. It and a handful of other companies are developing the technology as a direct replacement for flash memory in existing computer designs. HP is alone, however, in saying its devices are ready to change computers more radically.
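Today's operating systems offer only a rough analogy to this idea, and what follows is not HP's programming model. The Python sketch contrasts two ways of changing one number in a file of fixed-size records. The first copies the whole file from storage into memory, edits it, and copies it back, which is the kind of shuttling code HP says fills data-analysis software. The second uses the standard `mmap` module so the program updates the bytes in place, as if the file were memory. On a conventional machine the mapped pages still pass through DRAM behind the scenes. A single pool of fast nonvolatile memory would, in principle, remove that hidden copy. The record layout, one 8-byte counter per record, is an assumption chosen for illustration.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical layout: one little-endian 8-byte signed counter per record.
RECORD = struct.Struct("<q")


def increment_two_tier(path: str, index: int) -> None:
    """Two-tier style: copy from storage into memory, edit, copy back."""
    with open(path, "rb") as f:
        data = bytearray(f.read())            # storage -> memory
    offset = index * RECORD.size
    (value,) = RECORD.unpack_from(data, offset)
    RECORD.pack_into(data, offset, value + 1)
    with open(path, "wb") as f:
        f.write(data)                         # memory -> storage


def increment_mapped(path: str, index: int) -> None:
    """Single-address-space style: update the record where it lives."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mem:
        offset = index * RECORD.size
        (value,) = RECORD.unpack_from(mem, offset)
        RECORD.pack_into(mem, offset, value + 1)
        mem.flush()                           # ask the OS to persist the change


def read_counter(path: str, index: int) -> int:
    """Read a single counter straight from the file."""
    with open(path, "rb") as f:
        f.seek(index * RECORD.size)
        return RECORD.unpack(f.read(RECORD.size))[0]


if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    os.close(fd)
    with open(path, "wb") as f:
        f.write(RECORD.pack(0) * 4)           # four counters, all zero
    increment_two_tier(path, 1)
    increment_mapped(path, 1)
    print(read_counter(path, 1))              # 2
    os.remove(path)
```

Both functions produce the same result. The difference is that the mapped version never makes an explicit copy of the file in program memory.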

To make the Machine work as well as Fink imagines, HP needs to create memristor memory chips and a new kind of operating system designed to use a single, giant store of memory. Fink’s blueprint also calls for two other departures from the usual computer design. One is to move data between the Machine’s processors and memory using light pulses sent over optical fibers, a faster and more energy-efficient alternative to metal wiring. The second is to use groups of specialized energy-efficient chips, such as those found in mobile devices, instead of individual, general-purpose processors. The low-energy processors, made by companies such as Intel, can be bought off the shelf today. HP must invent everything else.

Primary occupation

No one has built a fundamentally new operating system for decades. For more than 40 years, every “new” operating system has actually been built or modeled on one that came before, says Rich Friedrich, director of system software for the Machine. Academic research on operating systems has been minimal because existing systems are so dominant.

The work of Friedrich and his colleagues will be crucial. The software must draw together the Machine’s various unusual components into a reliable system unlike any other computer ever built. The group also has to help market that computer. If the operating system doesn’t look attractive to other companies and programmers, the Machine’s technical merits will be irrelevant. For that reason, HP is working on two new operating systems at the same time. One is based on the widely used Linux system and will be released this summer, along with software to emulate the hardware it needs to run. Linux++, as it is called, can’t make full use of the Machine’s power but will be compatible with most existing Linux software, so programmers can easily try it out. Those who like it will be able to step up to HP’s second new operating system, Carbon, which won’t be finished for two years or more. It will be released as open source, so anyone can inspect or modify its code, and is being designed from the ground up to unleash the full power of a computer with no division between storage and memory. By starting from scratch, Friedrich says, this operating system will remove all the complexity, caused by years of updates on top of updates, that leads to crashes and security weaknesses.

Tests with the closest thing to a working version of the Machine—a simulation running inside a cluster of powerful servers—hint at what Carbon and the new computer might be able to deliver once up and running. In one trial, the simulated Machine and a conventional computer raced to analyze a photo and search a database of 80 million other images to find the five that were most visually similar. The off-the-shelf, high-powered HP server completed the task in about two seconds. The simulated Machine needed only 50 milliseconds.
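The benchmark is a nearest-neighbor search. Each image is represented as a numeric feature vector, and the search returns the stored entries closest to the query. The sketch below is a generic brute-force version built on assumed inputs: short made-up vectors, with cosine similarity as the measure. HP has not said how its test represented or compared images. Scanning every stored vector is memory-bound work, so its speed depends largely on how quickly data reaches the processor.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query: Sequence[float],
                 database: Dict[str, Sequence[float]],
                 k: int = 5) -> List[Tuple[str, float]]:
    """Brute-force scan: score every stored image and keep the k best."""
    scored = ((name, cosine_similarity(query, vec)) for name, vec in database.items())
    return heapq.nlargest(k, scored, key=lambda item: item[1])


if __name__ == "__main__":
    # Hypothetical 3-dimensional "features"; real systems use far more dimensions.
    images = {
        "beach.jpg": [0.9, 0.1, 0.0],
        "dunes.jpg": [0.8, 0.2, 0.1],
        "forest.jpg": [0.1, 0.9, 0.2],
        "city.jpg": [0.0, 0.2, 0.9],
    }
    for name, score in most_similar([1.0, 0.1, 0.0], images, k=2):
        print(f"{name}: {score:.3f}")
```

A production system would typically use an approximate index instead of scoring all 80 million entries. The plain loop is kept here because it shows how much data each query has to touch.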

Handling such tasks tens or hundreds of times faster—with the same energy expenditure—could be a crucial advantage at a time when more and more computing problems involve huge data sets. If you have your genome sequenced, it takes hours for a powerful computer to refine the raw data into the finished sequence that can be used to scrutinize your DNA. If the Machine shortened that process to minutes, genomic research could move faster, and sequencing might be easier to use in medical practice. Sharad Singhal, who leads HP’s data analysis research, expects particularly striking improvements for problems involving data sets in the form of a mathematical graph—where entities are linked by a web of connections, not organized in rows and columns. Examples include the connections between people on Facebook or between the people, planes, and bags being moved around by an airline. And wild new applications are likely to emerge once the Machine is working for real. “Techniques that we would discard as impractical suddenly become practical,” Singhal says. “People will think of ways to use this technology that we cannot think of today.”
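Graph data differs from rows and columns in a concrete way. The sketch below stores a tiny hypothetical social network as an adjacency list, mapping each person to their connections, and finds everyone within two hops of one person. The names and the two-hop query are illustrative assumptions, not HP's workload. Each step jumps to wherever a neighbor's record happens to sit. That irregular access pattern is one reason such problems are expected to benefit from keeping the whole graph in fast memory.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical "who knows whom" graph: each person maps to their connections.
Graph = Dict[str, List[str]]


def within_hops(graph: Graph, start: str, max_hops: int) -> Set[str]:
    """Breadth-first search: everyone reachable from start in at most max_hops links."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        person, depth = frontier.popleft()
        if depth == max_hops:
            continue                          # don't expand past the hop limit
        for friend in graph.get(person, []):
            if friend not in seen:
                seen.add(friend)
                frontier.append((friend, depth + 1))
    seen.discard(start)                       # report other people, not the start
    return seen


if __name__ == "__main__":
    friends: Graph = {
        "ana": ["ben", "chen"],
        "ben": ["ana", "dev"],
        "chen": ["ana"],
        "dev": ["ben", "eli"],
        "eli": ["dev"],
    }
    print(sorted(within_hops(friends, "ana", 2)))   # ['ben', 'chen', 'dev']
```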

Perfecting the memristor is crucial if HP is to deliver on that striking potential. That work is centered in a small lab, one floor below the offices of HP’s founders, where Stanley Williams made a breakthrough about a decade ago.


Williams had joined HP in 1995 after David Packard decided the company should do more basic research. He came to focus on trying to use organic molecules to make smaller, cheaper replacements for silicon transistors (see “Computing After Silicon,” September/October 1999). After a few years, he could make devices with the right kind of switchlike behavior by sandwiching molecules called rotaxanes between platinum electrodes. But their performance was maddeningly erratic. It took years more work before Williams realized that the molecules were actually irrelevant and that he had stumbled into a major discovery. The switching effect came from a layer of titanium, used like glue to stick the rotaxane layer to the electrodes. More surprising, versions of the devices built around that material fulfilled a prediction made in 1971 of a completely new kind of basic electronic device. When Leon Chua, a professor at the University of California, Berkeley, predicted the existence of this device, engineering orthodoxy held that all electronic circuits had to be built from just three basic elements: capacitors, resistors, and inductors. Chua calculated that there should be a fourth; it was he who named it the memristor, or resistor with memory. The device’s essential property is that its electrical resistance—a measure of how much it inhibits the flow of electrons—can be altered by applying a voltage. That resistance, a kind of memory of the voltage the device experienced in the past, can be used to encode data.

HP’s latest manifestation of the component is simple: just a stack of thin films of titanium dioxide a few nanometers thick, sandwiched between two electrodes. Some of the layers in the stack conduct electricity; others are insulators because they are depleted of oxygen atoms, giving the device as a whole high electrical resistance. Applying the right amount of voltage pushes oxygen atoms from a conducting layer into an insulating one, permitting current to pass more easily. Research scientist Jean Paul Strachan demonstrates this by using his mouse to click a button marked “1” on his computer screen. That causes a narrow stream of oxygen atoms to flow briefly inside one layer of titanium dioxide in a memristor on a nearby silicon wafer. “We just created a bridge that electrons can travel through,” says Strachan. Numbers on his screen indicate that the electrical resistance of the device has dropped by a factor of a thousand. When he clicks a button marked “0,” the oxygen atoms retreat and the device’s resistance soars back up again. The resistance can be switched like that in just picoseconds, about a thousand times faster than the basic elements of DRAM and using a fraction of the energy. And crucially, the resistance remains fixed even after the voltage is turned off.
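The write-and-read cycle Strachan demonstrates can be captured in a deliberately simplified model. A cell holds a resistance. A voltage pulse of one polarity lowers it roughly a thousandfold, and the opposite polarity raises it again. A read compares the resistance with a threshold. The resistance values, switching voltage, and threshold in the sketch are made-up illustrative numbers, not HP device parameters. Only the thousandfold ratio comes from the demonstration. Nonvolatility, the property that matters most here, is modeled simply by the state persisting between calls with no voltage applied.

```python
from dataclasses import dataclass

# Illustrative values only (assumptions, not HP specifications).
HIGH_OHMS = 1_000_000.0       # "0": no conducting bridge, high resistance
LOW_OHMS = HIGH_OHMS / 1000   # "1": bridge formed, ~1,000x lower resistance
SWITCH_VOLTS = 1.0            # hypothetical pulse magnitude needed to switch
READ_THRESHOLD = 10_000.0     # resistances below this read as 1


@dataclass
class MemristorCell:
    resistance: float = HIGH_OHMS

    def pulse(self, volts: float) -> None:
        """Apply a pulse; voltages below the switching level leave the state alone."""
        if volts >= SWITCH_VOLTS:
            self.resistance = LOW_OHMS      # bridge forms: resistance drops
        elif volts <= -SWITCH_VOLTS:
            self.resistance = HIGH_OHMS     # bridge dissolves: resistance soars back

    def read(self) -> int:
        """Sense the resistance and decode it as a bit."""
        return 1 if self.resistance < READ_THRESHOLD else 0


def write_bit(cell: MemristorCell, bit: int) -> None:
    """Encode a bit by choosing the polarity of the write pulse."""
    cell.pulse(SWITCH_VOLTS if bit else -SWITCH_VOLTS)


if __name__ == "__main__":
    cell = MemristorCell()
    write_bit(cell, 1)
    # Nothing is applied between write and read; the stored resistance persists.
    print(cell.read(), cell.resistance)
    write_bit(cell, 0)
    print(cell.read(), cell.resistance)
```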

When Williams announced the memristor in 2008, it began what he now calls a roller coaster ride, because the basic research finding quickly progressed to become a crucial development project for HP. “Sometimes the adrenaline can be a little overwhelming,” he says. Memristors have been his team’s primary occupation since 2008, and in 2010 HP announced that it had struck a deal with the South Korean memory chip manufacturer SK Hynix to commercialize the technology. At the time, HP was focused on getting memristors to replace flash memory in conventional computers. Then, in 2012, Fink piled on more pressure by putting memristors at the heart of his blueprint for the Machine.

Risky business

Using memristors, whether as a replacement for flash memory or as the basis for the Machine, would require packaging them into memory chips that combine a dense array of the devices with conventional silicon control circuitry. But such chips don’t exist at the moment. And it’s not clear when HP will be able to get hold of any. It is up to a chip maker such as SK Hynix to develop reliable memristor chips that are suited to its production lines. So far it sends HP silicon wafers with memristors that can be tested individually, not used in a computer.

Fink and Williams say that the first prototype memory chips could arrive sometime next year. (Previous statements by Williams made it seem as if the technology would hit the market in 2013, but he and Fink say these remarks were misinterpreted.) A spokesman for Hynix, Heeyoung Son, declined to comment on whether 2016 is feasible. “There is no specific time line,” he said. “It will take some time to reach that.”

Edwin Kan, a professor at Cornell University who works on memory technology, says that progress on memristors and similar devices appeared to stall when companies tried to integrate them into dense, reliable chips. “It looks promising, but it has been looking promising for too long,” he says.

Dmitri Strukov, one of Williams’s former collaborators at HP, says memristors have yet to pass a key test. Strukov, an assistant professor at the University of California, Santa Barbara, and lead author on the 2008 paper announcing the memristor, says that while technical publications released by HP and SK Hynix have shown that individual memristors can be switched trillions of times without failing, it’s not yet clear that large arrays perform the same way. “That’s nontrivial,” he says.

Stanley Williams says the memristor will offer an unrivaled combination of speed, density, and energy efficiency.

Surrounded by prototype memristors in his lab, Williams cheerily says his confidence in the technology remains high. “If I had thought something else was coming along that was better, I would have jumped on that in a femtosecond,” he says. Williams keeps a chart on the wall summarizing the competition from a handful of other memory technologies that companies including IBM and Samsung are positioning as replacements for flash. Those companies and HP are at similar stages: progress is being reported, though the commercial future of their devices is unclear. But Williams says none of the other technologies has as good a combination of speed, density, and energy efficiency as memristors. Even though the first generation of memristor chips has yet to be introduced, his group is already looking at ways for subsequent generations to pack in more and more data. Those include stacking memristors up in layers and storing more than a single bit in each memristor.

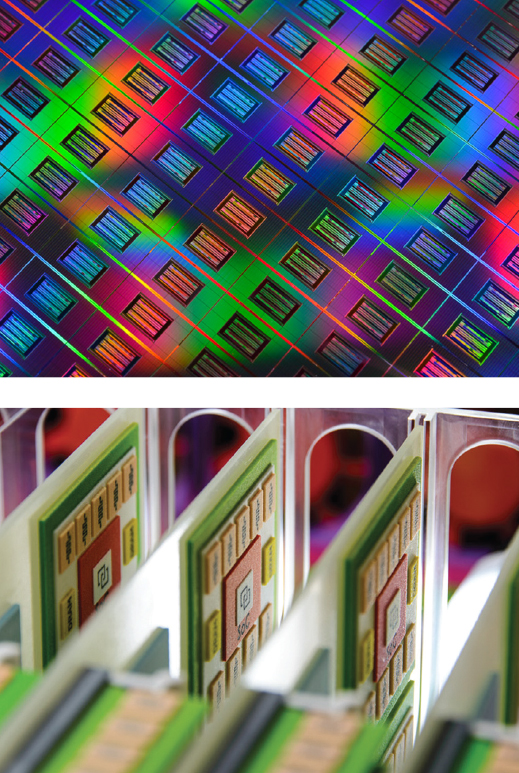

In the lab next door, researchers are working on optical data connections compact enough to link up the components inside a computer. But this project is at an even earlier stage. HP’s engineers can show off a silicon wafer covered in tiny lasers, each a quarter the width of a human hair, and use them to pulse light down slim optical fibers. The lasers are intended to be packaged into small chips and added to the Machine’s circuit boards to connect its memory and processors. Existing computers use metal wires for that, because conventional optical connection technology is too bulky. Fink expects this to be the last of the Machine’s components to be ready, around the end of the decade.

When that time comes, the first full version of the Machine could be rented out for companies or universities to access remotely for large-scale data analysis, Fink says. Soon after, Machine-style servers will be available to buy. Work will also begin on scaling down the new computer for use in other types of devices, such as laptops, smartphones, and even cable TV boxes, he says.

If everything goes to plan, Hewlett Packard Enterprise will be five years old when the first version of the Machine can ride to its rescue. But the market may not be much more welcoming than it is to HP’s existing business today.

New computing technologies tend to start out expensive and difficult to use. Interest in HP’s servers is waning because cloud services offer companies the exact opposite—a cheaper and easier alternative to services that once had to be built in-house. Even companies that buy their own servers are likely to keep seeing significant performance improvements from steady upgrades in technologies such as networking and storage for several years, says Doug Burger, a senior researcher at Microsoft. “A clean sheet is great because you get to redefine everything, but you have to redefine everything,” he says. “Disruptive, successful projects in very complex system architectures are pretty rare.”

Fink argues that his researchers can create tools that ease the process of migrating to the strange new future of computing. And he thinks companies will become more open to embracing a new platform as incremental upgrades to conventional computers start to yield diminishing returns.

Somewhat paradoxically, Fink also says that the prospect of failing to deliver the Machine at all doesn’t trouble him. HP can fall back on selling memristor chips and photonic interconnects as ways to upgrade the flawed architecture of today’s computers, he says—though HP isn’t in the business of manufacturing components today. “We’re gaining a lot by doing this,” he says. “It’s not all or nothing.”

HP’s struggles seem to suggest just the opposite.

