A Startup’s Neural Network Can Understand Video

In recent years, researchers at companies including Google and Facebook have made impressive breakthroughs in training software to understand what’s going on in images, thanks to a technique known as deep learning. Now a startup called Clarifai is offering a service that uses deep learning to understand video.

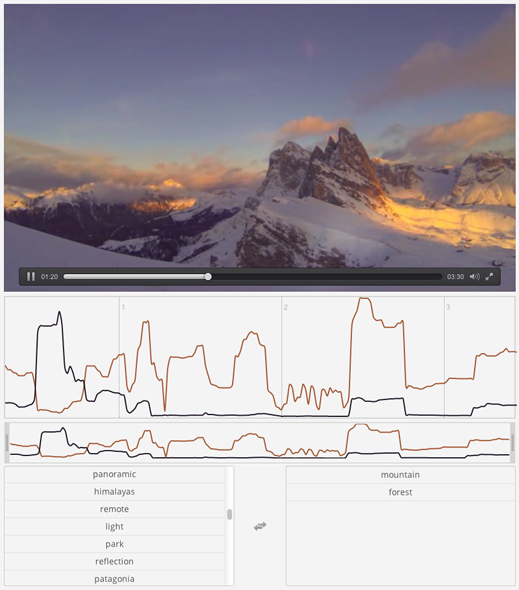

The company says its software can rapidly analyze video clips to recognize 10,000 different objects or types of scene. In a demo given last week at a conference on deep learning, Clarifai’s cofounder and CEO Matthew Zeiler uploaded a clip that included footage of a varied alpine landscape. The software created a timeline with graph lines summarizing when different objects or types of scene were detected. It showed exactly when “snow” and “mountains” occurred individually and together. The software can analyze video faster than a human could watch it; in the demonstration, the 3.5-minute clip was processed in just 10 seconds, roughly 21 times faster than real time.
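To make the timeline idea concrete, here is a minimal sketch. It assumes a recognizer has already produced a set of labels for each second of footage. The per-second label sets, the one-second granularity, and the helper names `intervals` and `timeline` are all hypothetical; this is not Clarifai's API or method. The code turns per-second detections into time spans for each label, plus the spans where all the chosen labels appear together, like “snow” and “mountains” in the demo.

```python
from typing import Dict, List, Set, Tuple

Span = Tuple[int, int]  # (start_second, end_second), end exclusive


def intervals(flags: List[bool]) -> List[Span]:
    """Collapse a per-second presence list into contiguous time spans."""
    spans: List[Span] = []
    start = None
    for t, present in enumerate(flags):
        if present and start is None:
            start = t  # a label just appeared
        elif not present and start is not None:
            spans.append((start, t))  # the label just disappeared
            start = None
    if start is not None:
        spans.append((start, len(flags)))  # still present at the end of the clip
    return spans


def timeline(frames: List[Set[str]], labels: List[str]) -> Dict[str, List[Span]]:
    """Spans for each label individually, plus spans where all labels co-occur."""
    result = {label: intervals([label in f for f in frames]) for label in labels}
    together = [all(label in f for label in labels) for f in frames]
    result[" & ".join(labels)] = intervals(together)
    return result


# Hypothetical per-second detections for a six-second alpine clip.
frames = [{"snow"}, {"snow", "mountains"}, {"snow", "mountains"},
          {"mountains"}, set(), {"snow"}]
tl = timeline(frames, ["snow", "mountains"])
print(tl)
```

Here “snow” spans seconds 0 to 3 and 5 to 6, “mountains” spans 1 to 4, and the two overlap from second 1 to 3: the kind of individual and joint occurrences the demo's graph lines displayed.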

Clarifai is offering the technology as a service and expects it to be used for things like matching ads to content in online videos or developing new ways to organize video collections and edit footage.

Deep learning involves processing data through a network of simple simulated “neurons” that have been trained using example data. Clarifai’s technology originated in research at New York University, and in 2013 the company took the top five spots at the leading annual contest for software that identifies the content of images.
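Deep-learning networks stack many simulated neurons in layers and are typically trained with backpropagation. The sketch below shrinks the idea to a single neuron trained with the classic perceptron rule. It is a deliberately simplified illustration of “neurons trained using example data,” not anything Clarifai has described. The toy task (logical AND) and the function names `fire` and `train` are illustrative assumptions.

```python
from typing import List, Tuple

Example = Tuple[Tuple[int, int], int]  # ((input1, input2), desired_output)


def fire(weights: List[int], bias: int, inputs: Tuple[int, int]) -> int:
    """A simulated neuron: weighted sum of inputs plus a bias, then a threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(data: List[Example], epochs: int = 20) -> Tuple[List[int], int]:
    """Perceptron rule: after each mistake, nudge weights toward the right answer."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - fire(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# Example data: the neuron should output 1 only when both inputs are 1.
data: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(data)
predictions = [fire(weights, bias, x) for x, _ in data]
print(weights, bias, predictions)
```

A real image or video recognizer uses millions of such units with smooth activation functions, but the principle is the same: the behavior comes from weights adjusted to fit labeled examples rather than from hand-written rules.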

Most research in the field has so far focused on still images, not video. Clarifai launched a still-image recognition service last year. Another startup that uses deep learning, Dextro, launched its own video-processing service at the end of December.

Anyone can put Clarifai’s new video system to the test. On its website, you can upload a video clip of up to 10 megabytes in size for analysis. The different objects or types of scene detected might include cars, trees, or people. The software can also apply more abstract descriptive concepts, such as “fun” or “togetherness.”

Zeiler believes his technology could lead to a new approach to serving up advertising alongside videos online. “The software can identify where in the video is the optimum place for an ad to be placed,” he said.

Companies can already pay to position their ads next to videos of a particular type or ones on a particular subject. Zeiler believes that being able to automatically match ads with particular moments in videos should be even more attractive to advertisers. Clarifai’s technology could make it possible, for example, for Starbucks to make its ads appear whenever coffee is being consumed in footage.

Zeiler says he is already working with companies interested in making use of the video analysis technology. The company is also working on having the software do more. Two features under development will let the software automatically summarize what happens in a video and recognize when a particular activity has taken place, Zeiler says.
