Why Neural Networks Look Set to Thrash the Best Human Go Players for the First Time

Computers are rapidly beginning to outperform humans in more or less every area of endeavor. For example, machine vision experts recently unveiled an algorithm that outperforms humans in face recognition. Similar algorithms are beginning to match humans at object recognition too. And human chess players long ago gave up the fight to beat computers.

But there is one area where humans still triumph. That is in playing the ancient Chinese game of Go. Computers have never mastered this game. The best algorithms only achieve the skill level of a very strong amateur player which the best human players easily outperform.

That looks set to change thanks to the work of Christopher Clark and Amos Storkey at the University of Edinburgh in Scotland. These guys have applied the same machine learning techniques that have transformed face recognition algorithms to the problem of finding the next move in a game of Go. And the results leave little hope that humans will continue to dominate this game.

In brief, Go is a two-player game usually played on a 19 x 19 grid. The players alternately place black and white stones on the grid in an attempt to end up occupying more of the board than their opponent when the game finishes. Players can remove their opponent’s stones by surrounding them with their own.

Experts think there are two reasons why computers have failed to master Go. The first is the sheer number of moves that are possible at each stage of the game. Go players have 19 x 19 = 361 possible starting moves and there are usually hundreds of possible moves at any point in the game. By contrast, the number of moves in chess is usually about 50.

The second problem is that computers find it difficult to evaluate the strengths and weaknesses of a board position. In chess, simply adding up the value of each piece left on the board gives a reasonable indication of the strength of a player’s position. But this does not work in Go. “Counting the number of stones each player has is a poor indicator of who is winning,” say Clark and Storkey.

The way that state-of-the-art Go algorithms tackle this problem is to play out the entire game after every move and to do this in many different ways. If the computer wins in the majority of these games, then that move is deemed a good one.

Clearly, this is a time-consuming and computationally intensive task. Even so, it generally fails to beat human Go experts who can usually evaluate the state of a Go board with little more than a glance.

Many experts believe that the secret to human’s Go-playing mastery is pattern recognition—the ability to spot strengths and weaknesses based on the shape that the stones make rather than by looking several moves ahead.

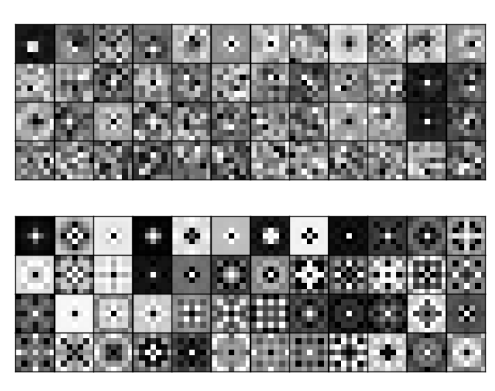

That’s why the recent advances in pattern recognition algorithms could help computers do much better. These advances have used massive databases of images to train deep convolutional neural networks to recognize objects and faces with the kind of accuracy that now matches human performance. So it is reasonable to imagine that the same kind of approach could make a big difference to the automated evaluation of Go boards.

And that is exactly what Clark and Storkey have done. The question that these guys have trained a deep convolutional neural network to answer is: given a snapshot of a game between two Go experts, is it possible to predict the next move in the game?

The way they have approach this is by using a vast database of Go games to train a neural network to find the next move. Clark and Storkey used over 160,000 games between experts to generate a database of 16.5 million positions along with their next move. They used almost 15 million of these position-move pairs to train an eight-layer convolutional neural network to recognize which move these expert players made next. This was a process that took several days.

They then used the rest of the dataset to test the neural network. In other words, they presented the network with a board position from a game and asked it to pick the next move. Clark and Storkey say that the trained network was able to predict the next move up to 44 percent of the time “surpassing previous state of the art on this task by significant margins.”

That’s Interesting not least because the new approach does not use any of the previous moves to make its decision; neither does it evaluate future positions.

Having trained the neural network, Clark and Storkey then played it against two of the best Go algorithms around. The first is called GNU Go, which plays at a level that is equivalent to an intermediate amateur with a ranking of 6-8 kyu. (Go rankings range from a beginner with a rank of 30-20 kyu to a professional expert with a ranking of 1 kyu).

The second was a state-of-the-art program called Fuego 1.1, which has a ranking of approximately 5-4 kyu. A human player would usually take many years of study to reach this level.

The results clearly suggest that the writing is on the wall for human Go players. Clark and Storkey’s neural network beat GNU Go almost 90 percent of the time in a run of 200 games. In other words, after a few days training, the neural net was able to beat GNU Go consistently.

Against Fuego 1.1, it fared less well, winning only just over 10 percent of its games. Nevertheless, that is a significant achievement. “Being able to win even a few games against this opponent indicates a high degree of skill was acquired,” say Clark and Starkey.

That’s clearly very promising. “Even though the networks are playing using a ‘zero step look ahead’ policy, and using a fraction of the computation time as their opponents, they are still able to play better then GNU Go and take some games away from Fuego,” they say.

And there is clearly potential for improvement, for example, by combining this approach with others that do use previous moves and look ahead. One idea that Clark and Starkey suggest is to run the convolutional neural network in parallel with the conventional approach to help prune the tree of possible moves that need to be explored.

There is no suggestion from Clark and Storkey that this approach will beat the best Go players in the world. But surely, it is only a matter of time before even Go players will have to bow to their computerized overlords.

Ref: arxiv.org/abs/1412.3409: Teaching Deep Convolutional Neural Networks to Play Go

Keep Reading

Most Popular

Large language models can do jaw-dropping things. But nobody knows exactly why.

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

How scientists traced a mysterious covid case back to six toilets

When wastewater surveillance turns into a hunt for a single infected individual, the ethics get tricky.

The problem with plug-in hybrids? Their drivers.

Plug-in hybrids are often sold as a transition to EVs, but new data from Europe shows we’re still underestimating the emissions they produce.

Google DeepMind’s new generative model makes Super Mario–like games from scratch

Genie learns how to control games by watching hours and hours of video. It could help train next-gen robots too.

Stay connected

Get the latest updates from

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.