Should Industrial Robots Be Able to Hurt Their Human Coworkers?

How much should a robot be allowed to hurt its coworkers? It’s a puzzling question facing companies developing a new breed of industrial robots that work alongside humans on the factory floor.

Setting limits on the level of pain a robot may (accidentally) inflict on a human is a crucial goal of the first safety standards being drawn up for these “collaborative” robots, which are meant to do things like pass people tools or help fit together parts on an assembly line (see “How Human-Robot Teamwork Will Upend Manufacturing”).

Existing guidance from regulators such as the U.S. Occupational Safety and Health Administration assumes that robots operate only when humans aren’t nearby. That has meant it’s mostly small manufacturers that have adopted collaborative robots, says Esben Ostergaard, chief technology officer at Universal Robots, a Danish company that sells robot arms designed to collaborate with humans.

For collaborative robots to really change manufacturing, and earn significant profits, they must be embraced by large companies, for whom safety certification is crucial. “We lived a happy life until we reached the big companies—then we got all these problems about standards,” says Ostergaard. “It’s not the law, but the big companies have to have a standard to hold onto.” Universal is working with car manufacturers including BMW, and with major packaged goods companies, says Ostergaard.

The International Organization for Standardization (ISO) is due to release an update next year to its existing industrial-robot safety standards, modifying them to cover collaborative robots. Its rules are expected to be influential with U.S. and European safety authorities and more or less set the bar for how collaborative robots can be designed and used.

At a conference on collaborative robots run last week by the Robotic Industries Association, Ostergaard was one of several to voice worries that the rules would place unrealistic demands on robot designers and operators.

The ISO’s update will, for instance, include guidance on the maximum force with which a robot may strike a human it is working with. Those limits will be based on research under way at the German Institute for Occupational Safety and Health. One part of that work involves using a machine to touch human volunteers with gradually increasing force to determine the pressure needed to cause the sensation of pain in 29 different regions of the body.

Björn Matthias, a member of the group drawing up the addition to the ISO standard, argued at the meeting that robots should be permitted to hurt humans sometimes. “Contact between human and robot is going to be infrequent and not part of normal intended use,” he said. “I would say that you can tolerate being above the pain sensation threshold.” The Swiss energy and automation company ABB, where Matthias works as a researcher, is set to release its first collaborative robot in 2015.

If a robot caused “a bruise a day,” that would clearly be intolerable, said Matthias. But it would be acceptable if a worker received a “substantially painful” blow in the case of an accident. He and others argue that more restrictive guidelines would unnecessarily increase the compliance burden on companies that want to employ collaborative robots and would limit how useful those robots can be when working with humans.

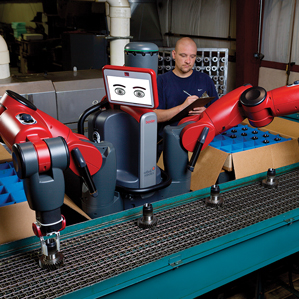

Collaborative robots launched so far, such as Rethink Robotics’ Baxter (see “This Robot Could Transform Manufacturing”), are relatively puny and work with only small loads because humans are expected to be within reach most of the time. When MIT Technology Review first saw Baxter in 2012, Rethink cofounder Rodney Brooks allowed the robot to strike him in the head to prove the point.

The ISO’s updated guidelines will consider how collaborative robots could safely wield much more force, according to Pat Davison, director of standards development at the Robotic Industries Association. In a technique known as “speed and separation monitoring,” laser sensors allow a robot to perform dangerous actions when no one is around and then gradually slow down and eventually stop if a human approaches. “The further away I am, the more hazardous the robot’s activity can be,” Davison said at last week’s meeting. That could allow humans and robots to collaborate on tasks such as moving and assembling heavy parts.
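The idea behind speed and separation monitoring can be sketched in a few lines of Python. This is only an illustration of the behavior Davison describes, not the method in the ISO guidance: the function name `allowed_speed`, the linear ramp, and every distance and speed value are assumptions chosen for the example.

```python
def allowed_speed(distance_m, stop_distance_m=0.5,
                  full_speed_distance_m=2.0, max_speed_mps=1.5):
    """Return a speed cap (m/s) given the distance to the nearest person.

    All thresholds are hypothetical placeholders, not values from any standard.
    """
    # A person is too close: the robot must stop.
    if distance_m <= stop_distance_m:
        return 0.0
    # Nobody is nearby: the robot may run at full speed.
    if distance_m >= full_speed_distance_m:
        return max_speed_mps
    # In between, scale the cap linearly so the robot slows as a person approaches.
    fraction = (distance_m - stop_distance_m) / (full_speed_distance_m - stop_distance_m)
    return max_speed_mps * fraction


if __name__ == "__main__":
    # Simulate a person walking toward the robot.
    for distance in (3.0, 2.0, 1.25, 0.6, 0.3):
        print(f"{distance:.2f} m -> speed cap {allowed_speed(distance):.2f} m/s")
```

A real system would take the distance from laser sensors and would also have to account for how long the robot needs to come to a halt; the sketch leaves both out.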

The robotics industry’s standards problem is complicated by the public perception of robots, which remains mostly shaped by science fiction. That, and the tendency to distrust new technologies more than familiar ones, can lead to unreasonable expectations, says Phil Crowther, a global product manager at ABB. “Do you think planes are safe? They sometimes fall out of the sky,” he said. “The fundamentals are the same—you have to limit risk to an acceptable level.”
