Data Mining Reveals How Social Coding Succeeds (And Fails)

The process of developing software has undergone a huge transformation in the last decade or so. One of the key changes has been the evolution of social coding websites, such as GitHub and BitBucket.

These allow anyone to start a collaborative software project that other developers can contribute to on a voluntary basis. Millions of people have used these sites to build software, sometimes with extraordinary success.

Of course, some projects are more successful than others. And that raises an interesting question: what are the differences between successful and unsuccessful projects on these sites?

Today, we get an answer from Yuya Yoshikawa at the Nara Institute of Science and Technology in Japan and a couple of pals at the NTT Laboratories, also in Japan. These guys have analysed the characteristics of over 300,000 collaborative software projects on GitHub to tease apart the factors that contribute to success. Their results provide the first insights into social coding success from this kind of data mining.

A social coding project begins when a group of developers outline a project and begin work on it. These are the “internal developers” and have the power to update the software in a process known as a “commit”. The number of commits is a measure of the activity on the project.

External developers can follow the progress of the project by “starring” it, a form of bookmarking on GitHub. The number of stars is a measure of the project’s popularity. These external developers can also request changes, such as additional features and so on, in a process known as a pull request.

Yoshikawa and co begin by downloading the data associated with over 300,000 projects from the GitHub website. This includes the number of internal developers, the number of stars a project receives over time and the number of pull requests it gets.

The team then analyse the effectiveness of the project by calculating factors such as the number of commits per internal team member, the popularity of the project over time, the number of pull requests that are fulfilled and so on.

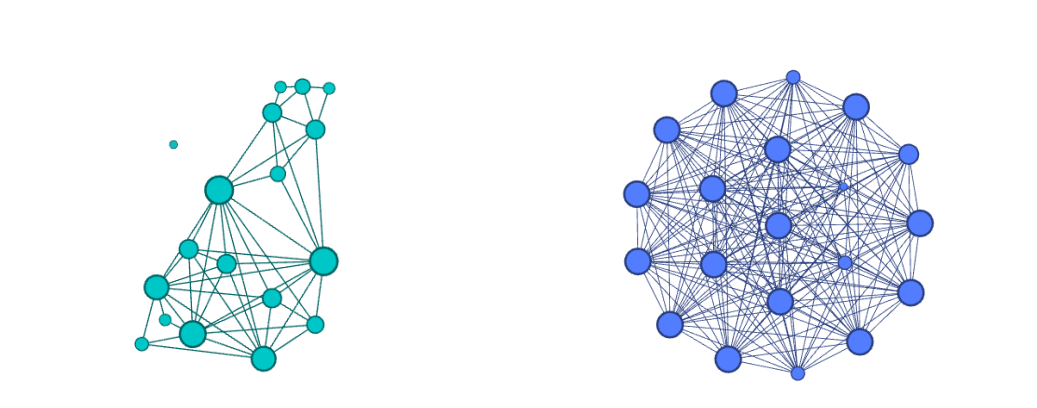

The results provide a fascinating insight into the nature of social coding. Yoshikawa and co say the number of internal developers on a project plays a significant role in its success. “Projects with larger numbers of internal members have higher activity, popularity and sociality,” they say.

However, there is a downside to large projects as well. One measure of the efficiency of a project is the number of commits per internal team member. Yoshikawa and co say the data shows that the most efficient projects involve a single person working alone.

As a project grows, efficiency is roughly constant in projects with between two and 60 members but falls sharply after this. “We conclude that it is undesirable to involve more than 60 developers in a project if we want the project members to work efficiently,” they say.
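To make the efficiency measure concrete, here is a minimal sketch of how commits per internal member could be computed and averaged by team size. The function names and the sample figures are invented for illustration and are not taken from the paper or from GitHub data.

```python
from collections import defaultdict
from statistics import mean


def commits_per_member(total_commits, internal_members):
    """Efficiency as described in the article: commits per internal developer."""
    if internal_members <= 0:
        raise ValueError("a project needs at least one internal member")
    return total_commits / internal_members


def mean_efficiency_by_team_size(projects):
    """Average efficiency for each team size.

    `projects` is an iterable of (internal_members, total_commits) pairs.
    """
    buckets = defaultdict(list)
    for members, commits in projects:
        buckets[members].append(commits_per_member(commits, members))
    # Sort by team size so the trend is easy to read.
    return {size: mean(values) for size, values in sorted(buckets.items())}


# Hypothetical projects: (internal members, total commits).
sample = [(1, 120), (1, 80), (4, 200), (4, 120), (80, 800)]
result = mean_efficiency_by_team_size(sample)
print(result)
```

With real data, plotting these averages against team size is one way to look for the kind of drop-off the team report beyond 60 members.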

The team also study how work is distributed between internal members. In general, teams with more evenly distributed work are more likely to have higher activity.
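The article does not say which measure the team use for how evenly work is shared. As an assumption, the sketch below uses the Gini coefficient of commits per internal member, a common inequality measure: 0 means everyone commits equally, and values near 1 mean one person does almost everything. The function name and sample numbers are hypothetical.

```python
def gini(commit_counts):
    """Gini coefficient of per-member commit counts (an assumed evenness measure)."""
    counts = sorted(commit_counts)
    n = len(counts)
    if n == 0:
        raise ValueError("need at least one member")
    total = sum(counts)
    if total == 0:
        return 0.0  # nobody has committed, so treat the split as even
    # Standard closed form over values sorted in ascending order.
    weighted = sum(rank * value for rank, value in enumerate(counts, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


even_team = gini([10, 10, 10, 10])    # work shared equally
lopsided_team = gini([0, 0, 0, 40])   # one member does everything
print(even_team, lopsided_team)
```

A lower score here corresponds to the "more evenly distributed work" that the study associates with higher activity.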

And when projects receive requests for changes from external developers, those that fulfil these requests faithfully are likely to be more popular.

The team also look at which types of project are most popular. Unsurprisingly, they say that software designed to run on Apple’s various products has the highest popularity.

That is an interesting insight into an increasingly common form of software development. GitHub alone says it has 6 million registered users.

Of course, these guys have found only correlations, and an important question is one of causation. It is possible, for example, that the positive correlations they have found are the result of hidden variables that this study does not reveal.

The best way to find out is for somebody to put into practice the lessons learnt in this study and see whether they work. There is certainly good reason to think that many of their conclusions are related to good practice.

Over to the developers!

Ref: arxiv.org/abs/1408.6012 : Collaboration on Social Media: Analyzing Successful Projects on Social Coding
