A Google Glass App Knows What You’re Looking At

Google has shown that the camera integrated into Google Glass, the company’s head-worn computer, can capture some striking video. Now machine learning company AlchemyAPI has built an app that uses that camera to recognize what a person is looking at. The app was built at an employee hack session the company held this month to experiment with ways to demonstrate its new image recognition service.

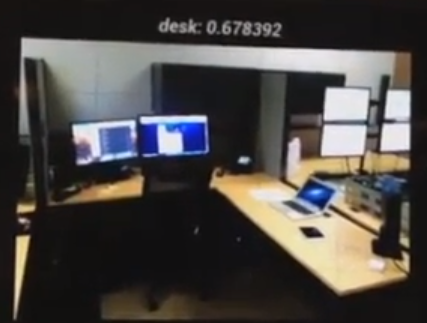

The app can either work on photos taken by a person wearing Glass, or constantly grab images from the device’s camera. Those are sent to the cloud or a nearby computer for processing by AlchemyAPI’s image recognition software. The software sends back its best guess at what it sees and then Glass will display, or speak, the verdict.

“There’s a slight delay and then you’ll hear it say ‘arm chair’ or ‘desktop computer,’” says AlchemyAPI’s CEO Elliot Turner. “It takes about 250ms to analyze a given frame.”


You could say Turner’s app simply states the obvious, but doing that in (almost) real time is no mean feat for computer vision software. AlchemyAPI’s image recognition system is built on a system of complex simulated neural networks of the type known as “deep learning”, which can produce systems that learn faster and smarter than more established techniques. Google has been a pioneer in this area (see “Deep Learning”) and many other large companies including Microsoft (see “Microsoft Brings Star Trek’s Voice Translator to Life”) and Facebook (“Facebook Launches Advanced AI Research Group”) are also investing in the technology.

An online demo shows the capability of AlchemyAPI’s image recognition software. It shows the system responding to a constant train of images pulled from Google Image search and Flickr.

Although far from perfect, the software’s performance is impressive. The insight the demo gives into the certainty of each judgement it makes also suggests it could easily be made to appear more competent. Many of the system’s failures come when it tries to be very specific. Saying “This is an insect” would be better than “I’m not sure what this is, it could be a mantis or a cricket”. Turner says that early customers for the image recognition offering are mostly media companies that want to categorise and search large collections of unlabelled photographs.
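The idea of answering with a broader category when the specific guesses are shaky can be sketched in a few lines of Python. This is only an illustration, not AlchemyAPI's method: the `PARENT` hierarchy, the `hedged_label` function, the confidence scores, and the 0.6 threshold are all assumptions made up for the example.

```python
# Hypothetical hierarchy mapping specific labels to a broader category.
# These names are illustrative and do not come from AlchemyAPI.
PARENT = {
    "mantis": "insect",
    "cricket": "insect",
    "armchair": "furniture",
    "desk": "furniture",
}


def hedged_label(scores, threshold=0.6):
    """Pick a label, backing off to a broader category when unsure.

    scores: dict mapping specific labels to confidence values in [0, 1].
    threshold: assumed minimum confidence needed to state a label.
    Returns a label string, or None if nothing is confident enough.
    """
    # Use the most specific answer if the classifier is confident in it.
    best, best_score = max(scores.items(), key=lambda kv: kv[1])
    if best_score >= threshold:
        return best

    # Otherwise pool the confidence of sibling labels under each parent.
    pooled = {}
    for label, score in scores.items():
        parent = PARENT.get(label)
        if parent is not None:
            pooled[parent] = pooled.get(parent, 0.0) + score

    if pooled:
        parent, parent_score = max(pooled.items(), key=lambda kv: kv[1])
        if parent_score >= threshold:
            return parent

    # Nothing clears the threshold, so decline to guess.
    return None
```

With made-up scores of 0.45 for "mantis" and 0.40 for "cricket", neither guess clears the threshold alone, but together they support the safer answer "insect".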

Object recognition systems can be compared by testing them against the standard ImageNet database, which contains more than 14 million images labeled with 22,000 different categories. Turner won’t share exact figures, but says his system performs on par with the best systems publicly tested against that benchmark, which typically get about 15-17 percent of their guesses wrong. One such system now powers the object recognition built into Google’s image search feature for its Google Plus social network, after Google bought a startup founded by deep learning pioneer Geoffrey Hinton of the University of Toronto this year.
