First Trial of Crowdsourced Grading for Computer Science Homework

One of the most time-consuming aspects of teaching is grading homework assignments. So here’s an interesting crowdsourcing tool from Luca de Alfaro and Michael Shavlovsky at the University of California, Santa Cruz, that shifts the burden from the teacher to the students themselves.

The new tool is called CrowdGrader and it is available at http://www.crowdgrader.org/.

The basic idea is straightforward. De Alfaro and Shavlovsky’s website allows students to submit their homework and then redistributes it to their peers for assessment. Each student receives five pieces of anonymous work to grade.
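To make the redistribution step concrete, here is a minimal sketch of one way to hand each student five pieces of their peers' work. It is an illustration only, not CrowdGrader's actual assignment scheme: the function name `assign_reviews`, the shuffled-circle approach and the `seed` parameter are all assumptions.

```python
import random


def assign_reviews(student_ids, reviews_per_student=5, seed=0):
    """Hypothetical peer-review assignment (not CrowdGrader's own method).

    Students are placed in a shuffled circle and each one reviews the next
    `reviews_per_student` submissions around it. Nobody reviews their own
    work, and every submission receives the same number of reviews.
    """
    n = len(student_ids)
    if n <= reviews_per_student:
        raise ValueError("need more students than reviews per student")
    order = list(student_ids)
    random.Random(seed).shuffle(order)  # hide any link to the class roster order
    return {
        order[i]: [order[(i + k) % n] for k in range(1, reviews_per_student + 1)]
        for i in range(n)
    }


if __name__ == "__main__":
    for reviewer, authors in assign_reviews(list("ABCDEFGH")).items():
        print(reviewer, "reviews work by", authors)
```

A circular layout is chosen here simply because it guarantees a balanced load; a real system would also need to handle late submissions and students who fail to review.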

It’s easy to imagine that the quality of student feedback would be poor. But de Alfaro and Shavlovsky have a useful trick to encourage high-quality assessment: each student’s grade depends in part on the quality of the feedback they give. In this test, students received 25 per cent of their mark on that basis. That provides plenty of incentive for them to be fair and consistent.
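The arithmetic behind that incentive can be sketched in a few lines. The 25 per cent weight comes from the test described above; the assumptions are that the remaining 75 per cent comes from the grade for the student's own submission and that both components share the same scale. The function name `final_grade` is hypothetical.

```python
def final_grade(submission_grade, review_quality, review_weight=0.25):
    """Blend a submission grade with a review-quality score.

    Assumption: both inputs are on the same scale (say 0 to 100) and the
    part of the mark not tied to reviewing comes from the submission.
    """
    if not 0.0 <= review_weight <= 1.0:
        raise ValueError("review_weight must be between 0 and 1")
    return (1.0 - review_weight) * submission_grade + review_weight * review_quality


if __name__ == "__main__":
    # A student with an 80 on the homework: careful reviewing versus none.
    print(final_grade(80, 100))
    print(final_grade(80, 0))
```

Under these assumptions, a student who skips reviewing altogether forfeits a quarter of the available mark, however good the homework itself.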

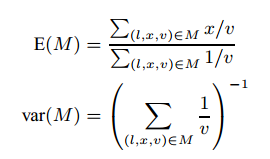

One important feature of the tool is an iterative algorithm that calculates the consensus grade for each piece of homework while simultaneously evaluating the quality of each student’s assessments by comparing them with those of his or her peers.
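The general shape of such an algorithm can be sketched as follows. This is a generic reputation-weighted average written for illustration, not the algorithm from the CrowdGrader paper: the function name, the inverse-squared-error weighting and the `iterations` and `eps` parameters are all assumptions.

```python
def consensus_grades(reviews, iterations=20, eps=0.01):
    """Illustrative iterative consensus (not the paper's actual algorithm).

    `reviews` maps (reviewer, submission) pairs to a numeric grade.
    Each round, a submission's consensus grade is the weighted average of
    the grades it received, and a reviewer's weight is the inverse of their
    mean squared distance from the current consensus.
    """
    reviewers = {r for r, _ in reviews}
    submissions = {s for _, s in reviews}
    weight = {r: 1.0 for r in reviewers}  # start by trusting everyone equally
    consensus = {}
    for _ in range(iterations):
        # Step 1: recompute each consensus grade using the current weights.
        for s in submissions:
            pairs = [(weight[r], g) for (r, s2), g in reviews.items() if s2 == s]
            total = sum(w for w, _ in pairs)
            consensus[s] = sum(w * g for w, g in pairs) / total
        # Step 2: re-score each reviewer against the new consensus.
        for r in reviewers:
            errors = [(g - consensus[s]) ** 2
                      for (r2, s), g in reviews.items() if r2 == r]
            weight[r] = 1.0 / (eps + sum(errors) / len(errors))
    return consensus, weight


if __name__ == "__main__":
    sample = {
        ("ann", "hw1"): 8, ("ann", "hw2"): 6,
        ("bob", "hw1"): 8, ("bob", "hw2"): 6,
        ("cat", "hw1"): 2, ("cat", "hw2"): 10,  # disagrees with both peers
    }
    grades, weights = consensus_grades(sample)
    print(grades)
    print(weights)
```

In this toy data, the two reviewers who agree pull the consensus towards their grades, and the outlier ends up with a much smaller weight. That weight is the kind of signal that could feed the review-quality portion of a student's mark.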

De Alfaro and Shavlovsky have tested CrowdGrader in the evaluation of coding assignments for various computer science classes they have taught. And they have compared the results with a control assessed by teaching assistants.

The results are generally positive. De Alfaro and Shavlovsky point out that the crowdsourced feedback from several students is far more detailed than anything a student receives from a single instructor.

“When instructors or teaching assistants are faced with grading a large number of assignments, the feedback they provide on each individual assignment is usually limited,” they say. “With CrowdGrader, students had access to multiple reviews of their homework submissions.”

What’s more, the students benefited from seeing the way that their peers tackled the same problem.

There are disadvantages as well. Assessments marked by a teaching assistant tend to be more consistent, with the same attention given to all aspects of the work. And for the students, a big disadvantage is the extra time they have to spend on CrowdGrader.

However, de Alfaro and Shavlovsky say that the two different grading options are of similar quality. “With teaching assistants, the risk is that they do not pay attention in their grading to the aspects where most effort is put (where the flaws are); with crowdsourced grades, the risk is in the inherent variability of the process,” they say.

That looks like a promising way to make better use of an instructor or teaching assistant’s time while improving the learning experience for the student. Of course, a big factor is the user interface and the training each student receives in making assessments.

But as crowdsourcing comes of age as both a scientific and problem-solving tool, there is a growing number of examples of how to do this well, such as the extraordinary crowdsourced science done at Zooniverse.org.

Given the big changes that are occurring in higher education around the world with online teaching services such as Udacity and the Khan Academy flourishing, it’s not hard to imagine that innovative ideas like this will find a large audience quickly.

Ref: arxiv.org/abs/1308.5273: CrowdGrader: Crowdsourcing the Evaluation of Homework Assignments
