From Our Archive: Wearable Computing, Long Before Google Glass

If you’re interested in Google Glass, the Internet giant’s ingenious, terrifying, baffling (tick as appropriate), head-worn computer display (see “Treading Carefully, Google Encourages Developers to Hack Glass”), you might like to read a feature article that appeared in the pages of MIT Technology Review nearly fifteen years ago, which puts the project in some historical context.

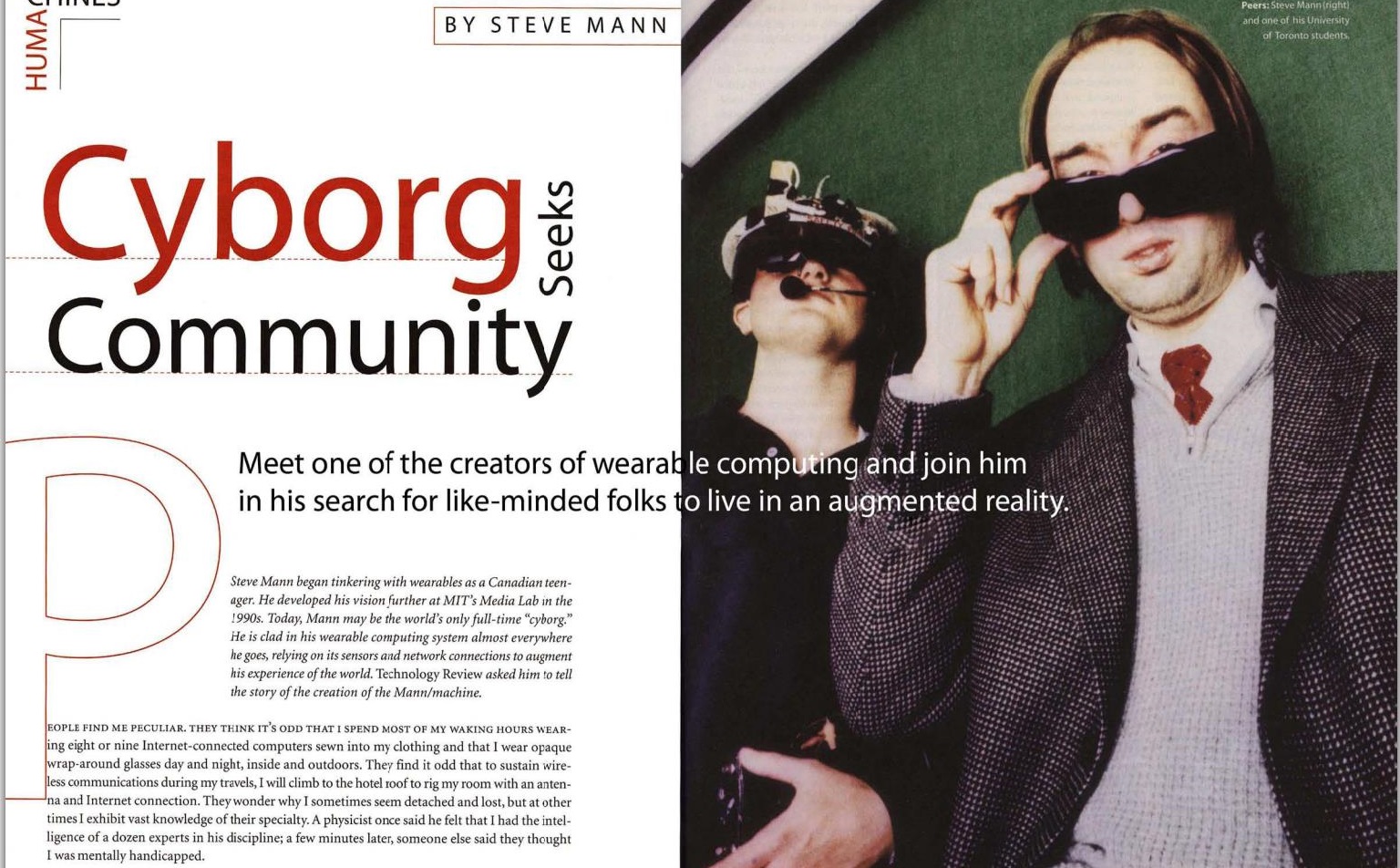

In the cover story “Cyborg Seeks Community”, Steve Mann, one of the pioneers of the field known as “wearable computing” along with Thad Starner (who is heavily involved with the Glass project at Google), gives a then-futuristic account of what it’s like to use an always-connected, head-mounted wearable computer. Some of it sounds remarkably similar to what we’re hearing about Glass. For example, Mann writes:

> [People] wonder why I sometimes seem detached and lost, but at other times I exhibit vast knowledge of their specialty. A physicist once said he felt that I had the intelligence of a dozen experts in his discipline; a few minutes later, someone else said they thought I was mentally handicapped.

To really enjoy the piece, you can sign up to download the PDF of that issue, which includes some great photos of Mann and his cohorts. Looking at these old images and reading Mann’s account, I can’t help feeling that for those brave pioneers, wearable computing was not only about finding new ways for technology to mediate your experience of the world. It was also about exploring a future that would seem too weird to most people, not to mention wearing your nerdiness like a giant badge of honor. So it seems particularly ironic to see wearable computers on the catwalk at New York Fashion Week.
